DataStax has been partnering with Pivotal to provide a real-time data platform at cloud scale for customers building real-time customer 360 applications and large-scale microservices on the Pivotal Cloud Foundry (PCF) application platform. With our two existing beta broker integrations, DataStax Enterprise for PCF Service Broker and DataStax Enterprise for PCF, customers can connect their applications running in Pivotal Cloud Foundry to an existing DataStax Enterprise (DSE) cluster or to a dynamically provisioned DSE cluster managed by Cloud Foundry BOSH. We have been receiving great feedback from our user community.

Since Pivotal Container Service (PKS) became generally available earlier this year, we have been working with Pivotal to explore our next-generation integration with PKS. To this end, we have developed a set of Kubernetes yaml files that deploy DataStax Enterprise inside a Kubernetes cluster managed by PKS, and we are building our next-generation broker integration on Pivotal's new service broker, Kibosh. Kibosh is an open service broker that, when asked to create a data service, deploys a Helm chart that stands up a DataStax Enterprise cluster on PKS. You can learn more about this broker integration solution from Pivotal in this article. As a provider of data services on the PCF platform, we will offer an installable tile on the PCF marketplace so that customers can deploy DSE in a PKS environment in a robust and consistent manner. This lets developers consume DSE as their real-time data platform within Pivotal Cloud Foundry’s paradigm.

This post provides background on why DataStax created a tool to help you manage your containerized DSE environment on PKS, followed by a guide to setting it up in your own PKS environment.

Benefits of running DataStax Enterprise on Kubernetes

Cloud applications are required to be contextual, always-on, real-time, highly distributed, and extremely scalable. Today, data layers like DataStax Enterprise are often deployed to bare-metal servers for performance reasons, but with the advances in cloud-native technology, there can be considerable advantages to running database infrastructure in containers.

Kubernetes offers advantages over traditional deployment lifecycle management solutions in supporting these cloud application characteristics: self-healing capabilities, elasticity, and efficient resource management. Kubernetes together with DataStax Enterprise, the always-on, distributed cloud database designed for hybrid cloud, allows customers to focus on developing their most innovative products and services and to stay competitive domestically and globally in ever-changing business environments.

The flexible nature of both Kubernetes and DataStax Enterprise enables customers to build a truly hybrid cloud deployment that meets data locality, data governance, security, and other industry-specific compliance requirements. The Kubernetes community has extended its support for stateful workloads such as DataStax Enterprise through the StatefulSet controller, which preserves each pod’s identity and persistent data across restarts. The community is strongly committed to improving the life cycle of stateful workloads inside Kubernetes, because these workloads play an increasingly critical role in cloud-native applications and microservices architectures running on Kubernetes.

TestDrive: Running DataStax Enterprise (DSE) on Pivotal Container Service (PKS) in Google Cloud Platform (GCP)

Before our next generation tile based on Kibosh is available for trial, you can experience running DSE in a PKS Kubernetes cluster today using our Kubernetes yaml files for development and testing purposes. Below we will show you the steps to deploy a DSE cluster in an existing PKS Kubernetes cluster.

Prerequisites:

- The pks CLI tool is available on your machine to access your PKS cluster environment.

- Credentials such as PKS_API, USERNAME and PASSWORD are at hand to run the “pks login” command and log in to the PKS CLI.

- Tools including wget and kubectl are installed on your machine. Ensure the kubectl client version is compatible with your PKS K8s cluster version.
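
The prerequisites above can be checked with a small shell sketch before going further. The function name `check_tools` is hypothetical, and the sketch only confirms that each command is on your PATH; it does not verify versions or credentials.

```shell
#!/bin/sh
# Hypothetical helper: print any required CLI tool that is missing from PATH.
# Returns 0 when every tool is found, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

A typical call would be `check_tools pks kubectl wget && echo "all prerequisites found"`. Once you are connected to the cluster, `kubectl version` prints both the client and server versions so you can compare them.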

Log in to your PKS Kubernetes cluster environment:

- Run

$ pks login -a -u -p -k - Run

$ pks clusters(to locate your PKS K8 cluster) - Run

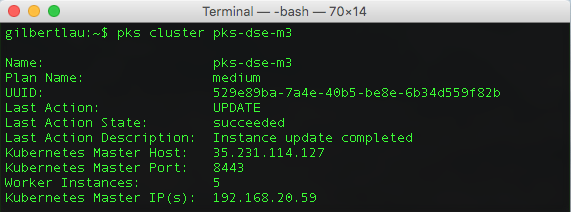

$ pks cluster(to ensure you have at least 4 worker instances to run our default DSE yaml configurations). The output should look similar to the following:

- Run

$ pks get-credentials(to target kubectl to your PKS K8 cluster) - Run

$ kubectl get nodes -o wide(to confirm you are connected to the PKS K8 cluster)
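
The worker-count requirement above can also be checked from kubectl output. The following is a minimal sketch, assuming every node listed by kubectl is a worker; the helper names `count_ready_nodes` and `has_enough_nodes` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
# Expects the output of `kubectl get nodes --no-headers` on stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical helper: succeed only if at least $1 nodes on stdin are Ready.
has_enough_nodes() {
  required=$1
  ready=$(count_ready_nodes)
  [ "$ready" -ge "$required" ]
}
```

For example, `kubectl get nodes --no-headers | has_enough_nodes 4 || echo "fewer than 4 Ready nodes"` warns before you attempt the default DSE deployment. Nodes in any other state, such as `NotReady` or `Ready,SchedulingDisabled`, are deliberately not counted.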

Deploy DSE in your PKS Kubernetes cluster:

- Create required configmaps for DSE and OpsCenter Statefulsets

$ git clone https://github.com/DSPN/kubernetes-dse

$ cd kubernetes-dse

$ kubectl create configmap dse-config --from-file=common/dse/conf-dir/resources/cassandra/conf --from-file=common/dse/conf-dir/resources/dse/conf

$ kubectl create configmap opsc-config --from-file=common/opscenter/conf-dir/agent/conf --from-file=common/opscenter/conf-dir/conf --from-file=common/opscenter/conf-dir/conf/event-plugins

$ kubectl create configmap opsc-ssl-config --from-file=common/opscenter/conf-dir/conf/ssl - Create OpsCenter admin’s password using K8 Secret

$ kubectl apply -f common/secrets/opsc-secrets.yaml - Deploy the yaml set to instantiate DSE and OpsCenter

$ kubectl apply -f gke/dse-suite.yaml - Run

$ kubectl get all(to view the status of your deployment) - Run

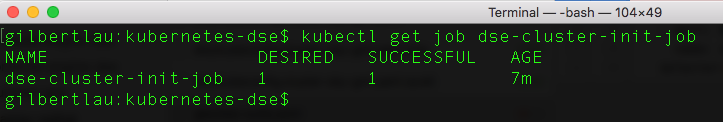

$ kubectl get job dse-cluster-init-job(to view if the status of dse-cluster-init-job has successfully completed. It generally takes about 10 minutes to spin up a 3-node DSE cluster.)

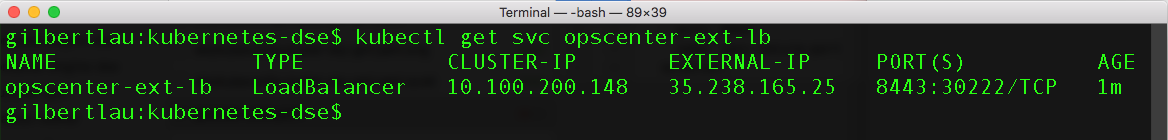

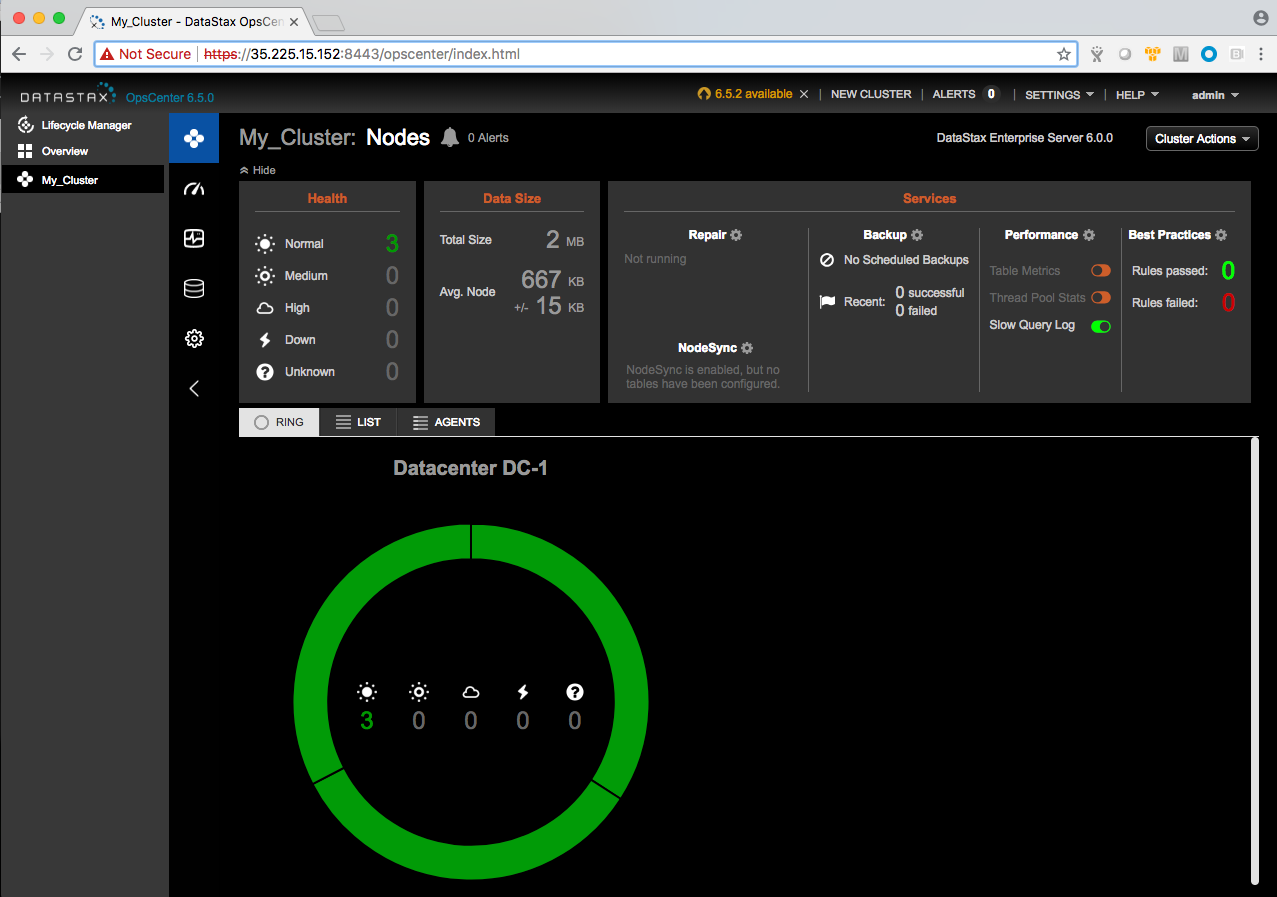

- Once complete, you can access the DataStax Enterprise OpsCenter web console to view the newly created DSE cluster by pointing your browser at https://:8443 with Username: admin and Password: datastax1!
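
Instead of polling the init job by hand, you could block until it finishes. This is a minimal sketch, assuming a kubectl client recent enough to include the `kubectl wait` subcommand (1.11 or later); the function name `wait_for_dse_init` and the 15-minute default timeout are assumptions chosen to leave headroom over the roughly 10-minute startup mentioned above.

```shell
#!/bin/sh
# Hypothetical helper: block until dse-cluster-init-job reports the
# Complete condition, or fail once the timeout (default 900s) expires.
wait_for_dse_init() {
  timeout=${1:-900s}
  kubectl wait --for=condition=complete --timeout="$timeout" \
    job/dse-cluster-init-job
}
```

Running `wait_for_dse_init && kubectl get pods -o wide` returns once the job has completed and then lists the pods. Pass a different duration, for example `wait_for_dse_init 20m`, if your cluster takes longer to start.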

Next steps

If you have any feedback, you can email us at partner-architecture@datastax.com. You can also visit DataStax Academy, our online learning portal, which offers introductory, intermediate, and advanced courses on DataStax Enterprise.

If you want to talk to us live, join us at SpringOne Platform, September 24-27 in Washington, D.C., where we will share an update on our latest integration. Stay tuned for future blog posts on our Kibosh integration.