What is a vector database?

A vector database is a database designed to store and query high-dimensional vectors, aiding in AI processes by enabling vector search capabilities.

Vector databases offer a specialized solution for managing high-dimensional data, essential for AI's contextual decision-making. But how exactly do they accomplish this?

A vector database is a specialized storage system designed to efficiently handle and query high-dimensional vector data, commonly used in AI and machine learning applications for fast and accurate data retrieval.

With the rapid adoption of and innovation around large language models, we need the ability to take large amounts of data, contextualize it, process it, and search it by meaning. Generative AI processes and applications rely on access to vector embeddings, a data type that captures the semantic meaning AI needs for long-term memory.

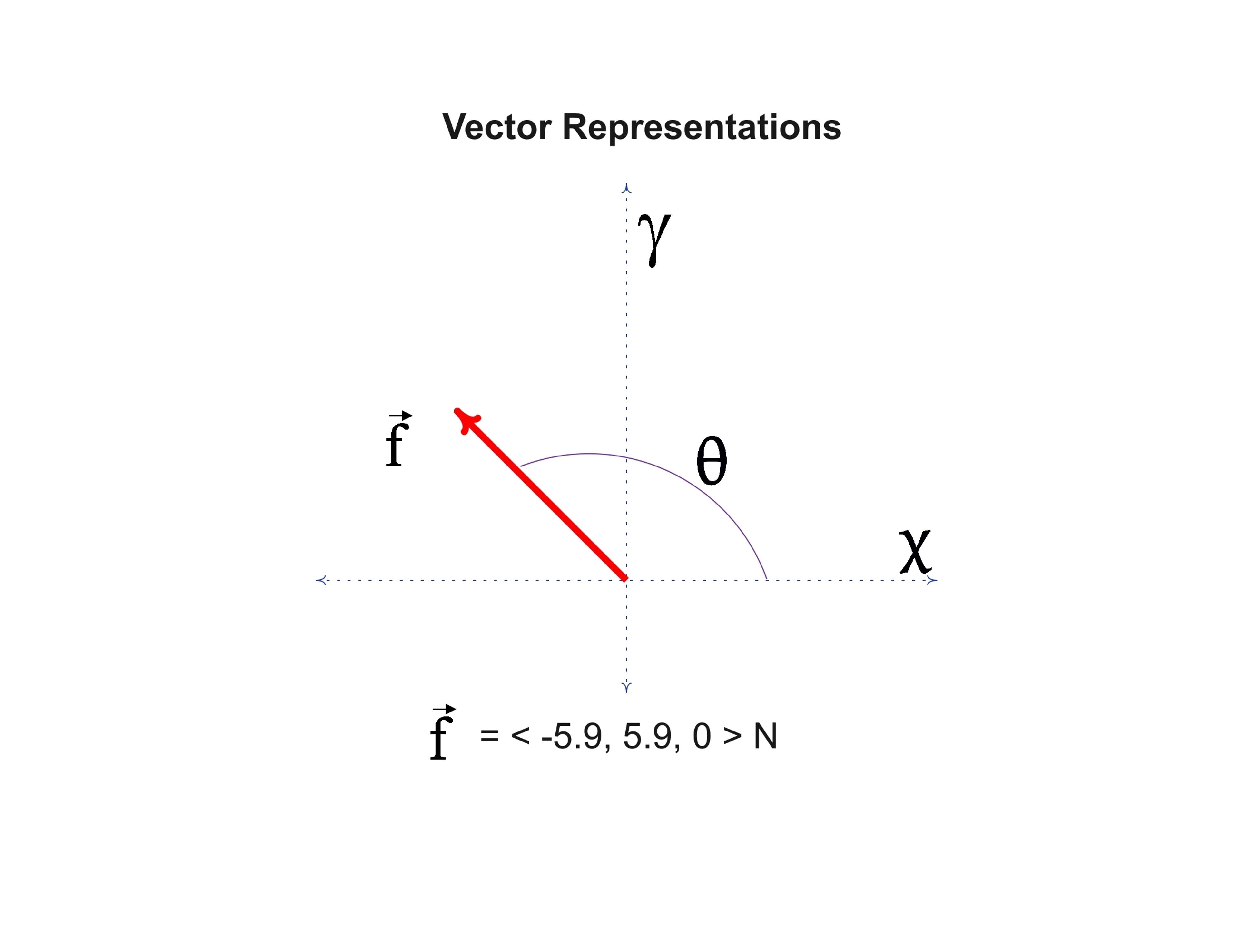

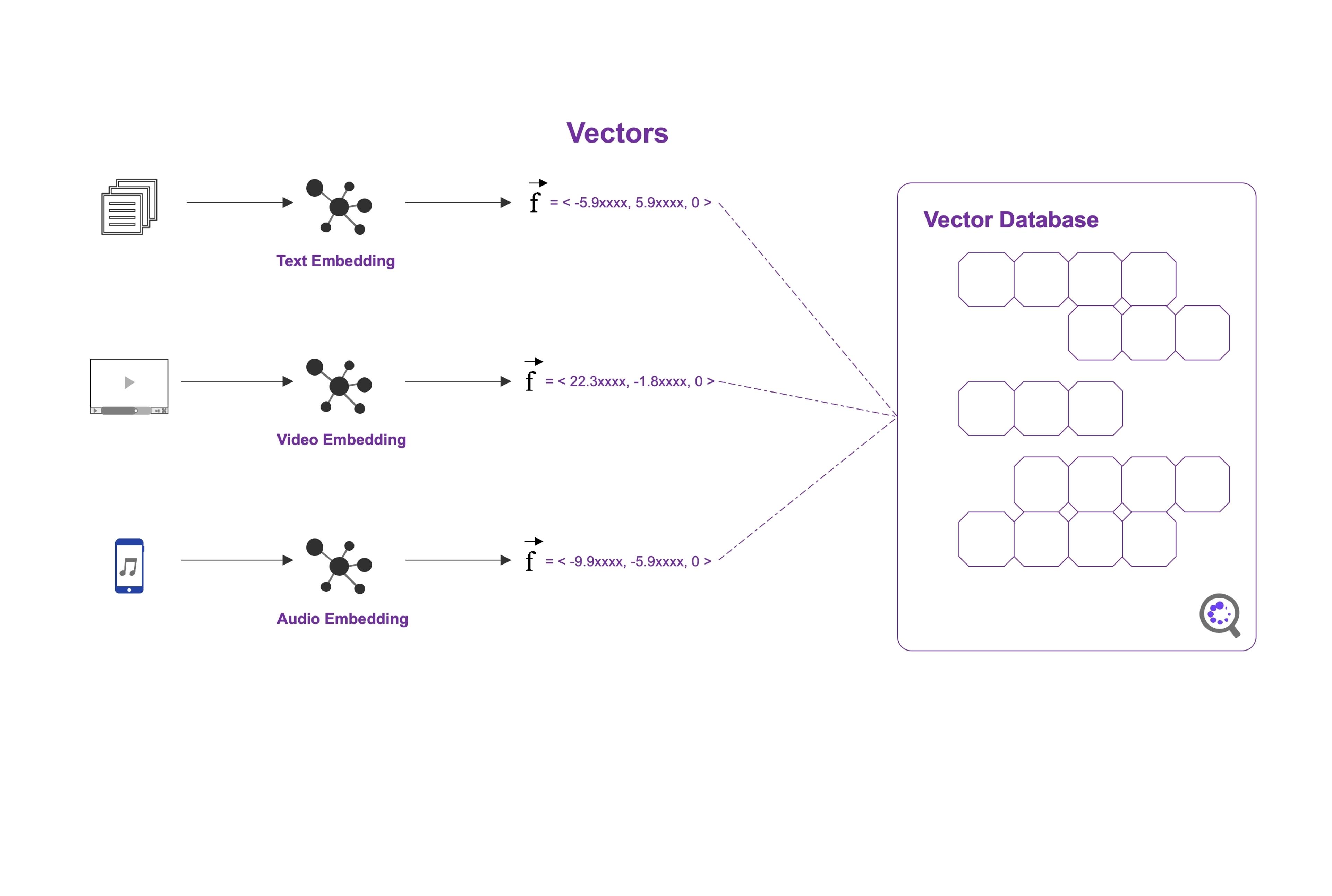

Vector embeddings are the data representation that AI models (such as large language models) use and generate to make complex decisions. Like memories in the human brain, they have complexity, dimension, patterns, and relationships.

We need to store and represent all of this in the underlying data structures, which makes it difficult to manage. That's why AI workloads need a purpose-built database (or brain) designed for highly scalable access and built specifically for storing and accessing vector embeddings.

Vector databases like DataStax Astra DB (built on Apache Cassandra) are designed to provide optimized storage and data access capabilities specifically for embeddings. Vectors are mathematical representations of objects or data points in a multi-dimensional space, where each dimension corresponds to a specific feature or attribute.
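To make "each dimension corresponds to a specific feature" concrete, here is a minimal sketch that compares vectors with cosine similarity, one common similarity measure. The three features and their scores are hypothetical; real embeddings come from a model, have hundreds or thousands of dimensions, and their individual dimensions are usually not labeled.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical feature vectors: [sweetness, acidity, crunchiness], each scored 0 to 1.
apple = [0.8, 0.4, 0.9]
pear = [0.9, 0.3, 0.7]
lemon = [0.1, 1.0, 0.2]

print(f"apple vs pear:  {cosine_similarity(apple, pear):.3f}")
print(f"apple vs lemon: {cosine_similarity(apple, lemon):.3f}")
```

The apple and pear vectors point in nearly the same direction, so they score much higher than apple and lemon.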

One of the primary values a database brings to application development is organizing and categorizing data efficiently for us. Vector databases are at the foundation of building generative AI applications because they enable vector search capabilities.

When machine learning was in its infancy, the datasets used to train models were typically small and finite. However, as generative AI has become mainstream, the amount of data used to train and augment models has grown dramatically.

This is why vector databases are so important. They simplify fundamental operations for generative AI apps by storing large volumes of data in the structure that generative AI applications need for optimized operations.

Vector databases provide many benefits, but the key ones are storing, retrieving, and interacting naturally with the large datasets that generative AI applications need.

For generative AI to function, it needs a brain to efficiently access all the embeddings in real time. It uses this to formulate insights, perform complex data analysis, and make generative predictions of what is being asked.

Think about how you process information and memories. One prominent technique is comparing memories to past events. For example, we know not to stick our hand into boiling water because we have, at some point in the past, been burned. We know not to eat a specific food because we remember how it affected us.

This is how vector databases work. They organize data (memories) for fast mathematical comparison so that generative AI models can find the most likely result. LLMs like ChatGPT, for example, need to determine what logically completes a thought or sentence by quickly and efficiently comparing all the options they have for a given query. The result must be highly accurate and responsive.

The challenge is that generative AI can't do this with traditional scalar and relational approaches. They're too slow, rigid, and narrowly focused.

Generative AI needs a database built to store the mathematical representation its brain is designed to process. It also needs to offer extreme performance, scalability, and adaptability to make the most of all the data it has available. In other words, it needs something designed to be more like the human brain, with the ability to store memory engrams and rapidly access, correlate, and process them on demand.

With a vector database, we can rapidly load and store events as embeddings and use our vector database as the brain that powers our AI models. This provides us with contextual information, long-term memory retrieval, similarity-based semantic correlation of data, and much, much more.

To enable efficient similarity search, vector databases employ specialized indexing structures and algorithms, such as tree-based structures (e.g., k-d trees), graph-based structures (e.g., k-nearest neighbor graphs), or hashing techniques (e.g., locality-sensitive hashing). These indexing methods help organize and partition the vectors in a way that facilitates fast retrieval of similar vectors.
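Of the indexing techniques listed above, locality-sensitive hashing is the shortest to sketch. The example below uses random-hyperplane LSH, a common LSH family for cosine similarity chosen here for illustration: vectors pointing in similar directions tend to fall on the same side of each random hyperplane, so they tend to share a bucket. The class and parameter names are hypothetical, and production systems typically combine several hash tables to improve recall.

```python
import random


class RandomHyperplaneLSH:
    """Toy LSH index: hashes each vector to a bit signature, one bit per hyperplane."""

    def __init__(self, dim: int, num_planes: int = 8, seed: int = 0):
        rng = random.Random(seed)
        # Each hyperplane through the origin is defined by a random normal vector.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets: dict[tuple[int, ...], list[str]] = {}

    def signature(self, vec: list[float]) -> tuple[int, ...]:
        # Bit is 1 if the vector lies on the positive side of the hyperplane.
        return tuple(
            1 if sum(p * v for p, v in zip(plane, vec)) >= 0 else 0
            for plane in self.planes
        )

    def add(self, item_id: str, vec: list[float]) -> None:
        self.buckets.setdefault(self.signature(vec), []).append(item_id)

    def candidates(self, query: list[float]) -> list[str]:
        # Only items in the query's bucket are considered; all other buckets are skipped.
        return self.buckets.get(self.signature(query), [])


index = RandomHyperplaneLSH(dim=3)
index.add("a", [1.0, 2.0, 3.0])
index.add("b", [-3.0, 0.5, -1.0])
print(index.candidates([2.0, 4.0, 6.0]))  # includes "a": positive scaling never changes the signature
```

The search then only computes exact distances for the handful of candidates in the matching bucket, which is where the speedup comes from.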

In a vector database, the vectors are typically stored along with their associated metadata, such as labels, identifiers, or any other relevant information. The database is optimized for efficient storage, retrieval, and querying of vectors based on their similarity or distance to other vectors.
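Storing metadata alongside each vector lets a query combine a similarity ranking with ordinary filters. This minimal sketch assumes an in-memory list of records and a brute-force scan with no index: it keeps records whose metadata exactly matches the filter, then ranks them by cosine similarity. The `Record` type and `search` function are hypothetical illustrations, not any particular database's API.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    id: str
    vector: list[float]
    metadata: dict[str, str] = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def search(records: list[Record], query: list[float], k: int = 2,
           where: Optional[dict[str, str]] = None) -> list[str]:
    """Return ids of the k records most similar to `query` whose metadata matches `where`."""
    where = where or {}
    matches = [
        r for r in records
        if all(r.metadata.get(key) == value for key, value in where.items())
    ]
    matches.sort(key=lambda r: cosine(r.vector, query), reverse=True)
    return [r.id for r in matches[:k]]


records = [
    Record("doc-1", [0.9, 0.1], {"lang": "en"}),
    Record("doc-2", [0.8, 0.2], {"lang": "fr"}),
    Record("doc-3", [0.1, 0.9], {"lang": "en"}),
]
print(search(records, [1.0, 0.0], k=1))                        # best match overall
print(search(records, [1.0, 0.0], k=1, where={"lang": "fr"}))  # best French match
```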

There are three steps to how a vector database works:

One of the questions that gets asked a lot is, "Do I even need a vector database?"

For small workloads, you probably don’t. However, if you're looking to leverage your data across applications and build generative AI applications for different use cases, a common repository for storing and retrieving that information gives those applications a more seamless, data-first way to work with it.

One of the most common use cases for a vector database is in providing similarity or semantic search capabilities. This provides a native ability to encode, store, and retrieve information based on how it relates to the data around it.
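A brute-force version of semantic search fits in a few lines: score every stored item against the query vector and keep the top k. The four-dimensional "embeddings" below are hand-written placeholders (a real application would get them from an embedding model), and a vector database would replace the full scan with an index.

```python
import heapq
import math

# Hypothetical hand-written embeddings standing in for model output.
corpus = {
    "How do I reset my password?": [0.9, 0.1, 0.0, 0.1],
    "Steps to recover account access": [0.8, 0.2, 0.1, 0.1],
    "Our office is closed on holidays": [0.0, 0.1, 0.9, 0.2],
    "Shipping times for international orders": [0.1, 0.0, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def top_k(query_vec: list[float], k: int = 2) -> list[str]:
    # Score every document, then keep the k highest-scoring ones.
    scored = ((cosine(query_vec, vec), text) for text, vec in corpus.items())
    return [text for _, text in heapq.nlargest(k, scored)]


# Hypothetical embedding of the query "Locked out after forgetting credentials".
print(top_k([0.85, 0.15, 0.05, 0.1]))
```

Neither top result shares a keyword with the query text; they rank first because their vectors are closest, which is the point of semantic search.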

By using a vector database to store your corpus of data, applications can see how the data relates to other data. They can also see how it's semantically similar to or different from all the other data within the system. Compared to traditional approaches that use keywords or key-value pairs, a vector database compares information using high-dimensional vectors, which typically offer hundreds or thousands of dimensions for comparison.

Storing your data in a vector database also provides extensibility to machine learning or deep learning applications. Probably the most common implementation is chatbots that use natural language processing to provide a more natural interaction.

From customer information to product documentation, using a vector database to store all of the relevant information gives machine learning applications the ability to store, organize, and retrieve information for transfer learning. This enables more efficient fine-tuning of pre-trained models.

As with other use cases, a vector database provides the foundation for generative AI applications and large language models (LLMs) by storing and retrieving large corpora of data for semantic search. Beyond that, however, a vector database enables content expansion, allowing LLMs to work with information beyond their original pre-training data.

A vector database also provides dynamic content retrieval, and it supports multimodal approaches in which applications leverage text, image, and video modalities for increased engagement.

While recommendation engines have been mainstream for a long time, a vector database provides many more paths for making recommendations.

Previous models relied on keyword and relational semantics. With a vector database, your app can make recommendations based on high-dimensional semantic relationships, using approximate nearest neighbor search (for example, hierarchical navigable small world, or HNSW, graphs) to provide the most relevant and timely information.
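One simple way to turn vectors into recommendations, shown here as a hypothetical sketch rather than how any particular engine works, is to average the vectors of items a user liked into a profile vector and recommend the closest items the user hasn't seen yet. The catalog vectors are made up, and the linear scan stands in for the ANN index a vector database would use.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def recommend(liked: list[str], catalog: dict[str, list[float]], k: int = 1) -> list[str]:
    dim = len(next(iter(catalog.values())))
    # User profile: the element-wise mean of the liked items' vectors.
    profile = [sum(catalog[item][d] for item in liked) / len(liked) for d in range(dim)]
    seen = set(liked)
    scored = [(cosine(profile, vec), item) for item, vec in catalog.items() if item not in seen]
    scored.sort(reverse=True)
    return [item for _, item in scored[:k]]


# Hypothetical item vectors: [space/science, food, how-to].
catalog = {
    "space-documentary": [0.9, 0.1, 0.0],
    "astronomy-podcast": [0.8, 0.2, 0.1],
    "rocket-launch-live": [0.85, 0.05, 0.1],
    "cooking-show": [0.0, 0.9, 0.3],
    "baking-tutorial": [0.1, 0.8, 0.4],
}
print(recommend(["space-documentary", "astronomy-podcast"], catalog))
```

Averaging is the simplest profile; weighting recent or highly rated items more heavily is a common refinement.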

The use cases for vector databases are pretty vast. Much of that comes down to the vector database being the next evolution in how we store and retrieve data.

One reason vector databases are important is that they enhance the capabilities of generative AI models when used at the core of a retrieval-augmented generation architecture.

Retrieval-augmented generation (RAG) architectures give generative AI applications the ability not only to generate new content but also to use storage and retrieval systems to incorporate contextual information from pre-existing datasets. Generative AI applications generally produce better results when they have more relevant information, so these pre-existing datasets tend to be large and distributed across multiple applications and locations.
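The retrieval half of RAG can be sketched without any external services. In this minimal example, a bag-of-words vector over the documents' vocabulary stands in for a real embedding model (an assumption made only to keep the code self-contained); the closest document is retrieved for a question and spliced into a prompt. `build_rag_prompt` is a hypothetical helper, and sending the prompt to an LLM is left out.

```python
import math
import re

documents = [
    "Vector search finds semantically similar documents.",
    "Our refund policy allows returns within 30 days.",
    "Support is available by email on weekdays.",
]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


# Vocabulary built from the documents; words outside it are ignored.
vocab = sorted({token for doc in documents for token in tokenize(doc)})
position = {token: i for i, token in enumerate(vocab)}


def embed(text: str) -> list[float]:
    """Toy bag-of-words embedding standing in for a real embedding model."""
    vec = [0.0] * len(vocab)
    for token in tokenize(text):
        if token in position:
            vec[position[token]] += 1.0
    return vec


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


index = [(doc, embed(doc)) for doc in documents]


def build_rag_prompt(question: str, k: int = 1) -> str:
    query = embed(question)
    ranked = sorted(index, key=lambda pair: cosine(query, pair[1]), reverse=True)
    context = "\n".join(f"- {doc}" for doc, _ in ranked[:k])
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_rag_prompt("What is the refund policy for returns?"))
```

Swapping `embed` for a real embedding model and `index` for a vector database query keeps the same shape: embed the question, retrieve the nearest chunks, and ground the prompt in them.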

This is where a vector database becomes an essential asset in implementing RAG. It simplifies multiple RAG-related tasks:

A traditional database stores standard data types such as strings, numbers, and other scalar values in rows and columns. You query it using indexes or key lookups that look for exact matches, and the query returns the matching rows.

By contrast, a vector database introduces a new data type, a vector. It builds optimizations around this data type to enable fast storage, retrieval, and nearest neighbor search semantics.

Traditional relational databases were optimized to provide vertical scalability for structured data. Traditional NoSQL databases were built to provide horizontal scalability for unstructured data. Solutions like Apache Cassandra have been built to provide optimizations for both structured and unstructured data, with added features for storing vector embeddings. That makes solutions like DataStax Astra DB well suited to both traditional and AI-based storage models.

One of the biggest differences is that traditional databases are designed to return exact results. Vector databases store data as vectors of floating-point numbers, so a search doesn't have to find an exact match; it can instead return the results most similar to the query.
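The difference between exact-match and similarity lookups shows up even with three stored vectors. In this sketch (hypothetical color vectors, Euclidean distance via `math.dist`), a query that matches no stored vector exactly returns nothing from the exact lookup but still finds its nearest neighbor.

```python
import math

# Hypothetical stored vectors (RGB-like color coordinates).
stored = {
    "red": (1.0, 0.0, 0.0),
    "orange": (1.0, 0.5, 0.0),
    "blue": (0.0, 0.0, 1.0),
}


def exact_lookup(query: tuple[float, ...]) -> list[str]:
    # Traditional-style lookup: only identical values match.
    return [name for name, vec in stored.items() if vec == query]


def nearest(query: tuple[float, ...]) -> str:
    # Similarity lookup: return whichever stored vector is closest.
    return min(stored, key=lambda name: math.dist(stored[name], query))


query = (0.95, 0.45, 0.05)
print(exact_lookup(query))  # [] : no stored vector equals the query
print(nearest(query))       # orange
```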

Vector databases use a range of Approximate Nearest Neighbor (ANN) search algorithms to retrieve large volumes of related information quickly and efficiently. This is where a purpose-built vector database, like DataStax Astra DB, provides significant advantages for generative AI applications. Traditional databases simply can’t scale to the amount of high-dimensional data that AI requires. AI applications need the ability to store, retrieve, and query closely related data in a highly distributed, highly flexible solution.
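ANN algorithms vary, but many share one idea: avoid comparing the query with every stored vector. Below is a minimal sketch of one such method, an inverted-file (IVF) index, chosen here for illustration rather than as the algorithm Astra DB uses. Vectors are grouped around centroids, and a query scans only the `nprobe` groups whose centroids are closest. As a simplifying assumption, centroids are randomly sampled data points instead of being trained with k-means.

```python
import math
import random


class IVFIndex:
    def __init__(self, vectors: dict[str, list[float]], num_lists: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.vectors = vectors
        # Simplification: sample centroids from the data instead of training them with k-means.
        self.centroids = rng.sample(list(vectors.values()), num_lists)
        self.lists: list[list[str]] = [[] for _ in range(num_lists)]
        for item_id, vec in vectors.items():
            self.lists[self._closest_lists(vec, 1)[0]].append(item_id)

    def _closest_lists(self, vec: list[float], n: int) -> list[int]:
        order = sorted(range(len(self.centroids)), key=lambda i: math.dist(self.centroids[i], vec))
        return order[:n]

    def search(self, query: list[float], k: int = 5, nprobe: int = 2) -> list[str]:
        # Scan only the nprobe closest lists; vectors in other lists are never compared.
        candidates = [item_id for i in self._closest_lists(query, nprobe) for item_id in self.lists[i]]
        candidates.sort(key=lambda item_id: math.dist(self.vectors[item_id], query))
        return candidates[:k]


def exact_search(vectors: dict[str, list[float]], query: list[float], k: int = 5) -> list[str]:
    return sorted(vectors, key=lambda item_id: math.dist(vectors[item_id], query))[:k]


rng = random.Random(42)
data = {f"vec-{i}": [rng.random() for _ in range(8)] for i in range(1000)}
ivf = IVFIndex(data, num_lists=8)
query = [rng.random() for _ in range(8)]
print("approximate:", ivf.search(query, k=5, nprobe=2))
print("exact:      ", exact_search(data, query, k=5))
```

Raising `nprobe` scans more lists and improves accuracy at the cost of speed; probing every list degenerates into an exact search.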

Vector databases offer several key benefits that make them highly valuable in various gen-AI applications, especially those involving complex and large-scale data analysis. Here are some of the primary advantages:

Vector databases are specifically designed to manage high-dimensional data efficiently. Traditional databases often struggle with the complexity and size of these datasets. Vector databases excel in storing, processing, and retrieving data from high-dimensional spaces without significant performance degradation.

One of the most significant advantages of vector databases is their ability to perform similarity and semantic searches. They can quickly find data points most similar to a given query. That's crucial for applications like recommendation engines, image recognition, and natural language processing.

Vector databases are designed to be highly scalable, handling massive datasets without a loss in performance. This scalability is essential for businesses and applications that generate and process large volumes of data regularly.

Vector databases offer faster query responses than traditional databases, especially for complex queries over large datasets. Because ANN search is approximate, it trades a small amount of accuracy for speed, but well-tuned indexes still return highly relevant results. For more information on speed and accuracy, please see GigaOm's report on vector database performance comparisons.
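ANN benchmarks commonly express accuracy as recall: the fraction of the true nearest neighbors that the approximate search actually returned. This small helper is a sketch of recall@k; the two result lists are hypothetical.

```python
def recall_at_k(approx_ids: list[str], exact_ids: list[str], k: int) -> float:
    """Fraction of the exact top-k neighbors that also appear in the approximate top-k."""
    truth = set(exact_ids[:k])
    if not truth:
        raise ValueError("need at least one exact neighbor to compute recall")
    return len(truth.intersection(approx_ids[:k])) / len(truth)


exact = ["a", "b", "c", "d"]   # hypothetical ground truth from a full scan
approx = ["a", "c", "x", "y"]  # hypothetical output of an ANN index
print(recall_at_k(approx, exact, k=4))  # 0.5
```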

Vector databases are particularly well-suited for AI and machine learning applications. They can store and process the data necessary for training and running machine learning models, particularly in fields like deep learning and natural language processing.

By enabling complex data modeling and analysis, vector databases allow organizations to gain deeper insights from their data. This capability is crucial for data-driven decision-making and predictive analytics.

These databases support the development of personalized user experiences by analyzing user behavior and preferences. This is particularly useful in marketing, e-commerce, and content delivery platforms where personalization can significantly enhance user engagement and satisfaction.

One of the biggest benefits vector databases bring to AI is the ability to leverage existing models across large datasets by enabling efficient access and retrieval of data for real-time operations. A vector database provides the foundation for the same type of memory recall we use in our organic brains.

With a vector database, artificial intelligence is broken into cognitive functions (large language models), memory recall (vector databases), specialized memory engrams and encodings (vector embeddings), and neurological pathways (data pipelines).

Working together, these processes enable artificial intelligence to learn, grow, and access information seamlessly.

The vector database holds all of the memory engrams. It provides cognitive functions with the ability to recall information that triggers similar experiences. This is similar to how, when an event occurs, our human memory recalls other events that invoke the same feelings of joy, sadness, fear, or hope.

With a vector database, generative AI processes can access large sets of data. They correlate that data in a highly efficient way and use it to make contextual decisions on what comes next.

When tapped into a nervous system (that is, data pipelines), this model enables storing and accessing new memories as they're made. This gives AI models the power to learn and grow adaptively by tapping into workflows that provide history, analytics, or real-time information.
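The idea of storing "new memories as they're made" maps naturally to upserts: as a pipeline delivers new or updated embeddings, each one is written to the store and becomes searchable immediately. This in-memory sketch simulates the pipeline with a plain list of events; `InMemoryVectorStore` and `consume` are hypothetical names, not Astra DB or Astra Streaming APIs.

```python
import math
from typing import Iterable


class InMemoryVectorStore:
    """Hypothetical minimal store: one vector per id, brute-force nearest lookup."""

    def __init__(self) -> None:
        self._vectors: dict[str, list[float]] = {}

    def upsert(self, item_id: str, vector: list[float]) -> None:
        # Insert a new id or overwrite the existing vector for that id.
        self._vectors[item_id] = vector

    def nearest(self, query: list[float]) -> str:
        return min(self._vectors, key=lambda item_id: math.dist(self._vectors[item_id], query))


def consume(stream: Iterable[tuple[str, list[float]]], store: InMemoryVectorStore) -> None:
    """Apply each (id, embedding) event from the pipeline to the store as it arrives."""
    for item_id, vector in stream:
        store.upsert(item_id, vector)


events = [
    ("event-1", [0.0, 0.0]),
    ("event-2", [5.0, 5.0]),
    ("event-1", [9.0, 9.0]),  # a later update to event-1's embedding
]

store = InMemoryVectorStore()
consume(events[:2], store)
print(store.nearest([0.5, 0.5]))  # event-1, at its original position
consume(events[2:], store)
print(store.nearest([0.5, 0.5]))  # event-2, because event-1 has moved
```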

Whether you're building a recommendation system, an image processing system, or anomaly detection, you need a highly efficient, optimized vector database like Astra DB at its core. Astra DB is designed to power the cognitive processes of AI and can ingest streaming data through pipelines from multiple sources, such as Astra Streaming. It uses that data to grow and learn, providing faster, more efficient results over time.

With the rapid growth and acceleration of generative AI across all industries, we need a purpose-built way to store the massive amount of data used to drive contextual decision-making.

Vector databases have been purpose-built for this task, providing a specialized solution for managing vector embeddings for AI usage. This is the source of a vector database's true power: contextualizing data, both at rest and in motion, to provide the core memory recall for AI processing.

While this may sound complex, vector search on Astra DB takes care of all of this for you with a fully integrated solution that provides all of the pieces you need. It provides a nervous system for retrieval, access, and processing of vector embeddings in an easy-to-use cloud platform.


Vector databases facilitate real-time access to vector embeddings, enabling efficient data comparison and retrieval for generative AI models. By organizing vectors in a way that facilitates fast retrieval of similar vectors, they support AI in generating insights and predictions.

They provide optimized storage and retrieval of vector embeddings, boosting AI functionalities like recommendation systems and anomaly detection. For instance, they can power recommendation engines that suggest content based on users' queries and preferences.

Unlike traditional databases, vector databases store data in a mathematical representation called vectors, enabling approximate nearest neighbor (ANN) search for similar results. This feature enhances search and matching operations, making them more suited for AI applications.

Some of the main advantages are that they enable fast storage, retrieval, and nearest neighbor search semantics, which is especially beneficial for generative AI applications. Their structure allows for a broader scope in result retrieval compared to exact match queries in traditional databases.

They use specialized structures like k-d trees, k-nearest neighbor graphs, or locality-sensitive hashing for efficient similarity search.
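To illustrate the first structure in that list, here is a minimal k-d tree with exact nearest-neighbor search. It splits points on one coordinate per level and skips any subtree that cannot contain a closer point. This is a teaching sketch with hypothetical names: k-d trees lose most of their pruning power in high dimensions, which is one reason vector databases tend to favor graph-based or hashing approaches for embeddings.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, ...]


@dataclass
class KDNode:
    point: Point
    axis: int
    left: Optional["KDNode"] = None
    right: Optional["KDNode"] = None


def build_kd_tree(points: list[Point], depth: int = 0) -> Optional[KDNode]:
    """Recursively split on the median along one axis per level."""
    if not points:
        return None
    axis = depth % len(points[0])
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return KDNode(
        point=ordered[mid],
        axis=axis,
        left=build_kd_tree(ordered[:mid], depth + 1),
        right=build_kd_tree(ordered[mid + 1:], depth + 1),
    )


def nearest_neighbor(node: Optional[KDNode], query: Point,
                     best: Optional[Point] = None) -> Optional[Point]:
    if node is None:
        return best
    if best is None or math.dist(query, node.point) < math.dist(query, best):
        best = node.point
    diff = query[node.axis] - node.point[node.axis]
    # Descend first into the side of the split that contains the query.
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest_neighbor(near, query, best)
    # The far side can only hold a closer point if the splitting plane is nearer than the best match.
    if abs(diff) < math.dist(query, best):
        best = nearest_neighbor(far, query, best)
    return best


rng = random.Random(7)
points = [tuple(rng.random() for _ in range(3)) for _ in range(200)]
tree = build_kd_tree(points)
print(nearest_neighbor(tree, (0.5, 0.5, 0.5)))
```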