How to Web Scrape on a Schedule with Apache Cassandra, FastAPI, and Python

In this tutorial, you’ll learn how to web scrape on a schedule by integrating the Python web framework FastAPI with DataStax Astra DB.

Recently, I caught up with the Pythonic YouTuber Justin Mitchell from CodingEntrepreneurs. We discussed how today’s apps are tackling global markets and issues. He pointed out that back in 2017, Discord was already storing 120 million messages a day with only four backend engineers.

While scale is certainly not the only consideration for global applications, it’s one of the most difficult issues to tackle. It often implies costly rewrites or re-architectures.

Today, Discord stores billions of messages a day with a minimal team. Justin and I are both curious: what makes it possible? As Discord writes on their blog:

“This is a lot of data that is ever increasing in velocity, size, and must remain available. How do we do it? Cassandra!”

As developers, we know that scalability on the app tier is just as important as the data tier. In the past decade, application frameworks and libraries have massively improved to tackle distributed applications. But what about distributed data?

Apache Cassandra® is an open-source NoSQL database built to handle massive amounts of data. It’s used by global enterprises like Netflix, Apple, and Facebook. But are there use cases for Cassandra beyond messaging and chat? I asked Justin from CodingEntrepreneurs whether he thought web scraping (extracting data from websites) was an interesting use case. He did.

In this video tutorial on the Coding for Entrepreneurs YouTube Channel, Justin shows you how to web scrape on a schedule. He integrates the Python framework called FastAPI with DataStax Astra DB, the serverless, managed Database-as-a-Service built on Cassandra by DataStax.

This tutorial uses the following technologies:

- Astra DB: managed Cassandra database service

- FastAPI: web framework for building REST APIs in Python, based on pydantic

- Python: interpreted programming language

- pydantic: library for data parsing and validation

- Celery: open-source asynchronous task queue based on distributed message passing

- Redis: open-source, in-memory data store used as a message broker

We begin the video tutorial with a quick demo of implementing our own scraping client. Then we look at methods to scrape a dataset from Amazon.com in a Jupyter Notebook.

You’ll see how to grab the raw data, put it in a dictionary, validate it, and store it in a database. Best of all, when the FastAPI application starts, it syncs all of its database tables with Astra DB automatically, so there are no tables to create by hand. This keeps web scraping simple and fast.

What you need to web scrape on a schedule

We’ll use Python to perform the web scraping. Because the scraper runs on a frequent schedule, it collects large amounts of data quickly, which makes it a great fit for Cassandra. We’ll use Astra DB, which gives us a managed Cassandra database with up to 80 gigabytes for free. Start by signing up for an Astra DB account.

If you’re not familiar with Python, we recommend getting some experience by completing the 30 Days of Python programming challenge. If you have basic knowledge of classes, functions, and strings, you’re good to go.

You’ll find the tools you need on the Coding for Entrepreneurs GitHub. Don’t forget to install Python 3.9 and download Visual Studio Code as your code editor. Then we’re ready to set up our environment. Follow our YouTube tutorial for the following steps.

Integrating Python with Cassandra

Once you’ve signed up for your free Astra DB account, create a database for the FastAPI project, choosing a keyspace name, a cloud provider, and the region physically closest to you. While the database is being created, generate an application token so the app can authenticate. We walk through each step in the video tutorial.

When the database is active, we connect to it from Python using the DataStax Python driver for Cassandra (the cassandra-driver package, installed with pip). After following all the steps, you should have a working connection between Python and your Cassandra database.

Creating data with your first Cassandra model

If you’re following along with the video, you should now have a connected session, so you can start storing data in your Cassandra database. Since we’ll be scraping Amazon, we want to store each product’s Amazon Standard Identification Number (ASIN), a unique identifier for each product, along with its title. Keeping a million of these in memory in Python wouldn’t be efficient, but Cassandra handles it easily. The ASIN is the primary key we use to look up products in our database.

Before we store data through the model, we register the session as the default connection. The idea here is to store each product and its details. Because the ASIN is the primary key, saving a product whose ASIN already exists updates that row’s other fields instead of creating a duplicate.

One of my favorite things about Cassandra is how easily you can add a new column to an existing model. There’s no need to write migrations by hand: syncing the model again adds the new column to the existing table. Follow along in the video to add a new column to an existing model.

Tracking a Scrape Event

The next step is to track each actual scrape event. With the create_scrape_entry function, we add new items to our database. The current product model updates rows based on the ASIN you’ve stored: if the ASIN already exists in the database, its other fields are overwritten. To keep every scrape instead, we create a separate table that uses a UUID field as its primary key. Because each scrape event gets a new UUID, every entry is added as a new row rather than overwriting an earlier one.

Using Jupyter with Cassandra Models

When we write everything in the Python shell, it’s easy to forget what we wrote or lose track of context. Jupyter Notebook saves us from exiting the shell and rerunning everything. Even if you haven’t used it before, you’ll find it very user-friendly as long as you’re familiar with Python.

Jupyter allows rapid iteration and testing, which makes it extremely useful for working with our Cassandra models and scraping web pages. Our GitHub repository also shows other ways to work with each model besides Jupyter.

Validating Data and Implementing FastAPI

Validating our data makes the FastAPI application more robust once we start implementing it. pydantic is a natural fit for this, since FastAPI already uses it to validate request and response data. That’s especially helpful if you are converting an existing FastAPI project to use a Cassandra database.

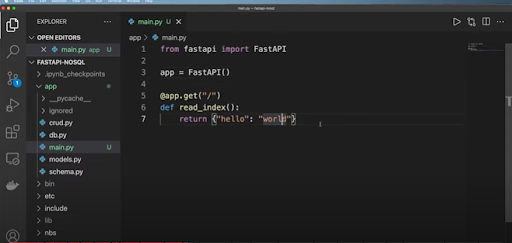

Figure 1. FastAPI application.

If the data is invalid, pydantic raises a validation error, which FastAPI returns to the client automatically, and it discards fields your schema doesn’t declare. You can also change your validation requirements in the pydantic schema without having to update your Cassandra database.
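A small sketch of both behaviors, assuming a hypothetical `ProductSchema` with just an ASIN and a title (the ASIN value is made up). It relies only on pydantic defaults that hold in both v1 and v2: undeclared fields are ignored, and a missing required field raises `ValidationError`.

```python
from typing import Optional

from pydantic import BaseModel, ValidationError


class ProductSchema(BaseModel):
    """Fields we accept for a scraped product (hypothetical schema)."""

    asin: str
    title: Optional[str] = None


# Hypothetical scraped record with an extra key the schema doesn't declare.
raw = {"asin": "B0EXAMPLE1", "title": "Example product", "tracking_id": "abc123"}
product = ProductSchema(**raw)
print(product)  # tracking_id is dropped: unknown fields are ignored by default

try:
    ProductSchema(title="Missing ASIN")
except ValidationError as exc:
    # The error's "loc" points at the missing asin field.
    print(exc.errors()[0]["loc"])
```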

Convert Cassandra UUID to pydantic datetime str

There’s one critical flaw in the event scraping process so far: it doesn’t record the actual time each event occurred. However, a time-based UUID already contains a timestamp, whether it’s the UUID field in our Cassandra model or a TimeUUID column from the Cassandra driver.

So let’s parse that UUID into an actual datetime object. I created a gist specifically for this challenge, which you can find on our GitHub. Copy its function into your schema and use it to add a timestamp field if you need one, following our lead in the video tutorial.
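This is not the gist from the video, just a standalone sketch using only the standard library. A version-1 UUID stores a 60-bit count of 100-nanosecond intervals since 15 October 1582, so converting it means subtracting the offset to the Unix epoch (0x01B21DD213814000, or 122,192,928,000,000,000 intervals) and scaling to microseconds. The Cassandra driver also provides `cassandra.util.datetime_from_uuid1` for the same job.

```python
import uuid
from datetime import datetime, timedelta, timezone

# 100-ns intervals between the UUID epoch (1582-10-15) and the Unix epoch (1970-01-01).
UUID_EPOCH_OFFSET = 0x01B21DD213814000
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def uuid1_to_datetime(value: uuid.UUID) -> datetime:
    """Return the UTC time embedded in a version-1 (time-based) UUID."""
    if value.version != 1:
        raise ValueError("only version-1 UUIDs carry a timestamp")
    # value.time is the 60-bit count of 100-ns intervals since 1582-10-15;
    # dividing by 10 converts 100-ns units to microseconds.
    microseconds = (value.time - UUID_EPOCH_OFFSET) // 10
    return UNIX_EPOCH + timedelta(microseconds=microseconds)
```

In a pydantic schema, the result can be exposed as an ISO 8601 string with `.isoformat()`.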

Now you’re ready to scrape data on your local machine and send it to your FastAPI project, which stores it in Astra DB. At this point, you’ve built the foundation for storing your data. If you want to store more in the future, add extra fields to your model and update your schema to match.

Implementing Celery to Delay and Schedule Tasks

Once you have a foundation, you want to create a worker process that runs on a regular basis. Normally, you call a function whenever you want it to run. But what if you want the function to run later, or on a specific day at a specific time?

You can use Celery both to delay tasks and to schedule them periodically. The tasks can run on a whole cluster of worker machines, so they don’t have to run on your main web application server or on the computer you’re currently using.

Celery communicates through messages passed via a broker such as Redis, an in-memory key-value store. Celery creates and deletes the keys it needs automatically. Find a complete guide on how to set up Redis on our GitHub.

Once you have set up Redis, you can follow the instructions in our video to:

- Configure environment variables

- Implement a Celery application as a worker process to delay tasks

- Integrate the Cassandra driver with Celery

- Run periodic tasks with Celery

Scraping with Selenium

Now you know how to delay and schedule tasks. Let’s get to the web scraping itself, using Selenium and ChromeDriver. We use Selenium specifically to control an actual browser. If Chrome isn’t installed on your system or you run into issues with it, feel free to use Google Colab or another cloud-hosted notebook service.

- Set up Selenium and ChromeDriver

- Execute JavaScript on Selenium to allow endless scrolling

- Scrape events with Selenium

Once you’re done scraping, remember to send the data to your FastAPI endpoint, exposed through ngrok, so the API can ingest it.
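A minimal sketch of the sending side, assuming the `requests` library and an ingestion route at `/events/scrape` (a hypothetical path). When the scraper runs in Google Colab, your `localhost` isn’t reachable, so you would set the hypothetical `API_BASE_URL` variable to the public URL that `ngrok http 8000` prints for your local FastAPI server (8000 being uvicorn’s default port).

```python
import os

import requests

# Hypothetical: the public ngrok URL that forwards to the local FastAPI server.
API_BASE_URL = os.environ.get("API_BASE_URL", "http://localhost:8000")


def send_scrape_event(data: dict, timeout: float = 10.0) -> dict:
    """POST one scraped record to the (hypothetical) /events/scrape endpoint."""
    response = requests.post(f"{API_BASE_URL}/events/scrape", json=data, timeout=timeout)
    response.raise_for_status()  # surfaces 422 validation errors and 5xx responses
    return response.json()
```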

Implement our scrape client parser

It’s time to parse the actual HTML string using requests-html. You could use BeautifulSoup or even the Selenium WebDriver instead, but I found requests-html easier. Next, implement the scrape client parser by adding the parsing logic directly to the client scraper, so one component handles all of the scraping and parsing.

- Parse your data using requests-html

- Set up Scrape Client Parser

Finally, bring the validated data, the Celery worker process, and the scrape client parser together so that everything flows into your Cassandra database and builds up a massive dataset.

Conclusion

In this tutorial, you have learned how to:

- Integrate Astra DB with Python, FastAPI, and Celery

- Set up and configure Astra DB

- Use Jupyter with Cassandra models

- Schedule and offload tasks with Celery

- Web scrape on a schedule

- Use Selenium and requests-html to extract & parse data

If we’re scraping data for thousands of products, our dataset will balloon quickly. Astra DB is built to scale with it, which lets you track price changes, observe trends or historical patterns, and really get into the magic of big data. Plus, it’s easy to implement everything on Astra DB.

To learn more about Cassandra or Astra DB, check out DataStax’s complete course on Cassandra and the tutorials on our YouTube channel. Feel free to join the DataStax community and keep a look out for Cassandra developer workshops near you.

Resources

- Astra DB: Multi-cloud Database-as-a-service Built on Cassandra

- Astra DB Sign Up Link

- What is FastAPI?

- 30-Days of Python

- YouTube Tutorial: Python, NoSQL & FastAPI Tutorial: Web Scraping on a Schedule

- Coding for Entrepreneurs YouTube Channel

- Coding for Entrepreneurs Github

- DataStax Academy: Apache Cassandra Course

- DataStax YouTube Channel

- DataStax Community

- DataStax Cassandra Developer Workshops