Integrating RAG Search into a Notion Clone with Mongoose and Astra DB

Editor’s note: Mongoose is a powerful and flexible ODM (Object-Document Mapping) library originally designed for MongoDB; now it’s compatible with Astra DB. We’re excited to share a second post from Valeri Karpov, the creator of Mongoose, as he demonstrates the power of RAG and Astra DB when building an open-source clone of Notion, a productivity and note-taking app.

In the previous blog post in this series, you read about how to convert notion-clone, a simplified open-source clone of Notion, to store data in Astra DB. One of the major benefits of Astra DB is powerful vector search that fits neatly into Mongoose's query syntax. In this post, you'll learn how to extend notion-clone to use vector search for retrieval-augmented generation (RAG), enabling the LLM to answer questions based on notes you've entered into notion-clone. Here's the full source code on GitHub in case you want to just dive into the code, or try out a live example on Vercel.

Project overview

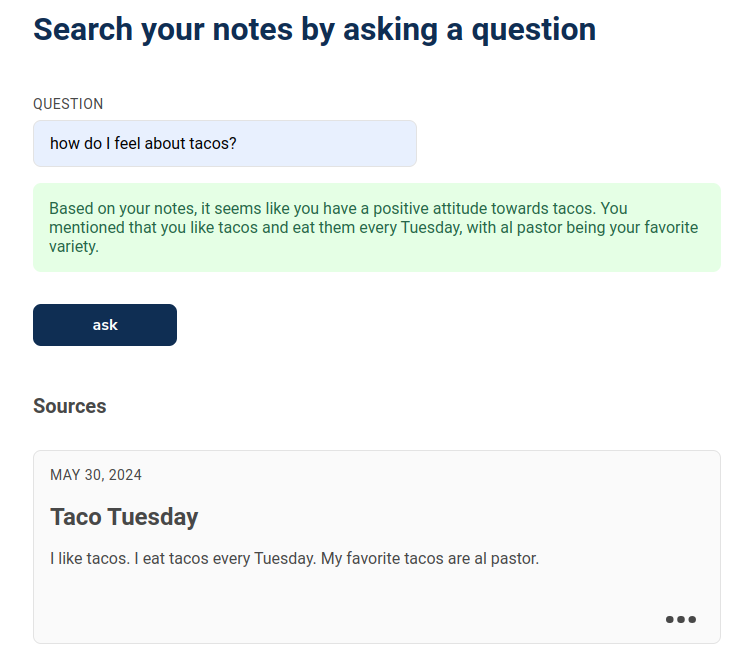

RAG means using a vector database to find content that is relevant to the user's prompt, and supplying that content to the LLM to guide it to a better result. RAG can be especially powerful with a note-taking application, because the user's notes will enable the LLM to answer questions personalized to the user's data. For example, the screenshot above shows what the notion-clone "Ask the AI" page will look like.

The user can ask any question. The backend will then load the notes most relevant to the user's question using vector search, and include those notes in a prompt to OpenAI's ChatGPT API. The frontend will then display ChatGPT's response, along with the notes used to answer the question.
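To make the retrieval step concrete, here is a minimal in-memory sketch of what a vector search does conceptually: score every note by cosine similarity to the question's embedding and keep the top few. The `cosineSimilarity` and `findClosest` helpers and the tiny three-dimensional vectors are illustrative assumptions, not part of notion-clone. Astra DB performs the ranking inside the database, and real embeddings have far more dimensions.

```javascript
// Illustrative only: brute-force nearest-neighbor ranking in memory.
// These helpers are hypothetical; Astra DB does the equivalent work server-side.

// Cosine similarity between two equal-length numeric arrays.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // A zero-length vector has no direction, so treat it as not similar
  if (normA === 0 || normB === 0) {
    return 0;
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the `limit` documents whose `$vector` is most similar to `embedding`.
function findClosest(docs, embedding, limit = 3) {
  return docs
    .filter(doc => Array.isArray(doc.$vector))
    .map(doc => ({ doc, score: cosineSimilarity(doc.$vector, embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, limit)
    .map(entry => entry.doc);
}

// Toy 3-dimensional "embeddings" standing in for real ones
const notes = [
  { title: 'Grocery list', $vector: [1, 0, 0] },
  { title: 'Meeting notes', $vector: [0, 1, 0] },
  { title: 'Recipe ideas', $vector: [0.9, 0.1, 0] },
  { title: 'Empty page' } // no $vector, so it is skipped
];

const closest = findClosest(notes, [1, 0.05, 0], 2);
console.log(closest.map(note => note.title));
```

Sorting by similarity and taking the first few results has the same shape as the `.sort(...).limit(3)` query used later in this post, just executed in application code instead of by the database.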

Getting started

In order to support RAG on notion-clone's Page documents, you'll need to implement two changes to notion-clone's backend:

- You need to add a $vector property on Page documents that contains the embeddings for the page's contents.
- You need to add an /answer-question endpoint that will generate embeddings based on the user's prompt, and search for the most relevant Page documents based on embedding similarity.

The $vector property can be implemented using Mongoose save() middleware: when a new document is created or an existing document is modified, Mongoose will trigger a save() hook that will update the document's $vector property. However, notion-clone stores Page document content as HTML, so to keep the embeddings as accurate as possible, you should first convert the HTML to plain text using the node-html-parser package. You can expose the Page document's text content as a Mongoose virtual named textContent, which is computed from the document's blocks whenever it is accessed.

    const parser = require("node-html-parser");

    pageSchema.virtual('textContent').get(function() {
      let text = "";
      for (let i = 0; i < this.blocks.length; i++) {
        if (this.blocks[i].html) {
          // Page documents store an array of `blocks` that contain HTML. Convert
          // all non-empty blocks to plain text
          const blockText = parser.parse(this.blocks[i].html).textContent;
          if (!blockText) {
            continue;
          }
          text += `${blockText}\n`;
        }
      }
      // Return the concatenated text content from all the `blocks`
      return text;
    });

The Mongoose save() middleware that updates the $vector property based on the textContent property's embedding looks like the following. Every time a document is saved (created or updated), make a request to OpenAI's embeddings API with the document's textContent and save the result in $vector.

    const axios = require("axios");

    pageSchema.pre("save", async function() {
      // Reset the embedding so a page without text content doesn't keep a stale vector
      this.$vector = undefined;
      const text = this.textContent;
      if (text) {
        // Request an embedding for the page's plain text from OpenAI
        const $vector = await axios({
          method: "POST",
          url: "https://api.openai.com/v1/embeddings",
          headers: {
            'Content-Type': "application/json",
            Authorization: `Bearer ${process.env.OPENAI_API_KEY}`
          },
          data: {
            model: "text-embedding-ada-002",
            input: text
          }
        }).then(res => res.data.data[0].embedding);
        this.$vector = $vector;
      }
    });

Integrating vector search

Now that Page documents have a $vector property, you can sort Page documents based on distance from a given vector using Astra DB vector search. Given a user prompt in req.body.question, the following finds the three Page documents that are closest to the given prompt in terms of vector similarity.

    const answerQuestion = async (req) => {
      isAuth(req);
      const userId = req.userId;

      // Get the embedding for the given question. `createEmbedding()` is not
      // shown in this post; it is assumed to call the same embeddings API as
      // the save() middleware above
      const embedding = await createEmbedding(req.body.question);

      // Find the 3 closest pages to `embedding`
      const pages = await Page
        .find({ creator: userId })
        .limit(3)
        .sort({ $vector: { $meta: embedding } });

      // ...
    }

Once you have the three closest pages, you can provide the text content from those pages, along with a prompt, to OpenAI's chat completions API as follows.

    const axios = require("axios");

    const answerQuestion = async (req) => {
      isAuth(req);
      const userId = req.userId;

      // Get the embedding for the given question
      const embedding = await createEmbedding(req.body.question);

      // Find the 3 closest pages to `embedding`
      const pages = await Page
        .find({ creator: userId })
        .limit(3)
        .sort({ $vector: { $meta: embedding } });

      // Construct a prompt based on the user's question and the 3 closest pages
      const prompt = `You are a helpful assistant that summarizes relevant notes to help answer a user's questions.
    Given the following notes, answer the user's question.

    ${pages.map(page => 'Note: ' + page.textContent).join('\n\n')}
    `.trim();

      const answers = await makeChatGPTRequest(prompt, req.body.question);

      // Return OpenAI's answer, and the sources so the user can see where the answer came from
      return {
        sources: pages,
        answer: answers
      };
    };

    async function makeChatGPTRequest(systemPrompt, question) {
      const options = {
        method: "POST",
        url: "https://api.openai.com/v1/chat/completions",
        headers: {
          "Content-Type": "application/json",
          Authorization: `Bearer ${process.env.OPENAI_API_KEY}`
        },
        data: {
          model: "gpt-3.5-turbo-1106",
          messages: [
            { role: "system", content: systemPrompt },
            { role: "user", content: question }
          ]
        }
      };

      return axios(options).then(res => res.data.choices?.[0]?.message?.content ?? 'No answer received');
    }

In the notion-clone AI page implementation, we also added rate limiting support so we could deploy a preview to production with reduced risk of abuse. The rate limiter caps requests to OpenAI at 250 per hour across all users.
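The post does not show the rate limiter itself, so the following is only a rough sketch of one way a global hourly cap could work: a fixed-window counter held in process memory. The `createRateLimiter` name, the injectable `now` clock, and the in-memory storage are all assumptions for illustration. A deployment on serverless infrastructure would likely need to keep the counter in shared storage (for example, the database) instead, because memory is not shared between function instances.

```javascript
// Hypothetical fixed-window rate limiter: at most `maxRequests` per `windowMs`,
// shared across all users. Keeping state in memory is an assumption made for
// brevity; it is not necessarily how notion-clone stores its counters.
function createRateLimiter({ maxRequests = 250, windowMs = 60 * 60 * 1000, now = Date.now } = {}) {
  let windowStart = now();
  let count = 0;

  // Returns true if the request is allowed, false if the cap has been reached
  return function tryAcquire() {
    const currentTime = now();
    // Start a fresh window once the current one has expired
    if (currentTime - windowStart >= windowMs) {
      windowStart = currentTime;
      count = 0;
    }
    if (count >= maxRequests) {
      return false;
    }
    count++;
    return true;
  };
}

// Usage with a fake clock and a tiny limit so the behavior is easy to follow
let fakeTime = 0;
const tryAcquire = createRateLimiter({ maxRequests: 2, windowMs: 1000, now: () => fakeTime });

const results = [];
results.push(tryAcquire()); // first request in the window
results.push(tryAcquire()); // second request in the window
results.push(tryAcquire()); // over the limit for this window
fakeTime = 1000;            // the window has rolled over
results.push(tryAcquire()); // allowed again
console.log(results);
```

With the default options, a check such as `if (!tryAcquire()) { throw new Error('Rate limit exceeded'); }` placed before the OpenAI requests in `answerQuestion` would enforce a cap of 250 requests per hour.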

Frontend layout

The frontend layout is a Next.js form that makes an API request to the /answer-question endpoint using the following function:

    const handleSubmit = async (e) => {
      e.preventDefault();
      setNotice(RESET_NOTICE);

      try {
        // Make an API request to the `/answer-question` endpoint
        const response = await fetch(
          `/api/answer-question`,
          {
            method: "POST",
            headers: {
              "Content-Type": "application/json"
            },
            body: JSON.stringify({
              question: formData.question
            }),
          }
        );
        const data = await response.json();
        if (response.status >= 300) {
          // If error, display the error message
          setNotice({ type: "ERROR", message: data.message });
        } else {
          // If successful, display the result
          setNotice({ type: 'SUCCESS', message: data.answer, sources: data.sources });
        }
      } catch (err) {
        console.log(err);
        setNotice({ type: "ERROR", message: "Something unexpected happened." });
        dispatch({ type: "LOGOUT" });
      }
    };

The frontend layout is represented as an askQuestionPage component. The askQuestionPage component is a controlled form with a "question" input and a submit button. Underneath the form, there's a "notice": that's the green box that contains the result of the form submission. Finally, if there are sources, the askQuestionPage component renders a Card element for each source. The Card element is notion-clone's list-friendly representation of a Page.

    import { useState } from "react";

    // Input, Notice, and Card are notion-clone components; their imports are
    // omitted from this snippet
    const form = {
      id: "question-form",
      inputs: [
        {
          id: "question",
          type: "text",
          label: "Question",
          required: true,
          value: "",
        },
      ],
      submitButton: {
        type: "submit",
        label: "ask",
      },
    };

    const askQuestionPage = () => {
      const RESET_NOTICE = { type: "", message: "" };
      const [notice, setNotice] = useState(RESET_NOTICE);

      const handleInputChange = (id, value) => {
        setFormData({ ...formData, [id]: value });
      };

      // Initialize the form state from the default input values
      const values = {};
      form.inputs.forEach((input) => (values[input.id] = input.value));
      const [formData, setFormData] = useState(values);

      // handleSubmit() from the previous snippet needs access to formData and
      // setNotice, so it belongs inside this component as well; it is omitted here

      return (
        <>
          <h1 className="pageHeading">Search your notes by asking a question</h1>
          <form id={form.id} onSubmit={handleSubmit}>
            {form.inputs.map((input, key) => {
              return (
                <Input
                  key={key}
                  formId={form.id}
                  id={input.id}
                  type={input.type}
                  label={input.label}
                  required={input.required}
                  value={formData[input.id]}
                  setValue={(value) => handleInputChange(input.id, value)}
                />
              );
            })}
            {notice.message && (
              <Notice status={notice.type} mini>
                {notice.message}
              </Notice>
            )}
            <button type={form.submitButton.type}>{form.submitButton.label}</button>
          </form>
          <div>
            {notice.sources && (<h3>Sources</h3>)}
            {notice.sources && notice.sources.map((page, key) => {
              const updatedAtDate = new Date(Date.parse(page.updatedAt));
              const pageId = page._id;
              const blocks = page.blocks;
              // deleteCard is not defined in this snippet
              return (
                <Card
                  key={key}
                  pageId={pageId}
                  date={updatedAtDate}
                  content={blocks}
                  deleteCard={(pageId) => deleteCard(pageId)}
                />
              );
            })}
          </div>
        </>
      );
    };

Moving on

RAG is a powerful tool for summarizing arbitrary content. Normally, LLMs are limited to the information they were trained on. By retrieving a user's notes and supplying them as context, you can build a tool that answers the user's questions based on their own personalized information.

The combination of Astra DB and Mongoose provides a neat, simple vector search integration as part of a sophisticated database framework that includes data validation and middleware. Mongoose middleware, in particular, makes it much easier to plug vector search into an existing codebase. Whether you're just learning about vector search or already making use of vector search in production, you should try Mongoose with Astra DB.

This post originally appeared here.