Data preparation is one of the most notorious time sinks for any developer building a retrieval-augmented generation (RAG) or generative AI app. Why? Enterprise data comes in a huge variety of hard-to-process text, image, and document formats, including HTML, PDF, CSV, PNG, PPTX, and more.

Fortunately for developers, there’s Unstructured.io, a no-code platform and cloud service that not only helps you convert just about any document, file type, or layout into LLM-ready data, but also stands up your GenAI data pipeline, from transformation and cleaning to generating embeddings for a vector database. This is why we are super excited about our new integration between Unstructured.io and DataStax Astra DB: it enables you to quickly convert the most common document types into vector data for highly relevant GenAI similarity searches.

Here, we’ll demonstrate Unstructured.io’s capabilities for processing unstructured data. With a few lines of Python, we’ll build a simple but elegant RAG pipeline, powered by the Astra DB integration, that ingests a variety of data formats and feeds an LLM-based query engine, which retrieves the parsed data to provide insights to users.

To do this, we’ll use Unstructured.io’s Astra DB integration, which makes Astra DB available as a vector store destination.

Getting started

Unstructured provides a simple but incredibly powerful developer function, partition(), which accepts over a dozen document types as input, including PDFs, HTML, CSVs, and more, and returns a list of document elements (titles, narrative text, list items, tables, and so on) whose text is ready for indexing into Astra DB and other vector stores.
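Conceptually, partition() works out what kind of file it was given and hands it to a format-specific partitioner such as partition_html or partition_pdf. The toy sketch below illustrates only that routing step, under stated assumptions: the route function and its extension table are hypothetical, and unlike the real library it ignores file contents and judges the type by extension alone.

```python
from pathlib import Path

# Hypothetical, simplified routing table: file extension -> name of the
# format-specific partitioner that would handle it. The real partition()
# also inspects file contents; this sketch only looks at the suffix.
PARTITIONERS = {
    ".html": "partition_html",
    ".htm": "partition_html",
    ".pdf": "partition_pdf",
    ".csv": "partition_csv",
    ".pptx": "partition_pptx",
    ".docx": "partition_docx",
    ".png": "partition_image",
    ".jpg": "partition_image",
    ".txt": "partition_text",
}


def route(filename: str) -> str:
    """Return the name of the partitioner for a file, judged by extension only."""
    suffix = Path(filename).suffix.lower()
    if suffix not in PARTITIONERS:
        raise ValueError(f"unsupported file type: {suffix or '(no extension)'}")
    return PARTITIONERS[suffix]


if __name__ == "__main__":
    for name in ["pricing.HTML", "deck.pptx", "scan.png"]:
        print(f"{name} -> {route(name)}")
```

Keeping the dispatch in one table is what lets a single entry point accept many formats: supporting a new format means adding one mapping rather than changing callers.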

Only a file path is required; Unstructured.io handles the rest. The Python library has a number of optional dependencies for parsing the various formats, but if you want it to handle everything, simply run:

pip install "unstructured[all-docs]"

We can now parse documents with Unstructured. Next, we need support for the Astra DB destination connector, which requires installing the appropriate extra. A destination connector writes the embeddings and associated data parsed from the input documents to a vector store or some other persistent or in-memory store. Astra DB, a low-latency, high-performance vector database built on Apache Cassandra, is a natural choice. We install it, along with the Hugging Face embeddings integration for LlamaIndex that we’ll use later, with:

pip install "unstructured[astra]"

pip install llama-index-embeddings-huggingface
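A destination connector’s job is to take processed records (chunk text plus embedding) and write them to the store in batches; the pipeline later in this post configures this with AstraWriteConfig(batch_size=20). As an illustration only, here is a hypothetical in-memory destination that buffers records and flushes them in fixed-size batches. The InMemoryDestination class and its record layout (a content field, the embedding under Astra DB’s reserved $vector key, and a metadata dict) are assumptions for this sketch, not Unstructured’s actual schema.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class InMemoryDestination:
    """Hypothetical stand-in for a destination connector.

    Records are buffered and written ("flushed") in batches of batch_size,
    mirroring the idea behind AstraWriteConfig(batch_size=20).
    """

    batch_size: int = 20
    stored: List[Dict[str, Any]] = field(default_factory=list)
    batches_written: int = 0
    _buffer: List[Dict[str, Any]] = field(default_factory=list, init=False, repr=False)

    def __post_init__(self) -> None:
        if self.batch_size < 1:
            raise ValueError("batch_size must be at least 1")

    def write(self, text: str, vector: List[float], metadata: Dict[str, Any]) -> None:
        # Assumed record layout: Astra DB keeps a document's embedding under "$vector".
        self._buffer.append({"content": text, "$vector": vector, "metadata": metadata})
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # A real connector would send one bulk insert request per batch here.
        if self._buffer:
            self.stored.extend(self._buffer)
            self._buffer.clear()
            self.batches_written += 1


if __name__ == "__main__":
    dest = InMemoryDestination(batch_size=20)
    for i in range(45):
        dest.write(f"chunk {i}", [0.0] * 384, {"source": "pricing page"})
    dest.flush()  # write the final, partial batch
    print(dest.batches_written, len(dest.stored))
```

Batching trades a little latency for far fewer round trips: 45 records become three writes instead of 45, and the explicit final flush() ensures the last partial batch is not lost.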

Now we're ready to set up our RAG pipeline using Unstructured.io and Astra DB.

Document parsing

As mentioned previously, Unstructured supports over a dozen document formats, from PDFs to images to CSV files. Our first step is to take a file (or a set of files) and parse it into text. Let’s use the Astra DB pricing page as an example:

from unstructured.partition.html import partition_html

url = "https://www.datastax.com/pricing/astra-db"

elements = partition_html(url=url)

print("\n\n".join([str(el) for el in elements]))

By simply providing the web URL, we can parse the HTML page into text suitable for storing in Astra DB. Many other document formats are supported, but for the sake of illustration, we’ll stick with this one. Next, we use the Astra DB integration to index our documents, generate embeddings, and enable RAG-style queries.
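Note that partition_html returns a list of element objects (for example Title, NarrativeText, and ListItem), not a single string, which is why the snippet joins str(el) for each element. To make that concrete without network access, here is a deliberately simplified, stdlib-only stand-in built on Python’s html.parser. The toy_partition_html function and its tag-to-category table are hypothetical; Unstructured’s real element classification is far more involved.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

# Hypothetical tag -> element category table (a tiny subset, for illustration).
CATEGORY_BY_TAG = {
    "h1": "Title",
    "h2": "Title",
    "h3": "Title",
    "p": "NarrativeText",
    "li": "ListItem",
}
SKIPPED_TAGS = {"script", "style"}


class ToyHTMLPartitioner(HTMLParser):
    """Collect (category, text) pairs from a few block-level HTML tags."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Tuple[str, str]] = []
        self._category: Optional[str] = None
        self._parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED_TAGS:
            self._skip_depth += 1
        elif tag in CATEGORY_BY_TAG:
            # Start a new element; any unfinished outer element is discarded.
            self._category = CATEGORY_BY_TAG[tag]
            self._parts = []

    def handle_endtag(self, tag):
        if tag in SKIPPED_TAGS:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in CATEGORY_BY_TAG and self._category is not None:
            text = " ".join("".join(self._parts).split())  # collapse whitespace
            if text:
                self.elements.append((self._category, text))
            self._category = None

    def handle_data(self, data):
        # Keep text only while inside a tracked tag and outside script/style.
        if self._category is not None and self._skip_depth == 0:
            self._parts.append(data)


def toy_partition_html(html: str) -> List[Tuple[str, str]]:
    parser = ToyHTMLPartitioner()
    parser.feed(html)
    parser.close()
    return parser.elements


if __name__ == "__main__":
    sample = (
        "<h1>Example Page</h1>"
        "<p>Some <b>bold</b>\n intro text.</p>"
        "<ul><li>First item</li><li>Second item</li></ul>"
        "<script>var ignored = 1;</script>"
    )
    for category, text in toy_partition_html(sample):
        print(f"{category}: {text}")
```

Keeping a category alongside each piece of text is what lets later steps treat titles, body text, and list items differently, for example when deciding where chunks should break.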

Astra DB integration

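Using Unstructured’s parsing, we can build an end-to-end pipeline that goes directly from a web URL (or a set of web URLs) to an Astra DB collection suitable for RAG applications. The script reads the Astra DB API endpoint, application token, collection name, and embedding dimension from environment variables, loaded from a .env file with python-dotenv. Take a look!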

import os

from dotenv import load_dotenv  # provided by the python-dotenv package
from unstructured.ingest.connector.astra import (
    AstraAccessConfig,
    AstraWriteConfig,
    SimpleAstraConfig,
)
from unstructured.ingest.connector.local import SimpleLocalConfig
from unstructured.ingest.interfaces import (
    ChunkingConfig,
    EmbeddingConfig,
    PartitionConfig,
    ProcessorConfig,
    ReadConfig,
)
from unstructured.ingest.runner import LocalRunner
from unstructured.ingest.runner.writers.astra import AstraWriter
from unstructured.ingest.runner.writers.base_writer import Writer
from unstructured.partition.html import partition_html

# Load ASTRA_DB_API_ENDPOINT, ASTRA_DB_APPLICATION_TOKEN, etc. from .env
load_dotenv()

url = "https://www.datastax.com/pricing/astra-db"
elements = partition_html(url=url)

# Write each element's text to its own local .txt file for the ingest runner
os.makedirs("local-input-to-astra", exist_ok=True)
for elem in elements:
    with open(f"local-input-to-astra/{elem.id}.txt", "w", encoding="utf-8") as f:
        f.write(elem.text)


def get_writer() -> Writer:
    # Destination connector: writes embedded chunks to an Astra DB collection
    return AstraWriter(
        connector_config=SimpleAstraConfig(
            access_config=AstraAccessConfig(
                api_endpoint=os.getenv("ASTRA_DB_API_ENDPOINT"),
                token=os.getenv("ASTRA_DB_APPLICATION_TOKEN"),
            ),
            collection_name=os.getenv("ASTRA_DB_COLLECTION_NAME", "unstructured"),
            # Environment variables are strings, so convert to int; the value
            # must match the embedding model (384 for bge-small-en-v1.5)
            embedding_dimension=int(os.getenv("ASTRA_DB_EMBEDDING_DIMENSION", "384")),
        ),
        write_config=AstraWriteConfig(batch_size=20),
    )


writer = get_writer()

runner = LocalRunner(
    processor_config=ProcessorConfig(
        verbose=True,
        output_dir="local-output-to-astra",
        num_processes=2,
    ),
    connector_config=SimpleLocalConfig(
        input_path="local-input-to-astra",
    ),
    read_config=ReadConfig(),
    partition_config=PartitionConfig(),
    chunking_config=ChunkingConfig(chunk_elements=True),
    embedding_config=EmbeddingConfig(
        provider="langchain-huggingface",
        # Use the same model as the query side below, so document and
        # query vectors live in the same embedding space
        model_name="BAAI/bge-small-en-v1.5",
    ),
    writer=writer,
    writer_kwargs={},
)

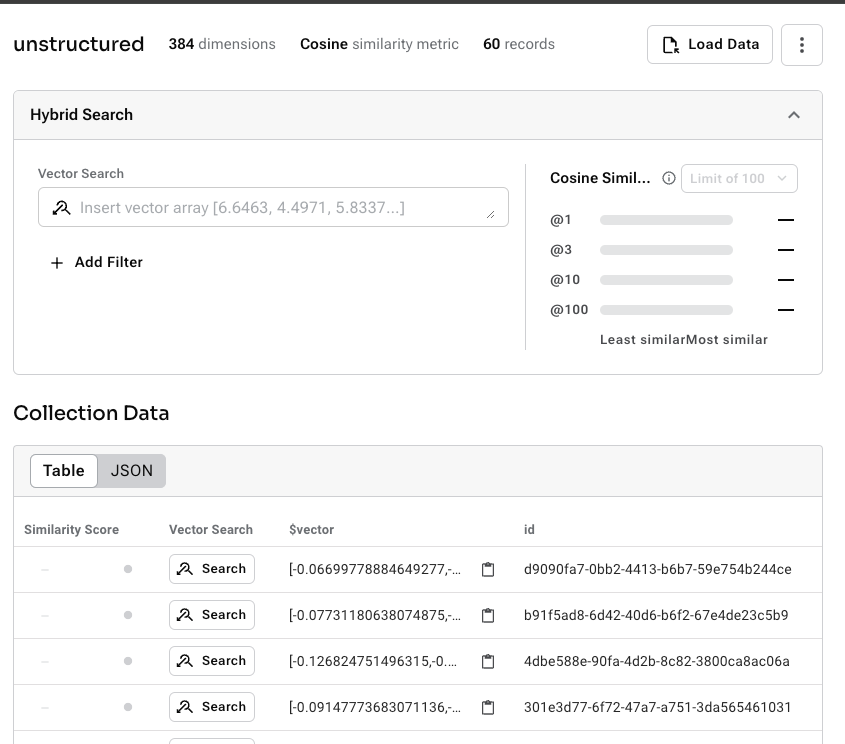

runner.run()

The HTML document is parsed into a set of chunks, each of which is embedded and stored in Astra DB. This all happens automatically, but every step (partitioning, chunking, embedding, and writing) is configurable through the options passed to LocalRunner in the code above. We now have an Astra DB collection, with embeddings generated, ready for a simple RAG pipeline.
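Setting ChunkingConfig(chunk_elements=True) turns on chunking of the partitioned elements before they are embedded. A common approach, sketched here under our own assumptions, is greedy packing: combine consecutive element texts into chunks up to a character limit, and hard-split any single element that exceeds the limit. The pack_chunks function and its 500-character default are illustrative choices, not Unstructured’s implementation, which offers additional strategies and options.

```python
from typing import List


def pack_chunks(texts: List[str], max_characters: int = 500, separator: str = "\n\n") -> List[str]:
    """Greedily combine consecutive texts into chunks of at most max_characters.

    Any single text longer than the limit is hard-split into limit-sized pieces.
    """
    if max_characters <= 0:
        raise ValueError("max_characters must be positive")
    chunks: List[str] = []
    current = ""
    for text in texts:
        pieces = [text[i:i + max_characters] for i in range(0, len(text), max_characters)]
        for piece in pieces:
            candidate = f"{current}{separator}{piece}" if current else piece
            if len(candidate) <= max_characters:
                current = candidate  # still fits: keep growing this chunk
            else:
                chunks.append(current)  # full: close it and start a new one
                current = piece
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    elements = ["a" * 300, "b" * 150, "c" * 100, "d" * 1200]
    print([len(chunk) for chunk in pack_chunks(elements)])  # [452, 100, 500, 500, 200]
```

Packing small neighbors together gives each embedding more context, while the hard limit keeps every chunk within what the embedding model and the LLM prompt can comfortably handle.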

Querying the data store

We can now use any toolkit we like to query this vector store. In this example, we’ll use LlamaIndex to connect to the newly created store and, with OpenAI’s GPT-4 model (which requires an OPENAI_API_KEY environment variable), ask some questions about the page we indexed.
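Under the hood, a vector query embeds the question, scores it against the stored chunk embeddings, and keeps the best few matches; Astra DB collections use cosine similarity by default. Here is a brute-force, stdlib-only sketch of that ranking step, with made-up three-dimensional vectors standing in for real 384-dimensional embeddings. The cosine and top_k helpers are our own illustration; Astra DB performs this search server-side with an index rather than a linear scan.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError(f"dimension mismatch: {len(a)} vs {len(b)}")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query: Sequence[float], store: List[Tuple[str, Sequence[float]]], k: int = 2
) -> List[Tuple[float, str]]:
    """Brute-force search: score every stored (text, vector) pair, keep the best k."""
    scored = [(cosine(query, vector), text) for text, vector in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    store = [
        ("free tier details", [0.9, 0.1, 0.0]),
        ("enterprise support", [0.0, 0.2, 0.9]),
        ("pay-as-you-go pricing", [0.7, 0.6, 0.1]),
    ]
    for score, text in top_k([1.0, 0.2, 0.0], store, k=2):
        print(f"{score:.3f}  {text}")
```

The explicit dimension check mirrors why embedding_dimension must match the embedding model: vectors of different lengths cannot be compared at all.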

import os

from dotenv import load_dotenv  # provided by the python-dotenv package
from llama_index.core import VectorStoreIndex
from llama_index.embeddings.huggingface import HuggingFaceEmbedding
from llama_index.llms.openai import OpenAI
from llama_index.vector_stores.astra import AstraDBVectorStore

load_dotenv()

# Connect to the collection populated by the Unstructured pipeline
astra_db_store = AstraDBVectorStore(
    token=os.getenv("ASTRA_DB_APPLICATION_TOKEN"),
    api_endpoint=os.getenv("ASTRA_DB_API_ENDPOINT"),
    collection_name=os.getenv("ASTRA_DB_COLLECTION_NAME", "unstructured"),
    embedding_dimension=int(os.getenv("ASTRA_DB_EMBEDDING_DIMENSION", "384")),
)

# Queries must be embedded with the same model used at ingestion time
index = VectorStoreIndex.from_vector_store(
    vector_store=astra_db_store,
    embed_model=HuggingFaceEmbedding(model_name="BAAI/bge-small-en-v1.5"),
)

# GPT-4 answers from the retrieved chunks (reads OPENAI_API_KEY from the environment)
query_engine = index.as_query_engine(llm=OpenAI(model="gpt-4"))

response = query_engine.query("how much is the astra db free tier?")

print(response.response)

We get back a response right away; the exact wording can vary from run to run, but it accurately reflects the pricing page:

The Astra DB free tier provides $25 monthly credit for the first three months, allowing users to explore the service without incurring costs during this initial period.
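That answer is grounded because the query engine places the retrieved chunks and the question into a single prompt for the LLM. A minimal sketch of that prompt assembly follows; the template wording, the build_prompt function, and its character budget are illustrative assumptions, not LlamaIndex’s exact default template.

```python
from typing import List

# Illustrative question-answering template (our own wording, an assumption).
QA_TEMPLATE = (
    "Context information is below.\n"
    "---------------------\n"
    "{context}\n"
    "---------------------\n"
    "Using only the context above, answer the question.\n"
    "Question: {query}\n"
    "Answer: "
)


def build_prompt(query: str, contexts: List[str], max_context_chars: int = 4000) -> str:
    """Fill the template with retrieved chunks, most relevant first.

    Chunks are assumed to arrive in rank order; they are added until the next
    one would exceed the character budget, so the least relevant are dropped.
    """
    kept: List[str] = []
    used = 0
    for chunk in contexts:
        cost = len(chunk) + (2 if kept else 0)  # 2 = length of the "\n\n" joiner
        if used + cost > max_context_chars:
            break
        kept.append(chunk)
        used += cost
    return QA_TEMPLATE.format(context="\n\n".join(kept), query=query)


if __name__ == "__main__":
    retrieved = ["First retrieved chunk.", "Second retrieved chunk."]
    print(build_prompt("how much is the astra db free tier?", retrieved))
```

Keeping chunks in rank order means that when the budget runs out, the least relevant context is the first to go.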

Conclusion

In this post, you’ve seen how to use Unstructured.io to take a webpage as input, parse it into chunks with associated embeddings, store that data automatically in Astra DB, and then query it with a framework such as LlamaIndex. There are many more options to explore for configuring everything from document parsing to the embedding model to the chunking strategy. The key takeaway, however, is that by building a pipeline like this, we’ve opened up the RAG and LLM world to a set of documents that are typically challenging to parse. Given the diversity of data that exists, we hope you see the power of Unstructured.io and Astra DB together!