Apache Cassandra Benchmarking: 4.0 Brings the Heat with New Garbage Collectors ZGC and Shenandoah

Apache Cassandra 4.0 beta is the first version that supports JDK 11 and onwards. Latency is an obvious concern for Apache Cassandra® users and big hopes have been put into ZGC, the new low latency garbage collector introduced in JDK 11. It matured further in JDK 14, although it was still flagged as experimental in that release, which made us eager to evaluate how good a fit it would be for Apache Cassandra clusters. We also wanted to compare Apache Cassandra 3.11.6 performance against 4.0 and see if Shenandoah, Red Hat’s garbage collector, should be considered for production use.

In this post, we will see that Cassandra 4.0 brings strong performance improvements on its own which are massively amplified by the availability of new garbage collectors: ZGC and especially Shenandoah.

Benchmarking methodology

The following benchmarks were conducted using tlp-cluster for provisioning and configuring Apache Cassandra clusters in AWS and using tlp-stress for load generation and metrics collection. All the tools used are open source, and the benchmarks are easily reproducible by anyone with an AWS account.

Clusters were composed of 3 nodes using r3.2xlarge instances and a single stress node using a c3.2xlarge instance.

Default settings were used for Apache Cassandra, with the exception of GC and heap.

Cluster provisioning and configuration were done using the latest release of tlp-cluster. We recently added some helper scripts to automate cluster creation as well as the installation of Reaper and Medusa.

After installing and configuring tlp-cluster according to the documentation, you’ll be able to recreate the clusters we used to run the benchmarks:

# 3.11.6 CMS JDK8

build_cluster.sh -n CMS_3-11-6_jdk8 -v 3.11.6 --heap=16 --gc=CMS -s 1 -i r3.2xlarge --jdk=8 --cores=8

# 3.11.6 G1 JDK8

build_cluster.sh -n G1_3-11-6_jdk8 -v 3.11.6 --heap=31 --gc=G1 -s 1 -i r3.2xlarge --jdk=8 --cores=8

# 4.0 CMS JDK11

build_cluster.sh -n CMS_4-0_jdk11 -v 4.0~alpha4 --heap=16 --gc=CMS -s 1 -i r3.2xlarge --jdk=11 --cores=8

# 4.0 G1 JDK14

build_cluster.sh -n G1_4-0_jdk14 -v 4.0~alpha4 --heap=31 --gc=G1 -s 1 -i r3.2xlarge --jdk=14 --cores=8

# 4.0 ZGC JDK11

build_cluster.sh -n ZGC_4-0_jdk11 -v 4.0~alpha4 --heap=31 --gc=ZGC -s 1 -i r3.2xlarge --jdk=11 --cores=8

# 4.0 ZGC JDK14

build_cluster.sh -n ZGC_4-0_jdk14 -v 4.0~alpha4 --heap=31 --gc=ZGC -s 1 -i r3.2xlarge --jdk=14 --cores=8

# 4.0 Shenandoah JDK11

build_cluster.sh -n Shenandoah_4-0_jdk11 -v 4.0~alpha4 --heap=31 --gc=Shenandoah -s 1 -i r3.2xlarge --jdk=11

Note: in order to conduct all benchmarks under similar conditions, a single set of EC2 instances was used throughout the tests.

An upgrade from Cassandra 3.11.6 to Cassandra 4.0~alpha4 was executed and JDKs were switched when appropriate using the following script:

#!/usr/bin/env bash

OLD=$1

NEW=$2

curl -sL https://github.com/shyiko/jabba/raw/master/install.sh | bash

. ~/.jabba/jabba.sh

jabba uninstall $OLD

jabba install $NEW

jabba alias default $NEW

sudo update-alternatives --install /usr/bin/java java ${JAVA_HOME%*/}/bin/java 20000

sudo update-alternatives --install /usr/bin/javac javac ${JAVA_HOME%*/}/bin/javac 20000

The following JDK values were used when invoking jabba:

- openjdk@1.11.0-2

- openjdk@1.14.0

- openjdk-shenandoah@1.8.0

- openjdk-shenandoah@1.11.0

OpenJDK 8 was installed using Ubuntu apt.

Here are the java -version outputs for the different JDKs that were used during the benchmarks:

jdk8

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (build 1.8.0_252-8u252-b09-1~18.04-b09)

OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)

jdk8 with Shenandoah

openjdk version "1.8.0-builds.shipilev.net-openjdk-shenandoah-jdk8-b712-20200629"

OpenJDK Runtime Environment (build 1.8.0-builds.shipilev.net-openjdk-shenandoah-jdk8-b712-20200629-b712)

OpenJDK 64-Bit Server VM (build 25.71-b712, mixed mode)

jdk11

openjdk version "11.0.2" 2019-01-15

OpenJDK Runtime Environment 18.9 (build 11.0.2+9)

OpenJDK 64-Bit Server VM 18.9 (build 11.0.2+9, mixed mode)

jdk11 with Shenandoah

openjdk version "11.0.8-testing" 2020-07-14

OpenJDK Runtime Environment (build 11.0.8-testing+0-builds.shipilev.net-openjdk-shenandoah-jdk11-b277-20200624)

OpenJDK 64-Bit Server VM (build 11.0.8-testing+0-builds.shipilev.net-openjdk-shenandoah-jdk11-b277-20200624, mixed mode)

jdk14

openjdk version "14.0.1" 2020-04-14

OpenJDK Runtime Environment (build 14.0.1+7)

OpenJDK 64-Bit Server VM (build 14.0.1+7, mixed mode, sharing)
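When switching JDKs on the same instances, it can help to confirm which major version is actually active before starting Cassandra. The following is a minimal sketch, not part of the original tooling: `java_major` is a hypothetical helper name, and it only assumes the `java -version` output formats shown above (the legacy `1.8.0_252` scheme and the newer `11.0.2` scheme).

```shell
#!/usr/bin/env bash
# Extract the major Java version from `java -version` output read on stdin.
# Handles both the legacy "1.8.0_252" scheme and the newer "11.0.2" scheme.
java_major() {
  awk -F'"' '/version "/ {
    # $2 is the quoted version string, e.g. 1.8.0_252 or 11.0.8-testing
    split($2, parts, /[._+-]/)
    # Legacy versions start with "1." (1.8.0 -> 8); newer ones do not.
    print (parts[1] == "1" ? parts[2] : parts[1])
    exit
  }'
}

# Example: java -version writes to stderr, hence the redirection.
# java -version 2>&1 | java_major
```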

CMS

CMS (Concurrent Mark Sweep) is the current default garbage collector in Apache Cassandra. It was removed from JDK 14, so all CMS tests were conducted with either JDK 8 or 11.

The following settings were used for CMS benchmarks:

-XX:+UseParNewGC

-XX:+UseConcMarkSweepGC

-XX:+CMSParallelRemarkEnabled

-XX:SurvivorRatio=8

-XX:MaxTenuringThreshold=1

-XX:CMSInitiatingOccupancyFraction=75

-XX:+UseCMSInitiatingOccupancyOnly

-XX:CMSWaitDuration=10000

-XX:+CMSParallelInitialMarkEnabled

-XX:+CMSEdenChunksRecordAlways

-XX:+CMSClassUnloadingEnabled

-XX:ParallelGCThreads=8

-XX:ConcGCThreads=8

-Xms16G

-Xmx16G

-Xmn8G

Note that the -XX:+UseParNewGC flag was removed in JDK 11, where ParNew is used implicitly with CMS. Passing this flag to JDK 11 would prevent the JVM from starting up.
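One way to reuse the same CMS flag list across JDK 8 and JDK 11 is to filter the flag out depending on the target JDK. This is only a sketch under that assumption: `filter_cms_flags` is a hypothetical helper that reads one flag per line on stdin, and it is not part of tlp-cluster or Cassandra.

```shell
#!/usr/bin/env bash
# Print JVM flags from stdin, dropping -XX:+UseParNewGC when the target
# JDK major version (first argument) is 11 or later.
filter_cms_flags() {
  local jdk_major="$1"
  if [ "$jdk_major" -ge 11 ]; then
    # -F: fixed string, -x: whole-line match, -v: keep everything else.
    # grep exits non-zero when nothing is left to print, hence "|| true".
    grep -vxF -e '-XX:+UseParNewGC' || true
  else
    cat
  fi
}

# Example: printf '%s\n' '-XX:+UseParNewGC' '-XX:+UseConcMarkSweepGC' | filter_cms_flags 11
```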

We’ll keep CMS at 16GB of max heap as it could otherwise lead to very long pauses on major collections.

G1

G1GC (Garbage-First Garbage Collector) is easier to configure than CMS as it resizes the young generation dynamically, but it performs best with large heaps (>=24GB). This explains why it hasn’t been promoted to be the default garbage collector. It also shows higher latencies than a tuned CMS, but provides better throughput.

The following settings were used for G1 benchmarks:

-XX:+UseG1GC

-XX:G1RSetUpdatingPauseTimePercent=5

-XX:MaxGCPauseMillis=300

-XX:InitiatingHeapOccupancyPercent=70

-XX:ParallelGCThreads=8

-XX:ConcGCThreads=8

-Xms31G

-Xmx31G

For 4.0 benchmarks, JDK 14 was used when running G1 tests. We’re using 31GB of heap size to benefit from compressed ordinary object pointers (oops) and have the largest number of addressable objects for the smallest heap size.
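Whether compressed oops are actually in effect for a given heap size can be checked from the JVM's final flag values. The sketch below assumes the usual `-XX:+PrintFlagsFinal` output layout (type, flag name, `=` or `:=`, value); `compressed_oops_value` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `java -XX:+PrintFlagsFinal` output on stdin and print the value
# of UseCompressedOops ("true" or "false").
compressed_oops_value() {
  # Field 2 is the flag name, field 3 is "=" or ":=", field 4 is the value.
  awk '$2 == "UseCompressedOops" { print $4; exit }'
}

# Example (requires a JDK on the PATH); compare a heap below and at 32GB:
# java -Xmx31G -XX:+PrintFlagsFinal -version 2>/dev/null | compressed_oops_value
# java -Xmx32G -XX:+PrintFlagsFinal -version 2>/dev/null | compressed_oops_value
```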

ZGC

ZGC (Z Garbage Collector) is the latest GC from the JDK, which focuses on providing low latency with stop-the-world pauses shorter than 10ms. It is also supposed to guarantee that the heap size has no impact on pause times, allowing it to scale up to 16TB of heap. If these expectations are met, it could remove the need to use off-heap storage and simplify some development aspects of Apache Cassandra.

The following settings were used for ZGC benchmarks:

-XX:+UnlockExperimentalVMOptions

-XX:+UseZGC

-XX:ConcGCThreads=8

-XX:ParallelGCThreads=8

-XX:+UseTransparentHugePages

-verbose:gc

-Xms31G

-Xmx31G

We needed to use the -XX:+UseTransparentHugePages flag as a workaround to avoid enabling large pages on Linux. While the official ZGC documentation states it could (possibly) generate latency spikes, the results didn’t show such behavior. It could be worth running the throughput tests with large pages enabled to see how they affect the results.
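For the flag to have any effect, transparent huge pages need to be available at the kernel level. The sketch below reads the standard sysfs file and reports the active mode; the helper names are hypothetical, and treating `always` and `madvise` as the usable modes is our assumption rather than something verified in these benchmarks.

```shell
#!/usr/bin/env bash
# Print the active transparent huge pages mode, i.e. the bracketed entry in
# a line such as "always [madvise] never".
thp_mode() {
  local file="${1:-/sys/kernel/mm/transparent_hugepage/enabled}"
  sed -n 's/.*\[\(.*\)\].*/\1/p' "$file"
}

# Succeed when the kernel mode is one that lets the JVM request huge pages
# (assumption: "always" or "madvise").
thp_usable() {
  case "$(thp_mode "$@")" in
    always|madvise) return 0 ;;
    *) return 1 ;;
  esac
}

# Example: thp_usable && echo "THP available" || echo "THP disabled"
```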

Note that ZGC cannot use compressed oops and is not affected by the “32GB threshold”. We’re using 31GB of heap to use the same sizing as G1 and allow the system to have the same amount of free RAM.

Shenandoah

Shenandoah is a low latency garbage collector developed at Red Hat. It is available as a backport in JDK 8 and 11, and is part of the mainline builds of the OpenJDK starting with Java 12. Like ZGC, Shenandoah is a mostly concurrent garbage collector which aims at making pause times independent of the heap size.

The following settings were used for Shenandoah benchmarks:

-XX:+UnlockExperimentalVMOptions

-XX:+UseShenandoahGC

-XX:ConcGCThreads=8

-XX:ParallelGCThreads=8

-XX:+UseTransparentHugePages

-Xms31G

-Xmx31G

Shenandoah should be able to use compressed oops and thus benefits from using heaps a little below 32GB.

Cassandra 4.0 JVM configuration

Cassandra version 4.0 ships with separate jvm.options files for Java 8 and Java 11. These are the files:

- conf/jvm-server.options

- conf/jvm8-server.options

- conf/jvm11-server.options

Upgrading to version 4.0 will work with an existing jvm.options file from version 3.11, so long as it is renamed to jvm-server.options and the jvm8-server.options and jvm11-server.options files are removed. This is not the recommended approach. The recommended approach is to re-apply the settings found in the previous jvm.options file to the new jvm-server.options and jvm8-server.options files. The Java specific option files are mostly related to the garbage collection flags. Once these two files are updated and in place, it then becomes easier to configure the jvm11-server.options file, and simpler to switch from JDK 8 to JDK 11.
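When re-applying settings by hand, it is easy to lose a flag along the way. The following sketch lists the active flags of an old jvm.options file that do not appear in any of the new files. It is an illustration only: `missing_flags` is a hypothetical helper, it compares lines verbatim, and it treats lines starting with `#` as comments.

```shell
#!/usr/bin/env bash
# List active flags present in the old options file but absent from all of
# the new files. Usage: missing_flags OLD_FILE NEW_FILE [NEW_FILE...]
missing_flags() {
  local old="$1"; shift
  # The new files are read first to collect their flags, then the old file
  # is read last and any flag not seen before is printed.
  awk -v old="$old" '
    /^[ \t]*(#|$)/ { next }                        # skip comments and blank lines
    FILENAME == old { if (!($0 in seen)) print; next }
    { seen[$0] = 1 }
  ' "$@" "$old"
}

# Example:
# missing_flags conf.old/jvm.options conf/jvm-server.options conf/jvm8-server.options
```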

Workloads

Benchmarks were done using 8 threads running with rate limiting and 80% writes/20% reads. tlp-stress uses asynchronous queries extensively, which can easily overwhelm Cassandra nodes with a limited number of stress threads. The load tests were conducted with each thread sending 50 concurrent queries at a time. The keyspace was created with a replication factor of 3 and all queries were executed at consistency level LOCAL_ONE.

All garbage collectors and Cassandra versions were tested with growing rates of 25k, 40k, 45k and 50k operations per second to evaluate their performance under different levels of pressure.

The following tlp-stress command was used:

tlp-stress run BasicTimeSeries -d 30m -p 100M -c 50 --pg sequence -t 8 -r 0.2 --rate <desired rate> --populate 200000

All workloads ran for 30 minutes, loading approximately 5 to 16 GB of data per node and allowing a reasonable compaction load.
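The four load levels can be scripted as a simple loop. The sketch below only prints the commands (a dry run) so they can be reviewed before execution; writing the rates as plain integers is an assumption on our side, and `stress_cmd` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the tlp-stress command used in the benchmarks for a given
# target rate in operations per second.
stress_cmd() {
  local rate="$1"
  echo "tlp-stress run BasicTimeSeries -d 30m -p 100M -c 50 --pg sequence -t 8 -r 0.2 --rate ${rate} --populate 200000"
}

# Rates used in the benchmarks, in operations per second.
for rate in 25000 40000 45000 50000; do
  stress_cmd "$rate"   # dry run: prints the command instead of running it
done
```

Replacing `stress_cmd "$rate"` with `eval "$(stress_cmd "$rate")"` would run each test in sequence, 30 minutes per rate.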

Note: The purpose of this test is not to evaluate the maximum performance of Cassandra, which can be tuned in many ways for various workloads. Neither is it to fine tune the garbage collectors which all expose many knobs to improve their performance for specific workloads. These benchmarks attempt to provide a fair comparison of various garbage collectors using mostly default settings when the same load is generated in Cassandra.

Benchmark results

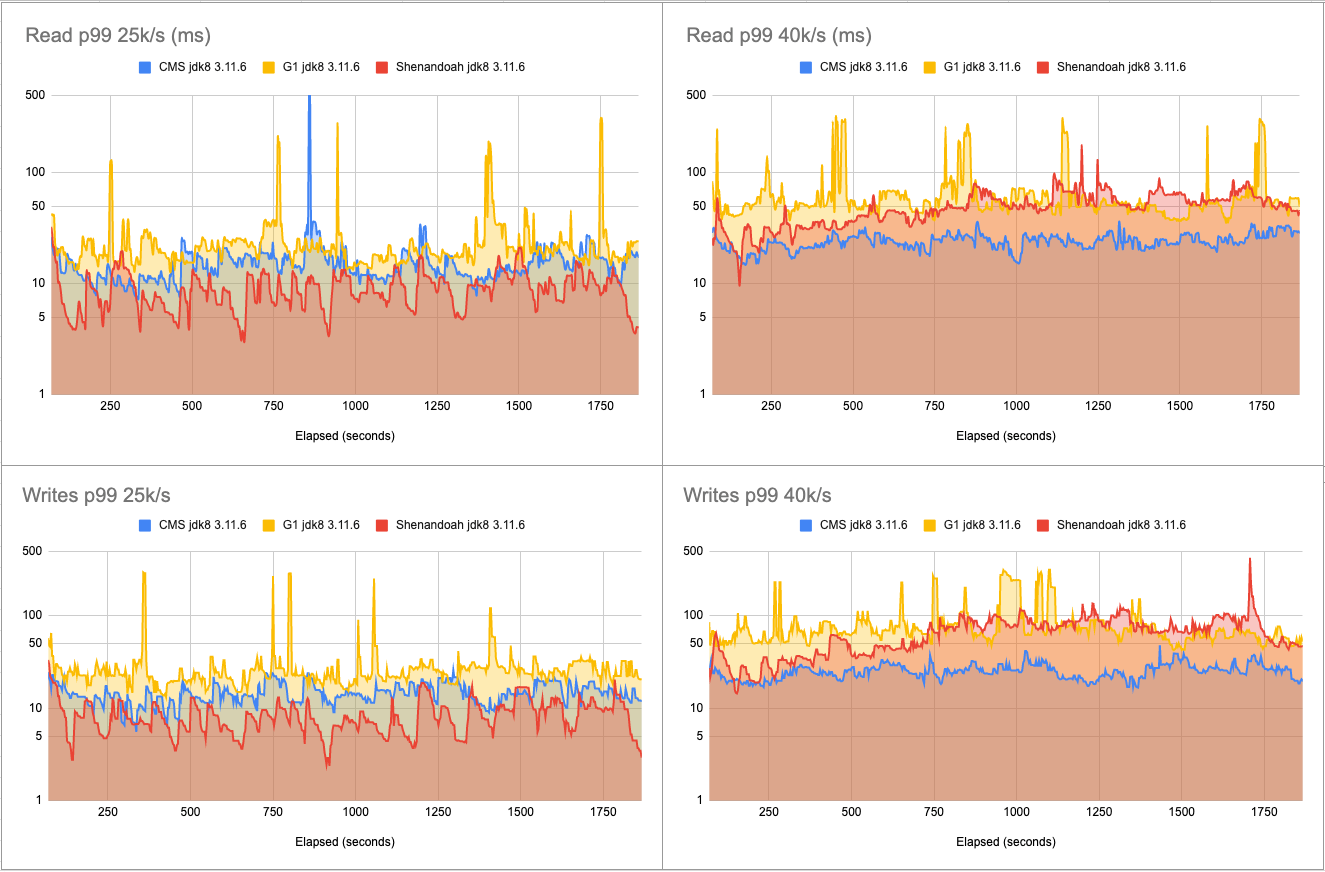

3.11.6 25k-40k ops/s

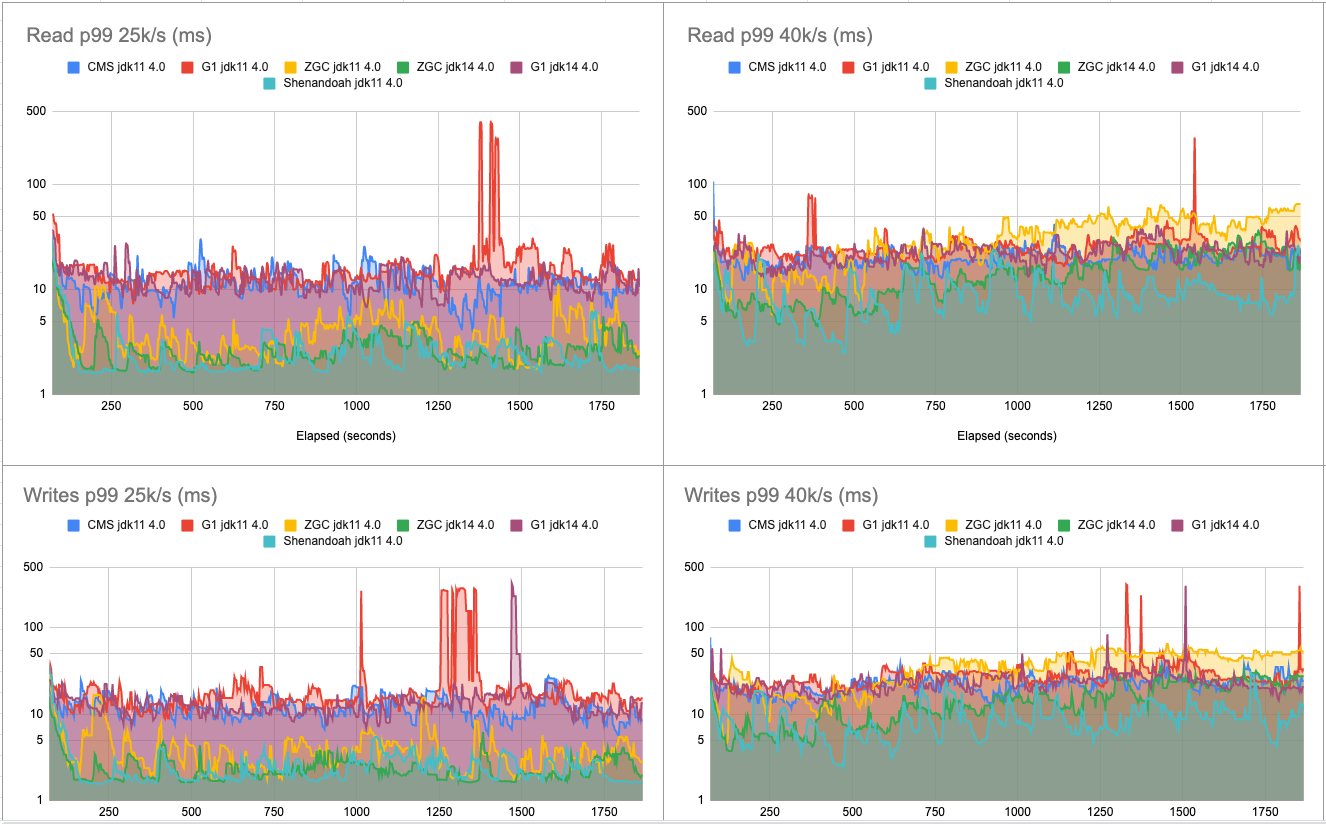

4.0 25k-40k ops/s

4.0 45k-50k ops/s

| Throughput | 25k ops/s | 40k ops/s | 45k ops/s | 50k ops/s | 55k ops/s |
|---|---|---|---|---|---|
| CMS 3.11.6 jdk8 | 24,222.80 | 38,519.94 | 40,722.02 | | |
| G1 C* 3.11.6 jdk8 | 24,222.61 | 38,736.61 | | | |
| Shenandoah C* 3.11.6 jdk8 | 24,211.57 | 35,778.40 | | | |
| ZGC jdk11 | 24,222.72 | 38,674.99 | 41,557.88 | | |
| ZGC jdk14 | 24,222.80 | 38,519.94 | 40,722.02 | | |
| Shenandoah jdk11 | 24,222.61 | 38,736.61 | 43,649.18 | 48,438.28 | 49,689.06 |
| CMS jdk11 | 24,211.57 | 35,778.40 | 43,385.94 | 47,901.46 | 51,512.48 |
| G1 jdk11 | 24,220.08 | 38,730.57 | 43,603.88 | 47,590.90 | |
| G1 jdk14 | 24,211.71 | 38,721.09 | 43,561.66 | 48,030.46 | 50,833.91 |

Throughput-wise, Cassandra 3.11.6 maxed out at 41k ops/s while Cassandra 4.0 went up to 51k ops/s, which is a nice 25% improvement thanks to the upgrade, using CMS in both cases. Numerous performance improvements in 4.0 explain these results, especially the reduced heap pressure caused by compaction (check CASSANDRA-14654 for example).
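The gain can be recomputed from the highest CMS values in the throughput table. This is a small sketch using awk for the floating point arithmetic; `pct_gain` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Percentage gain from a baseline value to a new value, with one decimal.
pct_gain() {
  awk -v base="$1" -v val="$2" 'BEGIN { printf "%.1f\n", (val - base) / base * 100 }'
}

# CMS at its highest measured throughput: 3.11.6 (jdk8) vs 4.0 (jdk11).
pct_gain 40722.02 51512.48   # prints 26.5
```

The unrounded figures give a gain of about 26%, in line with the roughly 25% obtained from the rounded 41k and 51k values.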

Shenandoah in jdk8 on Cassandra 3.11.6 fails to deliver the maximum throughput in the 40k ops/s load test and starts showing failed requests at this rate. It behaves much better with jdk11 and Cassandra 4.0, where it nearly matches the throughput of CMS with a maximum of 49.6k ops/s. Both G1 and Shenandoah with jdk8 maxed out at 36k ops/s overall with Cassandra 3.11.6.

G1 seems to have been improved in jdk14 as well and beats jdk11 with a small improvement from 47k ops/s to 50k ops/s.

ZGC fails to deliver a throughput that matches its contenders in both jdk11 and jdk14, with at most 41k ops/s.

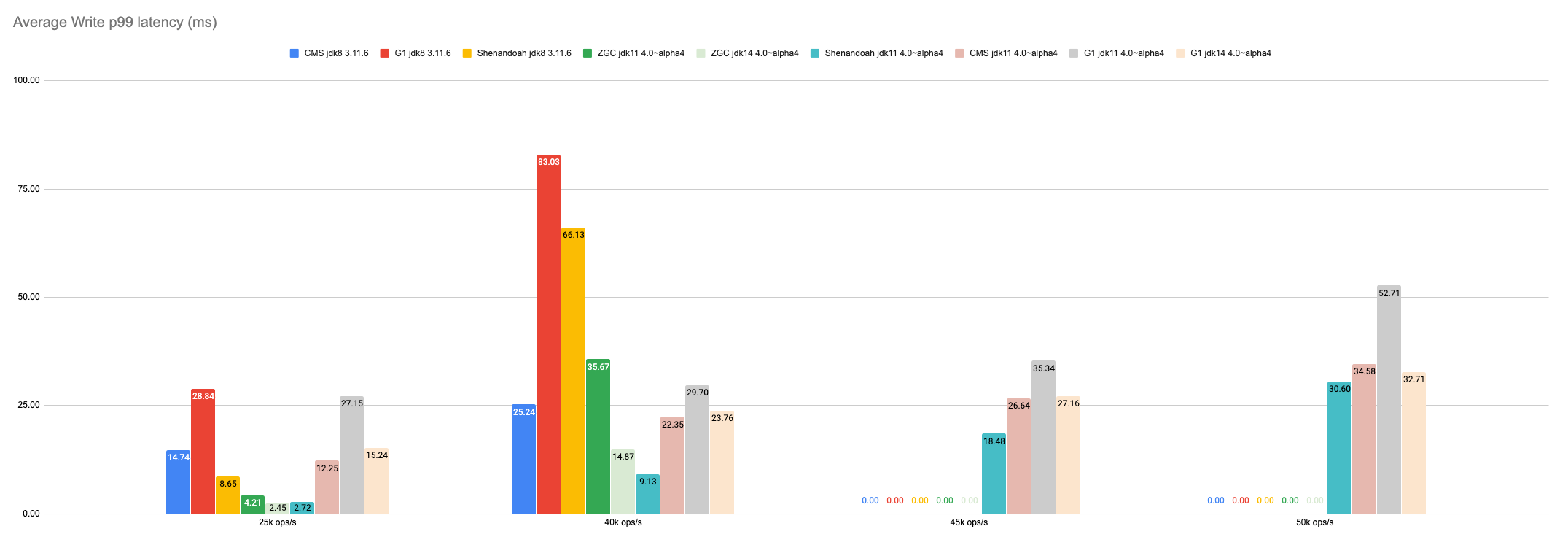

| Writes Average p99 (ms) | 25k ops/s | 40k ops/s | 45k ops/s | 50k ops/s |
|---|---|---|---|---|
| CMS jdk8 3.11.6 | 14.74 | 25.24 | | |
| G1 jdk8 3.11.6 | 28.84 | 83.03 | | |
| Shenandoah jdk8 3.11.6 | 8.65 | 66.13 | | |
| ZGC jdk11 | 4.21 | 35.67 | | |
| ZGC jdk14 | 2.45 | 14.87 | | |
| Shenandoah jdk11 | 2.72 | 9.13 | 18.48 | 30.60 |
| CMS jdk11 | 12.25 | 22.35 | 26.64 | 34.58 |
| G1 jdk11 | 27.15 | 29.70 | 35.34 | 52.71 |
| G1 jdk14 | 15.24 | 23.76 | 27.16 | 32.71 |

| Reads Average p99 (ms) | 25k ops/s | 40k ops/s | 45k ops/s | 50k ops/s |
|---|---|---|---|---|
| CMS jdk8 3.11.6 | 17.01 | 24.18 | | |
| G1 jdk8 3.11.6 | 25.21 | 66.48 | | |
| Shenandoah jdk8 3.11.6 | 9.16 | 49.64 | | |
| ZGC jdk11 | 4.12 | 32.70 | | |
| ZGC jdk14 | 2.81 | 14.83 | | |
| Shenandoah jdk11 | 2.64 | 9.28 | 17.37 | 28.10 |
| CMS jdk11 | 11.54 | 20.15 | 24.87 | 31.53 |
| G1 jdk11 | 19.77 | 26.35 | 48.38 | 42.41 |
| G1 jdk14 | 12.51 | 22.36 | 25.20 | 39.38 |

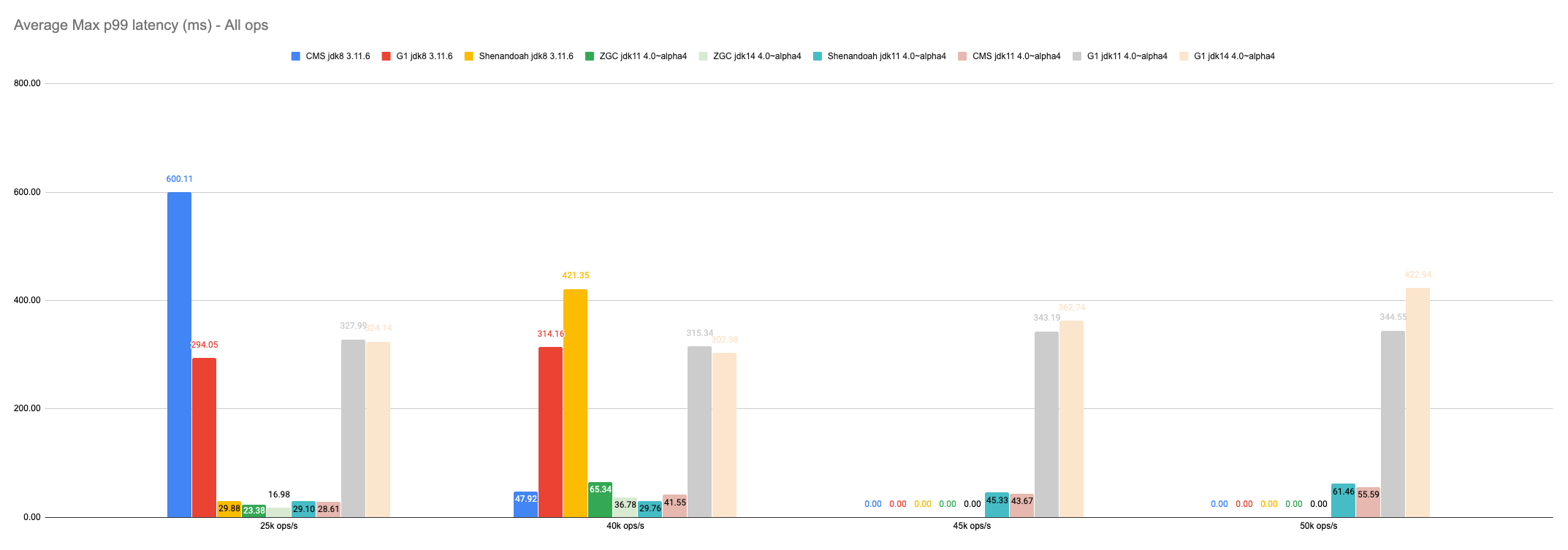

| Max p99 (ms) | 25k ops/s | 40k ops/s | 45k ops/s | 50k ops/s |
|---|---|---|---|---|
| CMS jdk8 3.11.6 | 600.11 | 47.92 | | |
| G1 jdk8 3.11.6 | 294.05 | 314.16 | | |
| Shenandoah jdk8 3.11.6 | 29.88 | 421.35 | | |
| ZGC jdk11 | 23.38 | 65.34 | | |
| ZGC jdk14 | 16.98 | 36.78 | | |
| Shenandoah jdk11 | 29.10 | 29.76 | 45.33 | 61.46 |
| CMS jdk11 | 28.61 | 41.55 | 43.67 | 55.59 |
| G1 jdk11 | 327.99 | 315.34 | 343.19 | 344.55 |
| G1 jdk14 | 324.14 | 302.98 | 362.74 | 422.94 |

Shenandoah in jdk8 delivers some very impressive latencies under moderate load on Cassandra 3.11.6, but performance severely degrades when it gets under pressure. Using CMS, Cassandra 4.0 manages to keep an average p99 between 11ms and 31ms with up to 50k ops/s. The average read p99 under moderate load went down from 17ms in Cassandra 3.11.6 to 11.5ms in Cassandra 4.0, which gives us a 30% improvement.

With 25% to 30% improvements in both throughput and latency, Cassandra 4.0 easily beats Cassandra 3.11.6 using the same garbage collectors. Honorable mention to Shenandoah for the very low latencies under moderate load in Cassandra 3.11.6, but its behavior under pressure makes us worried about its ability to handle spiky loads.

While ZGC delivers some impressive latencies under moderate load, especially with jdk14, it doesn’t keep up at higher rates when compared to Shenandoah. Average p99 latencies for both reads and writes are the lowest for Shenandoah in almost all load tests. These latencies combined with the throughput it can achieve in Cassandra 4.0 make it a very interesting GC to consider when upgrading. An average p99 read latency of 2.64ms under moderate load is pretty impressive, even more so considering that these latencies are recorded by the client.

G1 mostly matches its configured maximum pause time of 300ms when looking at the max p99, but using a lower target pause could have undesirable effects under high load and trigger even longer pauses.

Under moderate load, Shenandoah manages to lower average p99 latencies by 77% compared to CMS on Cassandra 4.0, reaching a low of 2.64ms for reads. This will be a major improvement for latency sensitive use cases. Compared to CMS in Cassandra 3.11.6, it’s a whopping 85% latency reduction for reads at p99! Honorable mention to ZGC in jdk14 which delivers some great performance under moderate load but sadly can’t yet keep up at higher rates. We are optimistic that it will be improved in the coming months and might eventually compete with Shenandoah.
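These reductions follow from the average read p99 values at 25k ops/s in the tables above. As a quick check, here is a sketch of the arithmetic; `pct_reduction` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Percentage reduction from a baseline latency to a new latency, one decimal.
pct_reduction() {
  awk -v base="$1" -v val="$2" 'BEGIN { printf "%.1f\n", (base - val) / base * 100 }'
}

# Shenandoah jdk11 average read p99 (2.64ms) against the two CMS baselines.
pct_reduction 11.54 2.64   # vs CMS on Cassandra 4.0 (jdk11): prints 77.1
pct_reduction 17.01 2.64   # vs CMS on Cassandra 3.11.6 (jdk8): prints 84.5
```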

Final thoughts

G1 brought improvements in Cassandra’s usability by removing the need to fine tune generation sizes at the expense of some performance. The release of Apache Cassandra 4.0, which brings very impressive boosts on its own, will allow using new generation garbage collectors such as Shenandoah or ZGC, which are both simple to implement with minimal tuning, and more efficient in latencies.

Shenandoah is hard to recommend on Cassandra 3.11.6 as nodes start to misbehave at high loads, but starting from jdk11 and Cassandra 4.0, this garbage collector offers stunning improvements in latencies while almost delivering the maximum throughput one can expect from the database.

Your mileage may vary as these benchmarks focused on a specific workload, but the results make us fairly optimistic in the future of Apache Cassandra for latency sensitive use cases, bringing strong improvements over what Cassandra 3.11.6 can currently deliver.

Download the latest Apache Cassandra 4.0 build and give it a try. Let us know if you have any feedback on the community mailing lists or in the ASF Slack.