Can you use LangChain in JavaScript?

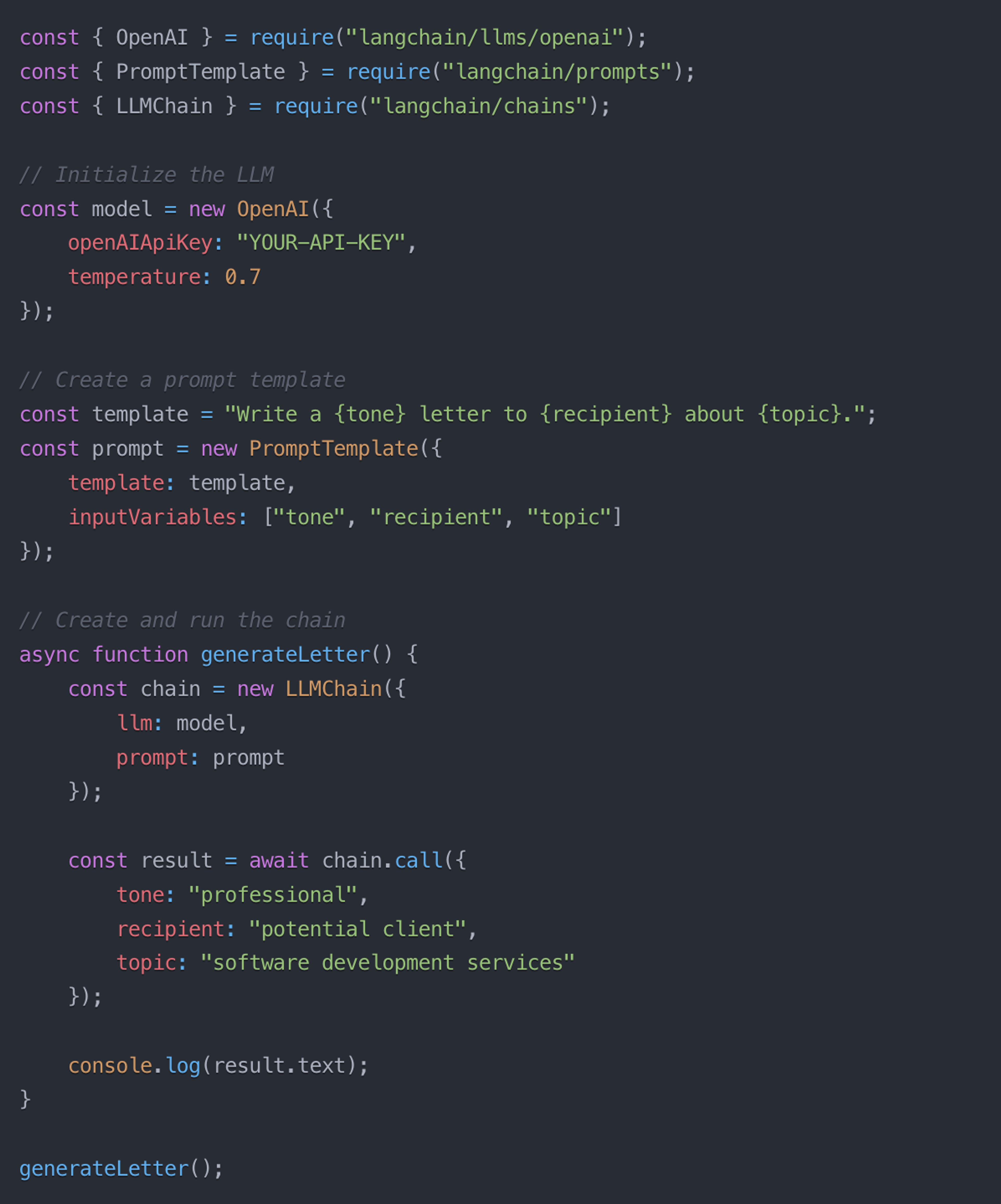

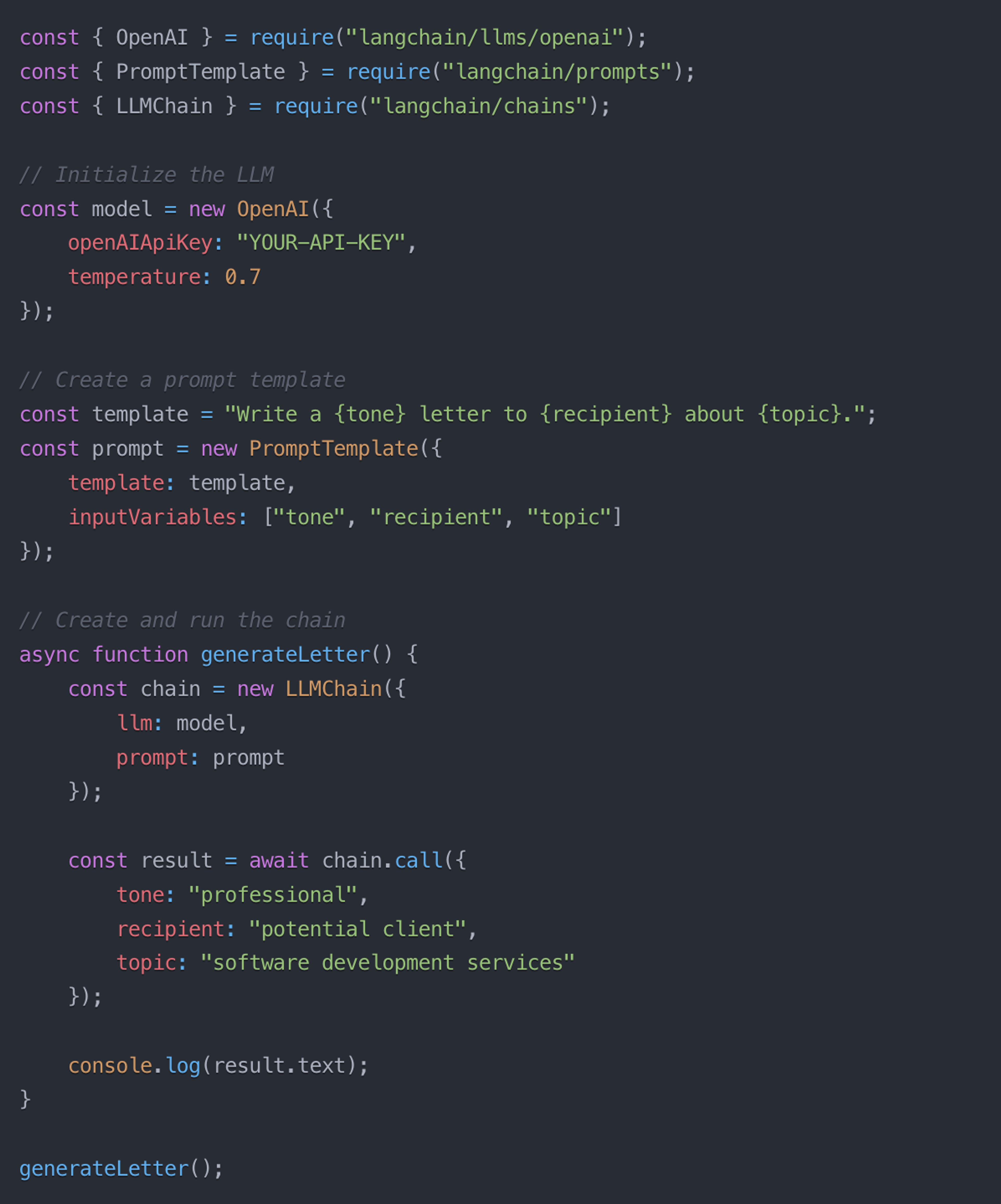

Yes! LangChain has a JavaScript/TypeScript version called LangChain.js that provides similar functionality to the Python version.

ChatGPT caught global attention at the end of 2022. Two years later, this AI-powered chatbot gets 133 million visitors every month. It's no wonder developers are racing to incorporate this transformative technology into their products.

LangChain.js is a simple, modular framework with tools for developing applications powered by large language models. Build conversational AI, automate workflows, plug in context-aware retrieval strategies, and generate content that supports your application's cognitive architecture.

LangChain's open-source framework supports building production-ready AI apps with multiple value-added features for JavaScript-based applications. This reduces the time required to bring your AI apps from vision to prototype to production.

In this article, we’ll look at what LangChain is, the benefits of using LangChain JS, and how you can use it today to create high-performance GenAI apps that weave together large language models (LLMs) with external data sources.

Leveraging an LLM, you can quickly build GenAI applications based on existing foundational models. Combining calls to LLMs with your own data using techniques such as retrieval-augmented generation (RAG) provides the LLM with recent, domain-specific data, resulting in more relevant and accurate output for your users.

However, building reliable AI applications requires more than making a REST API call to an LLM. For example, you may need to interface with different LLMs based on their performance and how accurately they respond to specific queries. Then there’s all the supporting infrastructure your app might need, such as error handling, parallelization, building agents, and logging/monitoring.

LangChain is a framework, available for both Python and JavaScript, for developing applications that incorporate LLMs. The framework consists of various open-source libraries and packages that work together to enhance the application lifecycle for language model-driven tasks. Using LangChain, you can code GenAI apps that leverage LLMs, vector databases, and other critical components without coding out all the little details yourself.

LangChain JS brings the power of the LangChain framework to JavaScript applications. LangChain JS focuses on simplifying GenAI app development in three key areas:

Development. LangChain JS supports a number of out-of-the-box components for assembling GenAI apps quickly. You can add features such as streaming, route execution, message history, text splitting, parsing structured output, and more with less code than you’d use implementing everything on your own. You can also use LangGraph.js to build out stateful agents.

Productionization. LangChain JS supports LangSmith, which provides monitoring capabilities you can use to assess and fine-tune your app’s performance. LangSmith also supports an API you can call from the non-LangChain parts of your application.

Deployment. You can use LangGraph Cloud to turn your LangChain JS app into a callable, production-ready API that’s horizontally scalable and uses durable storage.

Before we dive into getting started with LangChain JS, let’s look at the components that LangChain supports. These components serve as building blocks for constructing your app using a simple declarative language.

Here are a few of the key components in LangChain JS you should understand before using it. For other components, see the LangChain JS conceptual guide.

Most modern LLMs operate as chat completion models: they take a series of messages as input and return a generated chat message as output. Responses come back as messages too, labeled with the role the chat model is playing (typically the assistant) along with the message content.
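To make the messages-in, message-out contract concrete, here is a minimal, dependency-free TypeScript sketch. The `ChatMessage` type, `ChatModel` interface, and `echoModel` stub are hypothetical illustrations, not LangChain's API; LangChain JS represents messages with classes such as `HumanMessage`, `SystemMessage`, and `AIMessage` from `@langchain/core/messages`, and its model `invoke` calls are asynchronous.

```typescript
// Hypothetical message shape: each message has a role and text content.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical chat-model contract: a list of messages in, one message out.
interface ChatModel {
  invoke(messages: ChatMessage[]): ChatMessage;
}

// Stub model for illustration: replies by echoing the most recent user message.
const echoModel: ChatModel = {
  invoke(messages) {
    const lastUser = [...messages].reverse().find((m) => m.role === "user");
    return {
      role: "assistant",
      content: lastUser ? `You said: ${lastUser.content}` : "Hello!",
    };
  },
};

const botReply = echoModel.invoke([
  { role: "system", content: "You are a helpful assistant." },
  { role: "user", content: "Hi! I'm Bob" },
]);
console.log(botReply.role, botReply.content); // assistant You said: Hi! I'm Bob
```

Swapping `echoModel` for a different implementation of the same interface is what lets an application try different models without changing the calling code.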

LangChain JS supports dozens of chat models and LLMs out-of-the-box, making it easy to test different models or leverage different models for different purposes. For example, you may use one model for user-generated chat and another model for creating structured JSON output.

One of LangChain’s more powerful features, agents provide decision-making capabilities, using the output from an LLM to determine the next action in the chain. You can use LangGraph.js to create complex agents and stateful, multi-actor applications with support for cycles, controllability, and persistence.
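The decide-act-observe loop behind an agent can be sketched in plain TypeScript. Here, `decide` is a hypothetical stub standing in for an LLM call, and the `add` tool is invented for illustration; LangChain JS and LangGraph.js provide their own agent abstractions, which this sketch does not reproduce.

```typescript
// Hypothetical tool registry: name -> function from string input to string output.
type Tool = (input: string) => string;

const tools: Record<string, Tool> = {
  add: (input) => {
    const [a, b] = input.split(",").map(Number);
    return String(a + b);
  },
};

// What the "model" decides at each step: call a tool, or finish with an answer.
type Decision =
  | { kind: "tool"; tool: string; input: string }
  | { kind: "final"; answer: string };

// Stub standing in for an LLM call. A real agent would prompt a chat model
// with the question and prior observations, then parse its reply.
function decide(_question: string, observations: string[]): Decision {
  if (observations.length === 0) {
    return { kind: "tool", tool: "add", input: "2,3" };
  }
  return { kind: "final", answer: `The sum is ${observations[observations.length - 1]}.` };
}

// The agent loop: ask for a decision, run the chosen tool, feed the result back.
function runAgent(question: string, maxSteps = 5): string {
  const observations: string[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const decision = decide(question, observations);
    if (decision.kind === "final") return decision.answer;
    const tool = tools[decision.tool];
    if (!tool) throw new Error(`Unknown tool: ${decision.tool}`);
    observations.push(tool(decision.input));
  }
  throw new Error("Agent did not finish within maxSteps");
}

console.log(runAgent("What is 2 + 3?")); // The sum is 5.
```

The `maxSteps` cap matters in practice: because the model chooses the next action, nothing else guarantees the loop terminates.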

Prompt templates take user input and other parameters and insert them into the instructions you send to a language model. Prompt templates can generate multiple message types (for example, system and user messages) as well as plain string prompts.
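The core idea of a prompt template, named placeholders filled in from runtime inputs, fits in a few lines. `formatTemplate` below is a hypothetical helper written for illustration; in LangChain JS you would typically use `ChatPromptTemplate.fromMessages` from `@langchain/core/prompts`, which uses the same `{variable}` placeholder style.

```typescript
// Minimal template formatter: replaces {name} placeholders with values and
// throws if a placeholder has no value. Not LangChain's implementation.
function formatTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (_match, key: string) => {
    const value = values[key];
    if (value === undefined) throw new Error(`Missing value for {${key}}`);
    return value;
  });
}

// A chat prompt as (role, template) pairs, all formatted with the same inputs.
const chatPrompt: Array<[string, string]> = [
  ["system", "You are a helpful assistant that translates {input_language} to {output_language}."],
  ["user", "{text}"],
];

const messages = chatPrompt.map(([role, template]) => ({
  role,
  content: formatTemplate(template, {
    input_language: "English",
    output_language: "French",
    text: "I love programming.",
  }),
}));

console.log(messages);
```

Failing loudly on a missing variable is a deliberate choice: silently sending a literal `{text}` to the model is a hard bug to spot.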

LangChain JS supports the LangChain Expression Language (LCEL), which defines a simple, declarative way to chain LangChain components together into a full, working AI application.

Using LCEL, you can quickly create a prototype app that includes anywhere from a few steps to over 100. You can then ship that prototype to production with few code changes. For example, the following call pipes a parameterized prompt template to an LLM and then pipes the output to a parser that reads and formats the response:

const chain = promptTemplate.pipe(model).pipe(parser);
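To see why `pipe` composes so cleanly, here is a toy, synchronous version of the idea. The `Step` class and the three stand-in steps are hypothetical; real LangChain runnables return promises from `invoke` and add streaming, batching, and tracing on top of this basic composition.

```typescript
// A toy version of the "runnable" idea behind LCEL: every step exposes invoke(),
// and pipe() returns a new step that feeds this step's output into the next one.
class Step<I, O> {
  private readonly fn: (input: I) => O;

  constructor(fn: (input: I) => O) {
    this.fn = fn;
  }

  invoke(input: I): O {
    return this.fn(input);
  }

  pipe<N>(next: Step<O, N>): Step<I, N> {
    return new Step((input: I) => next.invoke(this.invoke(input)));
  }
}

// Hypothetical stand-ins for a prompt template, a model, and an output parser.
const promptTemplate = new Step((vars: { topic: string }) => `Tell me a joke about ${vars.topic}`);
const model = new Step((prompt: string) => ({ content: `  [model reply to: ${prompt}]  ` }));
const parser = new Step((message: { content: string }) => message.content.trim());

const chain = promptTemplate.pipe(model).pipe(parser);
console.log(chain.invoke({ topic: "cats" })); // [model reply to: Tell me a joke about cats]
```

Because `pipe` returns another `Step`, a whole chain can itself be piped into further steps, and the type parameters catch mismatched inputs and outputs at compile time.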

LCEL and chains provide much of the value of LangChain JS. Using LCEL gives you streaming support, parallelization, retry and fallback logic, access to intermediate results, and tracing via LangSmith. That’s functionality you don’t have to develop and test yourself.
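Retry and fallback logic are themselves just wrappers around a step. The sketch below assumes synchronous steps and uses hypothetical helpers `retrying` and `withBackups`; LangChain JS runnables expose comparable behavior through their `withRetry` and `withFallbacks` methods, which is what you would use in a real app.

```typescript
type Fn<I, O> = (input: I) => O;

// Retry a step up to `attempts` times, rethrowing the last error if all fail.
function retrying<I, O>(fn: Fn<I, O>, attempts: number): Fn<I, O> {
  return (input: I): O => {
    let lastError: unknown = new Error("retrying: attempts must be at least 1");
    for (let i = 0; i < attempts; i++) {
      try {
        return fn(input);
      } catch (err) {
        lastError = err;
      }
    }
    throw lastError;
  };
}

// Try the primary step, then each backup in order; return the first success.
function withBackups<I, O>(primary: Fn<I, O>, backups: Fn<I, O>[]): Fn<I, O> {
  return (input: I): O => {
    const errors: unknown[] = [];
    for (const candidate of [primary, ...backups]) {
      try {
        return candidate(input);
      } catch (err) {
        errors.push(err);
      }
    }
    throw new Error(`All ${errors.length} candidates failed`);
  };
}

// Hypothetical models: the primary always fails, the backup succeeds.
let primaryCalls = 0;
const flakyPrimary: Fn<string, string> = () => {
  primaryCalls++;
  throw new Error("rate limited");
};
const backup: Fn<string, string> = (prompt) => `backup answer to "${prompt}"`;

const robustModel = withBackups(retrying(flakyPrimary, 2), [backup]);
console.log(robustModel("Hello"), primaryCalls); // backup answer to "Hello" 2
```

Retries are wrapped inside the fallback so a transient error gets a second chance on the preferred model before the app switches providers.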

You can use tool calling to respond to a given prompt by generating output in conformance with a user-defined schema. LangChain JS supports tool calling for all LLM providers that support it, providing a consistent interface across the models.
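Whatever the provider, tool calling comes down to a schema the model is told about and a structured call it returns, which your code should validate before executing anything. This is a dependency-free sketch; the `ToolSpec` and `ToolCall` shapes and the `get_weather` tool are hypothetical. In LangChain JS you would usually define tools with the `tool` helper from `@langchain/core/tools` (typically with a Zod schema) and attach them to a chat model with `bindTools`.

```typescript
// Hypothetical tool definition: a name, a description for the model,
// and a simple schema mapping argument names to expected types.
interface ToolSpec {
  name: string;
  description: string;
  parameters: Record<string, "string" | "number">;
}

// A tool call as a model might return it: the tool name plus JSON-encoded arguments.
interface ToolCall {
  name: string;
  arguments: string;
}

const getWeather: ToolSpec = {
  name: "get_weather",
  description: "Get the current temperature for a city.",
  parameters: { city: "string", unit: "string" },
};

// Check that a model-produced call matches the schema before running anything.
function validateToolCall(spec: ToolSpec, call: ToolCall): Record<string, unknown> {
  if (call.name !== spec.name) throw new Error(`Unexpected tool: ${call.name}`);
  const args: unknown = JSON.parse(call.arguments);
  if (typeof args !== "object" || args === null || Array.isArray(args)) {
    throw new Error("Arguments must be a JSON object");
  }
  const record = args as Record<string, unknown>;
  for (const [key, type] of Object.entries(spec.parameters)) {
    if (typeof record[key] !== type) {
      throw new Error(`Argument "${key}" must be a ${type}`);
    }
  }
  return record;
}

// Pretend the model answered "What's the weather in Paris?" with this call.
const modelToolCall: ToolCall = {
  name: "get_weather",
  arguments: '{"city": "Paris", "unit": "celsius"}',
};
console.log(JSON.stringify(validateToolCall(getWeather, modelToolCall))); // {"city":"Paris","unit":"celsius"}
```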

For models that don’t support tool calling, LangChain JS provides output parsers that extract structured data from the model’s text response. LangChain includes built-in parsers for common structured text formats, such as JSON, XML, and comma-separated lists.
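An output parser sits after the model and turns free text into data. The hypothetical `parseJsonOutput` below shows the basic idea by extracting the span from the first `{` to the last `}` in a reply; it is a simplification, not LangChain's `JsonOutputParser` (exported from `@langchain/core/output_parsers`), which handles more edge cases.

```typescript
// Minimal JSON output parser: takes the text between the first "{" and the
// last "}" of a model reply and parses it. Breaks if the reply contains
// several separate objects; real parsers are more robust.
function parseJsonOutput<T>(text: string): T {
  const start = text.indexOf("{");
  const end = text.lastIndexOf("}");
  if (start === -1 || end < start) {
    throw new Error("No JSON object found in model output");
  }
  return JSON.parse(text.slice(start, end + 1)) as T;
}

interface Person {
  name: string;
  languages: string[];
}

// A typical chatty reply from a model that was asked to answer in JSON.
const rawReply =
  'Sure! Here is the data: {"name": "Ada", "languages": ["English", "French"]} Let me know if you need more.';

const person = parseJsonOutput<Person>(rawReply);
console.log(person.name, person.languages.length); // Ada 2
```

Note that `as T` only tells the compiler what to expect; pairing a parser like this with a schema check (as in tool-call validation) is what actually guarantees the shape.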

To get started with LangChain JS, install Node.js version 18 or higher, as well as one of the supported package managers - npm, Yarn, or pnpm. Then, install the LangChain JS library:

npm i langchain @langchain/core

(For non-npm examples, see the LangChain JS introduction on their website.)

Next, install the integrations you want to use, such as the packages for the LLM providers your app will call. For example, to install support for OpenAI, run:

npm install @langchain/openai

You can also install both packages simultaneously:

npm install @langchain/openai @langchain/core

Finally, perform any additional setup that your integrations require. A common example is setting an API key for your chosen LLM. For OpenAI, after creating a project and adding an API key, you can use it by setting the OPENAI_API_KEY environment variable:

OPENAI_API_KEY=your-api-key

(Note: It’s generally recommended to set this via code after retrieving it from a secrets vault supported by your cloud provider, such as AWS Secrets Manager, GCP Secret Manager, or Azure Key Vault.)
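Whether the key comes from an environment variable or a secrets vault, it helps to fail fast with a clear error when it is missing. `requireSecret` is a hypothetical helper; `ChatOpenAI` reads `OPENAI_API_KEY` from the environment by default, and recent versions also accept the key directly through an `apiKey` constructor option, which is where a vault-retrieved value would go.

```typescript
// Hypothetical helper: read a required secret from an environment-like map
// and fail fast with a clear message if it is missing or blank.
function requireSecret(env: Record<string, string | undefined>, name: string): string {
  const value = env[name]?.trim();
  if (!value) {
    throw new Error(`${name} is not set; configure it before creating the model`);
  }
  return value;
}

// In Node.js you would pass process.env; a plain object stands in for it here.
const apiKey = requireSecret({ OPENAI_API_KEY: "sk-example" }, "OPENAI_API_KEY");
console.log(apiKey.length > 0); // true
```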

What you do next with LangChain JS depends on what you want to build. Here are a few examples of what you can create with LangChain JS and the capabilities you can leverage.

To create a basic chatbot, you can instantiate a model via LangChain using the following code:

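import { ChatOpenAI } from "@langchain/openai";
import { HumanMessage } from "@langchain/core/messages";

// temperature: 0 makes responses as deterministic as the model allows
const model = new ChatOpenAI({
  model: "gpt-4o-mini",
  temperature: 0,
});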

At its most basic, you can create a chatbot by making a direct call to the LLM:

const response = await model.invoke([new HumanMessage({ content: "Hi! I'm Bob" })]);
console.log(response.content);

You can then build on this to add more features and knowledge context. For example, you can leverage the message history feature in LangChain JS to save chat history to a data store, reload it in future sessions, and provide it to the LLM as context. That way, when the user next engages with the chatbot, the LLM can incorporate knowledge from past conversations into its responses.
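Message history comes down to storing each session's turns and replaying them ahead of the new message. The `SessionHistoryStore` class below is a hypothetical in-memory illustration; LangChain JS ships its own building blocks for this, such as `InMemoryChatMessageHistory` in `@langchain/core/chat_history` and `RunnableWithMessageHistory` in `@langchain/core/runnables`, plus integrations that persist history to a database.

```typescript
interface StoredMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical in-memory history store keyed by session ID. A production app
// would persist this to a database so it survives restarts.
class SessionHistoryStore {
  private readonly sessions = new Map<string, StoredMessage[]>();

  get(sessionId: string): StoredMessage[] {
    return this.sessions.get(sessionId) ?? [];
  }

  append(sessionId: string, ...messages: StoredMessage[]): void {
    this.sessions.set(sessionId, [...this.get(sessionId), ...messages]);
  }
}

// Build the model input: system prompt, then prior turns, then the new message.
function buildInput(store: SessionHistoryStore, sessionId: string, userText: string): StoredMessage[] {
  return [
    { role: "system", content: "You are a friendly assistant." },
    ...store.get(sessionId),
    { role: "user", content: userText },
  ];
}

const store = new SessionHistoryStore();
store.append(
  "bob-session",
  { role: "user", content: "Hi! I'm Bob" },
  { role: "assistant", content: "Hi Bob! How can I help?" },
);

const nextInput = buildInput(store, "bob-session", "What's my name?");
console.log(nextInput.length); // 4
```

Because the earlier "Hi! I'm Bob" turn is replayed, the model has what it needs to answer "What's my name?" even though the new message alone does not say.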

LLMs provide great foundational language comprehension and generation abilities, coupled with a general knowledge based on large data sets. Their limitation is that they’re a snapshot in time. Their data is limited to the last time they were trained (usually a year or more old).

Additionally, your company likely has volumes of proprietary, domain-specific data, such as past customer support transcripts and product model information and documentation. Sending this information to an LLM as part of the prompt produces more relevant, accurate, and up-to-date output.

Retrieval-augmented generation, or RAG, retrieves external data at query time based on user input. It pulls information from documents, API calls, and other external data sources and feeds it to the LLM as part of the prompt.

One common implementation of RAG is to store information in a vector database, which enables fast, complex, and context-sensitive searches across diverse data sets. LangChain JS integrates with multiple vector database systems, so you can include RAG-derived data in your prompts with a few lines of code.
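Under the hood, vector search ranks stored documents by how close their embedding vectors are to the query's embedding, commonly using cosine similarity. The sketch below is a toy: the keyword-count `embed` function is a hypothetical stand-in for a real embedding model, and the in-memory array stands in for a vector database such as Astra DB. It shows the retrieve-then-prompt flow of RAG, not any LangChain API.

```typescript
// Toy "embedding": counts of a few keywords. A real app would call an
// embedding model; this hypothetical stand-in just makes the math visible.
const VOCAB = ["refund", "shipping", "password", "reset", "order"];

function embed(text: string): number[] {
  const words = text.toLowerCase().split(/\W+/);
  return VOCAB.map((term) => words.filter((w) => w === term).length);
}

function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Index documents once (the vector-store step), then rank them per query.
const docs = [
  "To reset your password, open Settings and choose Reset password.",
  "A refund is issued within 5 business days of receiving your order.",
  "Standard shipping takes 3 to 7 days.",
];
const index = docs.map((text) => ({ text, vector: embed(text) }));

function retrieve(query: string, k: number): string[] {
  const q = embed(query);
  return index
    .map((doc) => ({ text: doc.text, score: cosineSimilarity(q, doc.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((doc) => doc.text);
}

// Stuff the retrieved context into the prompt sent to the LLM.
const question = "How do I reset my password?";
const retrievedContext = retrieve(question, 1).join("\n");
const finalPrompt = `Answer using only this context:\n${retrievedContext}\n\nQuestion: ${question}`;
console.log(finalPrompt);
```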

LangChain JS works with our own vector database solution, Astra DB, which provides data search with petabyte scalability. We provide a detailed code example that shows you how to import documents into Astra DB, search them on-demand with a user’s query, and then embed the results as context into your LLM call.

You can build more than chatbots with LangChain JS. You can use it to create sentiment analysis engines, structured content generation pipelines, and more.

LangChain JS also supports several third-party integrations besides LLMs - e.g., Redis for in-memory vector storage. It also supports multiple tools you can use to pull data from search engines, interpret code, automate tasks, and integrate with other third-party data sources such as Twilio, Wolfram Alpha, and more.

Using a framework like LangChain JS doesn’t eliminate the need to fine-tune your AI app for your specific use case.

You can leverage the numerous tools and capabilities that LangChain JS exposes to build your GenAI application quickly from reusable building blocks. With our Astra DB LangChain JavaScript integration, you can easily store and query documents to increase the accuracy, timeliness, and relevance of LLM responses.

Get started today - sign up for a free Astra DB account and build your first LangChain JS app!


Both Python and JavaScript versions of LangChain have their strengths, and the "better" choice really depends on what you’re building.

Python LangChain advantages:

JavaScript/TypeScript LangChain advantages:

Choose Python if you:

Choose JavaScript if you:

If you're starting fresh, choose your version based on application type rather than the LangChain implementation itself.

LangChain is a framework that simplifies building applications on top of LLMs (such as OpenAI's GPT models or Anthropic's Claude models) using sets of abstractions and tools specific to LLM applications. Some of the key use cases for LangChain include:

Conversational AI:

Build conversational AI agents and chatbots that can engage in natural language interactions. This includes features like memory, multi-turn conversations, and chaining multiple LLMs together.

Question answering:

Build QA systems that can answer questions by pulling information from various data sources like documents, databases, or APIs.

Task automation:

Build applications that can understand natural language instructions and break them into automated steps or workflows.

Content generation:

Generate high-quality text content like articles, stories, or product descriptions using LLMs.

Summarization and analysis:

Build systems that summarize long documents or analyze text to extract key insights and information.

Knowledge representation:

Use abstractions for representing and reasoning about structured knowledge, which is useful for building knowledge-intensive applications.

Multimodal interactions:

Integrate LLMs with other AI modalities like computer vision, speech recognition, and even robotic systems.

LangChain.js provides tools to build both stateful and stateless agents. Stateful agents maintain context and memory across multiple interactions, allowing for more natural, conversational experiences. Stateless agents, on the other hand, process each input independently without retaining state, which can be more appropriate for certain use cases.

LangChain.js provides a range of base abstractions, such as agents, chains, and prompts, that allow you to easily define and compose the different modeling steps required for your application. This modular approach makes it easier to build, test, and iterate on your LangChain.js applications.

Yes, LangChain.js is designed to be used in a variety of deployment environments, including edge functions. This enables you to build LLM-powered applications that can run closer to the user, providing low-latency responses and improved performance.

Use LangGraph to build stateful agents with first-class streaming and human-in-the-loop support. LangChain.js and LangGraph work seamlessly together, allowing you to build highly robust, knowledge-powered applications. By leveraging the base abstractions and modeling capabilities of LangChain.js, you can create LangGraph applications that are not only feature-rich but also scalable, maintainable, and reliable.

At its core, LangChain.js simplifies the process of building applications with large language models (LLMs).

Monitor key metrics, such as response times, success rates, and user engagement, and conduct A/B tests and other comparative analyses to continuously optimize your LangChain.js-powered solutions.

While LangChain.js doesn't provide built-in graph capabilities, it integrates seamlessly with LangGraph, a complementary framework developed by the same team. LangGraph represents an application as a graph: nodes are steps such as LLM calls or tool invocations, and edges define the control flow between them, including cycles. This lets you build stateful, multi-step agent workflows on top of your LangChain.js components.

LangChain.js doesn't directly manage graph-based workflows. That functionality is handled by LangGraph. Integrate LangGraph to define your application's control flow with nodes, edges, and shared state.
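LangGraph's core idea, nodes that update a shared state and edges that decide which node runs next, can be illustrated without any dependencies. Everything below (`runGraph`, the `write` and `revise` nodes, the `END` marker) is hypothetical; the actual LangGraph.js API builds graphs with `StateGraph`, `addNode`, `addEdge`, `addConditionalEdges`, and `compile`.

```typescript
// Shared state passed between nodes.
interface DraftState {
  draft: string;
  revisions: number;
}

type NodeFn = (state: DraftState) => DraftState;
// An edge function inspects the state and names the next node (or END).
type EdgeFn = (state: DraftState) => string;

const END = "END";

// Hypothetical nodes standing in for LLM calls.
const nodes: Record<string, NodeFn> = {
  write: (s) => ({ ...s, draft: "first draft" }),
  revise: (s) => ({ draft: `${s.draft} (revised)`, revisions: s.revisions + 1 }),
};

// Edges define control flow, including a cycle from "revise" back to itself.
const edges: Record<string, EdgeFn> = {
  write: () => "revise",
  revise: (s) => (s.revisions < 2 ? "revise" : END),
};

function runGraph(start: string, initial: DraftState, maxSteps = 10): DraftState {
  let state = initial;
  let current = start;
  for (let step = 0; step < maxSteps && current !== END; step++) {
    const node = nodes[current];
    const edge = edges[current];
    if (!node || !edge) throw new Error(`Unknown node: ${current}`);
    state = node(state);
    current = edge(state);
  }
  if (current !== END) throw new Error("Graph did not reach END within maxSteps");
  return state;
}

const finalState = runGraph("write", { draft: "", revisions: 0 });
console.log(finalState.draft, finalState.revisions); // first draft (revised) (revised) 2
```

The cycle on `revise` is the kind of loop a plain linear chain cannot express, which is why agent workflows with review or retry steps are usually modeled as graphs.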