Hello, fellow dev nerds! 👋 Inspired by some intriguing displays at GitHub Universe, we embarked on a mission to create an app that generates unique, visually stunning "CodeBeasts" based on your GitHub activity. Let's dive into how this app, built with Langflow, Python, Flask, and Lovable.dev, brings your coding history to life!

The inspiration behind CodeBeasts 🌟

At GitHub Universe, two displays caught my eye. One was a cool enclosure that explored your personal GitHub history, and the other was a set of postcards generated from repo activity.

One of the author's many cards from GitHub Universe

These brought a personal touch to coding history, and I thought, "Why not create an app that generates 'CodeBeasts' based on this same activity?" These beasts represent coding languages as animals (using O'Reilly’s animal choices as a reference), and AI vision models like DALL-E and Stability AI craft them.

How CodeBeasts works 🧠

The application is built on Python with Flask, with a frontend generated using Lovable.dev (a great resource for backend developers who need help with frontend design), and agentic AI powered by Langflow.

Here's the process:

- Enter your GitHub handle
- Choose an AI vision model (Stability AI or DALL-E)
- An AI agent scans your GitHub repositories
- The agent identifies your most-used programming languages
- Each language is associated with an animal (based on O'Reilly animal references)
  - Python = Snake 🐍
  - TypeScript = Blue Jay 🐦
  - JavaScript = Rhino 🦏
  - A bunch of others ...
- It then generates a prompt for a "CodeBeast" combining all the animals together
- Finally, it passes GitHub details and the prompt to the app for image generation and displays the result
- You can download or share your unique creation on Twitter
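To make the language-to-animal step concrete, here is a minimal sketch. In the app an LLM writes the final prompt inside the Langflow flow, so the `build_beast_prompt` helper and its prompt wording are hypothetical stand-ins, and only the three pairs listed above come from this post.

```python
# Only these three pairs appear in the post; the real app maps many more.
LANGUAGE_ANIMALS = {
    "Python": "snake",
    "TypeScript": "blue jay",
    "JavaScript": "rhino",
}


def build_beast_prompt(languages, mapping=LANGUAGE_ANIMALS):
    """Combine the animals for every recognized language into one prompt.

    Hypothetical helper: languages without a known animal are skipped.
    """
    animals = [mapping[lang] for lang in languages if lang in mapping]
    if not animals:
        return "Create a mysterious creature made of pure code"
    return "Create a chimera combining: " + ", ".join(animals)


if __name__ == "__main__":
    print(build_beast_prompt(["Python", "JavaScript", "HTML"]))
```

Keeping the mapping in a plain dictionary makes it easy to add more animals without touching the prompt logic.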

The magic behind the scenes: Langflow 🔮

The real magic happens through an agentic flow built with Langflow, a visual IDE for building GenAI and agentic workflows in a drag-and-drop, low-code/no-code way.

Langflow makes it very easy to create generative/agentic AI workflows that you can hook up to your application. Then you can quickly iterate with different LLMs and logic without needing to change any of your app code.

(Here's a link to the flow file. Download and import it into your own Langflow instance to try it out!)

Components in the CodeBeasts Langflow flow:

🟢 Input/Output (Green)

- GitHub handle input
- Final payload output for image generation

🟤 Data Storage/Retrieval (Beige)

- Stores GitHub user details in Astra DB to avoid expensive API calls on subsequent runs
- Retrieves stored data when a user is matched in the database

🟣 Conditional Logic (Pink)

- Uses the if-else component to determine the flow path
  - If user is new → Full agentic flow with GitHub API calls
  - If user exists in database → Pure LLM path (faster)

⚪ Post-Processing (Gray)

- Creates a structured payload for the application
- Ensures consistent output format for the UI

🔵 Agentic Components (Blue)

- Coding Language Agent: Gets languages from GitHub repos
- Profile Details Agent: Gets basic GitHub profile information
- Main Agent: Combines all information to create the final prompt

Why this architecture matters 📊

This setup contains both agentic and purely generative paths along with data storage, but why do we need to take this approach at all?

The guiding principle is reducing the number of GitHub API calls we need to make, especially when dealing with GitHub profiles with many repositories. While there’s an API to get repo languages, you still need to iterate through each repo to get those languages. This turns out to be a pretty expensive operation, both in time and in the number of LLM tokens needed to analyze the output and fetch all of the languages.
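For a sense of what that per-repo iteration produces, here is a plain-Python sketch of the aggregation step only. GitHub's REST endpoint `GET /repos/{owner}/{repo}/languages` returns a mapping of language name to bytes of code for a single repo, so the results have to be merged across repos. The `top_languages` helper and the sample numbers are assumptions for illustration; in the app, the agent does this work through its tools.

```python
from collections import Counter


def top_languages(per_repo_languages, limit=5):
    """Merge per-repo {language: bytes} mappings and rank languages by total bytes.

    Hypothetical helper: `per_repo_languages` holds one dict per repository,
    shaped like the response of GitHub's "list repository languages" endpoint.
    """
    totals = Counter()
    for repo_languages in per_repo_languages:
        totals.update(repo_languages)  # adds byte counts per language
    return [language for language, _ in totals.most_common(limit)]


if __name__ == "__main__":
    # Made-up sample data standing in for two API responses.
    sample = [
        {"Python": 1000, "HTML": 200},
        {"JavaScript": 700, "Python": 300},
    ]
    print(top_languages(sample))
```

One API response is needed per repository, which is why a profile with many repos gets expensive quickly.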

Not only that, but this kind of information doesn’t really change over time unless new repos are added. It’s a waste to constantly rescan the same GitHub profile. This is where the storage mechanism comes in. Scan once, analyze and massage the data, format it, then store it. On a subsequent run we already have the data, so we can generate our CodeBeast a lot faster and with far fewer tokens by using a regular LLM call to generate a new prompt, with no agent needed.
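The scan-once-then-reuse idea can be sketched in a few lines. This is an assumption-laden illustration, not the flow itself: a plain dictionary stands in for Astra DB, and `get_user_data` and the `scan` callable are hypothetical names for the conditional branch and the agentic GitHub scan.

```python
def get_user_data(handle, store, scan):
    """Return (data, source) for a GitHub handle, scanning only on a cache miss.

    `store` is any dict-like object (a stand-in for the database) and
    `scan` is a callable that performs the expensive GitHub lookup.
    """
    key = handle.lower()  # normalize so "SonicDMG" and "sonicdmg" share one entry
    if key in store:
        return store[key], "cached"  # fast path: no GitHub calls, no agent
    data = scan(handle)  # slow path: full scan of the profile
    store[key] = data
    return data, "scanned"


if __name__ == "__main__":
    def fake_scan(handle):
        # Placeholder for the expensive agentic scan.
        return {"languages": ["Python"]}

    cache = {}
    print(get_user_data("SonicDMG", cache, fake_scan)[1])
    print(get_user_data("sonicdmg", cache, fake_scan)[1])
```

The first call takes the slow path and fills the store; the second call for the same handle returns the stored data without scanning again.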

To sum up, here’s the overall architecture:

- Modular agents: Building specific, focused agents rather than one large agent
- Conditional logic: Using different paths based on input criteria
- Efficiency: Storing user data to avoid redundant API calls
- Structured output: Ensuring consistent payload format for the application

    {
        "languages": ["Python", "JavaScript", "HTML", "Java"],
        "prompt": "Create a chimera with snake, rhino...",
        "github_username": "https://github.com/SonicDMG",
        "num_repositories": 30,
        "animal_selection": [["Python snake", "representing adaptability and wisdom"], ["..."]],
        "github_achievements": []
    }

A pared-down example of the output payload
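Because the UI depends on this shape, it can help to check a payload before using it. The sketch below is one hypothetical way to do that on the application side; the `validate_payload` function and its choice of required keys are assumptions based on the example payload, not code from the app.

```python
import json

# Keys taken from the example payload; treating them all as required is an assumption.
REQUIRED_KEYS = {"languages", "prompt", "github_username", "num_repositories"}


def validate_payload(payload):
    """Raise if the flow's output is missing keys or has an unexpected shape."""
    missing = REQUIRED_KEYS - payload.keys()
    if missing:
        raise ValueError(f"payload is missing keys: {sorted(missing)}")
    if not isinstance(payload["languages"], list):
        raise TypeError("'languages' should be a list of language names")
    return payload


if __name__ == "__main__":
    raw = (
        '{"languages": ["Python"], "prompt": "Create a chimera...", '
        '"github_username": "https://github.com/SonicDMG", "num_repositories": 30}'
    )
    print(validate_payload(json.loads(raw))["languages"])
```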

Do we really need to use an agent at all?

In the last section, I mentioned using an agentic flow to interface with the GitHub API. We use this to fetch details about both the GitHub profile and the languages used in their repos. However, do we need to use this approach? Is there some requirement? Could we have used a more traditional approach that simply reads from the API and parses the results?

The short answer is, yes, we could have used a more traditional approach. There is no requirement that says we must use an agent. It comes down to weighing the pros and cons that work best for your situation and expertise.

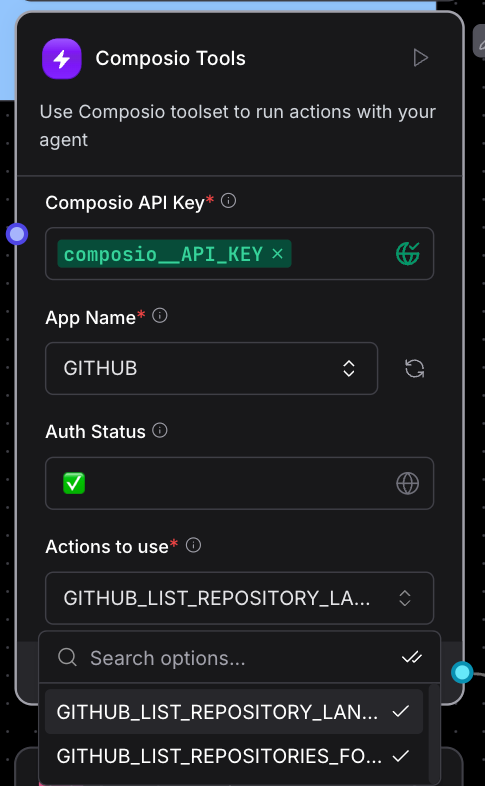

In our case, the agent and tools used made it very easy to authenticate, fetch data, and combine the results from the GitHub API. For example, after I added a tool action to list out the repos, it was only a matter of adding another “language” action for the agent to magically put it all together and grab the languages for each repo.

Leveraging session IDs in Langflow 🔑

Any time I’m building applications that need to track unique user information in Langflow, I immediately start using the “session_id” parameter in my input payload.

Using session IDs in Langflow, as demonstrated in this video, provides some really useful benefits:

- Preventing model confusion: Isolating user contexts to prevent confusion in AI models and decrease LLM token usage.
- Scaling considerations: Using external memory (Astra DB, Redis, etc.) for persistence is better for scalability.

    payload = {
        "input_value": message,
        "output_type": output_type,
        "input_type": input_type,
        "session_id": message.lower(),  # Add session ID to the payload, Langflow does the rest
    }

By using session IDs, you can create a more robust application, ensuring that each user experience is personalized with fewer API calls and reduced LLM token usage. It’s also extremely easy to set up and use.
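For context, here is a sketch of how a payload like this might be sent to a flow using only the standard library. It assumes Langflow's run endpoint at `/api/v1/run/{flow_id}`; the `build_run_request` helper, the base URL, the flow ID, and the `"chat"` defaults are placeholders for illustration, and a real deployment may also need an API key header.

```python
import json
import urllib.request


def build_run_request(base_url, flow_id, message,
                      output_type="chat", input_type="chat"):
    """Build (but do not send) a POST request that runs a Langflow flow.

    Hypothetical helper: the lowercased GitHub handle doubles as the session
    ID, so repeat visits by the same user map to the same session.
    """
    payload = {
        "input_value": message,
        "output_type": output_type,
        "input_type": input_type,
        "session_id": message.lower(),
    }
    url = f"{base_url.rstrip('/')}/api/v1/run/{flow_id}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL and flow ID; nothing is sent until urlopen() is called.
    request = build_run_request("http://localhost:7860", "my-flow-id", "SonicDMG")
    print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; that step is left out here so the sketch runs without a Langflow server.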

Tools and frameworks used

Finally, here are the various tools and frameworks we used for CodeBeasts.

I want to give a specific callout to Lovable.dev. As someone more focused on backend development, it enables me to iterate very quickly on nice-looking frontends using modern frameworks.

I also came across Logfire when it was first released, and now it’s a staple in all of my Python apps. It integrates nicely with Flask out of the box, which saves me a bunch of time when setting up logging.

Backend

- Flask: Lightweight web framework for server-side logic
- Logfire: Application logging and debugging
- Langflow: Visual IDE for building agentic workflows
- OpenAI API: DALL-E integration for image generation
- Stability AI API: Alternative image generation styles
- Requests: Simple HTTP library
- Pillow (PIL): Image manipulation library

Frontend

- Lovable.dev: Frontend design for backend devs
- React: JavaScript library for interactive UI
- NProgress: Progress bars for loading states
- Toast Notifications: User feedback on request status

Integrating AI image generation

- Stability AI: Uses detailed prompts for generating pixel art-style images (see docs or code)
- OpenAI DALL-E: Similar setup for pixel art images (see docs or code)

Try it yourself! 🚀

I hope this was a useful and fun example of something you can build using LLMs and agentic AI with image generation to boot. I love seeing the beasts everyone is creating.

Want to see what your coding history looks like as a mythical beast? Head over to codebeasts.onrender.com and generate your own unique CodeBeast!

And as always, happy coding!

Have you created your own CodeBeast? Share it on social media or just use it for yourself! And if you're interested in building similar applications, check out Langflow and Astra DB to get started on your own agentic AI journey!

FAQ

What is CodeBeasts?

CodeBeasts is a fun, visual app that generates unique mythical creatures based on your GitHub activity, representing your favorite programming languages as animals.

How does CodeBeasts work?

You enter your GitHub handle, select an AI vision model (Stability AI or DALL-E), and the app scans your repositories to identify your most-used languages. It then generates a "CodeBeast" combining these animals.

What technologies power CodeBeasts?

The app is built using Python, Flask, and Langflow for backend processing, and Lovable.dev with React for frontend design. Image generation uses Stability AI and OpenAI's DALL-E.

What is Langflow, and why use it?

Langflow is a visual IDE for building generative and agentic AI workflows. It simplifies creating complex AI flows, enables quick iteration, and integrates seamlessly with applications.

What are the benefits of using session IDs in Langflow?

Session IDs personalize user interactions, prevent confusion in AI models, reduce LLM token usage, and support scalable user-data management.

Why store user data?

Storing user data in databases like Astra DB minimizes redundant GitHub API calls, making subsequent runs faster and more efficient.

Can I create my own CodeBeast?

Absolutely! Visit codebeasts.onrender.com to generate your unique coding chimera.

Where can I find the flow file?

You can download and import the Langflow flow file from Langflow.new.

Which frameworks and libraries are used?

- Backend: Flask, Logfire, Langflow, Requests, Pillow
- Frontend: Lovable.dev, React, NProgress, Toast notifications
- AI Integration: Stability AI API, OpenAI DALL-E API