Apache Cassandra™ is the most scalable database on the planet. Its write latency and throughput are unmatched, and it is resilient to many of the failure scenarios that are commonplace in the distributed, cloud-based world we find ourselves in today. Cassandra is well-known for how easy it is to scale up, but elasticity is a key part of being cloud-native, and that includes the ability to scale down easily as well. Unfortunately, Cassandra’s architecture requires that when we scale down, we do so for both compute and storage, which leads to challenging tradeoff decisions for users.

Cassandra’s resilience comes from running server nodes in triplicate, and its fast writes depend on fast disks, both of which add considerably to the tradeoff discussions. After running the first iteration of DataStax Astra for over a year, we set ourselves an ambitious goal: to give our users a database as a service that retained all of the positive aspects of running Cassandra for production use cases with as few of the downsides and complexities as possible. Some of the questions we asked ourselves to frame the journey ahead were:

- How can Cassandra become better suited to the data needs of modern application developers, similar to what Kubernetes has delivered for the application layer?

- How can Cassandra retain its scalability while becoming more elastic to changing workloads?

- How do you tap into the power of Cassandra but only pay for what you use?

- How do we further reduce the already low operational burden of a NoOps DBaaS?

- And how can all of this be possible while keeping it fast, reliable, and efficient?

“We should make it serverless!”

A critical component of making Cassandra truly elastic would need to be the separation of compute and storage. Big data analytics solutions like Hadoop, Spark, Presto, and Snowflake have been using this trick for years, separating compute to commodity servers while using cheap dedicated storage like S3 to store terabytes of data for a fraction of the cost of combined enterprise-grade infrastructure that most traditional data warehouses had been built on. We’d need to do something similar if Astra was going to be cost-competitive storing massive quantities of data. Simultaneously, data would have to be written extremely quickly; the latest cloud servers with super-fast attached NVMe volumes fit the bill nicely.

Since modern cloud-native applications increasingly rely on the power of Kubernetes for scalability and workload orchestration, we knew that Astra would have to not only run on top of Kubernetes but also integrate with it natively, to achieve the same elastic scalability that the cloud-native apps relying on us would expect. The benefits of these architectural choices reinforce each other. Fast NVMe coupled with cheap, nearly infinite object storage means data could be accepted incredibly quickly and stored durably in a very cost-effective manner. Durable, shared object storage would give the system the ability to promptly bootstrap new nodes and have them ready to serve data orders of magnitude faster than streaming data between nodes ever could. Cloud-native deployments on Kubernetes would provide the ability to right-size the compute to our users’ immediate workload needs. All of this pointed to an obvious conclusion: we were going to make Cassandra serverless.
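To make the idea of separating compute from storage a little more concrete, here is a minimal toy sketch. It is not Astra's implementation: `ObjectStore`, `ComputeNode`, and every method name are hypothetical, an in-memory dict stands in for shared object storage, and another dict stands in for the fast local NVMe tier. It only illustrates why a new node can serve data without streaming it from an existing node.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectStore:
    """Stand-in for durable shared object storage (an in-memory dict here)."""
    blobs: dict = field(default_factory=dict)

    def put(self, key, rows):
        self.blobs[key] = dict(rows)

    def get_all(self):
        # Blobs come back in insertion order, oldest first.
        return list(self.blobs.values())


@dataclass
class ComputeNode:
    """Hypothetical compute node: a fast local buffer in front of shared storage."""
    name: str
    store: ObjectStore
    local_buffer: dict = field(default_factory=dict)  # stands in for fast NVMe
    flushed: int = 0

    def write(self, key, value):
        # Writes are accepted as soon as they land in the fast local tier.
        self.local_buffer[key] = value

    def flush(self):
        # Persist the buffered rows as one immutable blob in shared storage.
        if self.local_buffer:
            self.store.put(f"{self.name}-{self.flushed}", self.local_buffer)
            self.flushed += 1
            self.local_buffer = {}

    def read(self, key):
        if key in self.local_buffer:
            return self.local_buffer[key]
        # Newest blob wins, so scan shared storage from newest to oldest.
        for blob in reversed(self.store.get_all()):
            if key in blob:
                return blob[key]
        return None


store = ObjectStore()
node_a = ComputeNode("a", store)
node_a.write("user:1", "Ada")
node_a.flush()

# A brand-new node needs no streaming from node_a: it reads shared storage.
node_b = ComputeNode("b", store)
print(node_b.read("user:1"))
```

The sketch leaves out everything that makes this hard in practice (replication, caching, concurrent flushes), but it shows the property the paragraph above relies on: once data is in shared storage, adding compute is independent of moving data.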

Why is a serverless database good for users?

The most obvious benefit to users is cost-effectiveness. By taking the concepts we want to apply to Cassandra and making them available in Astra, we now have a service with a cost structure that’s uniquely correlated to the needs of the application. When demand is high, it scales up the number of nodes to meet the need, and when demand drops, it scales back down. Coupling that elasticity with efficient long-term storage, we can charge users by the operation instead of charging users for idle hardware.

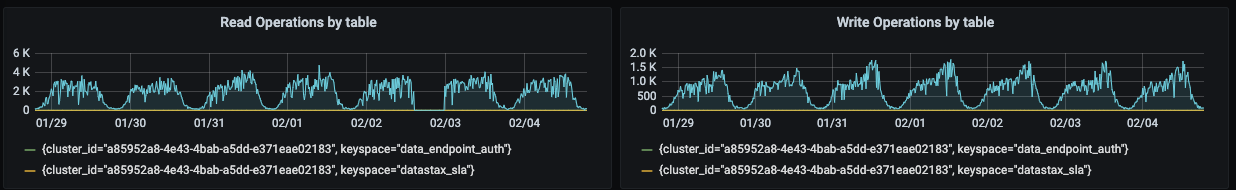

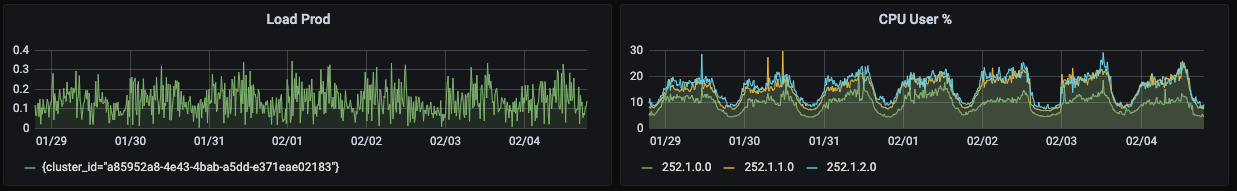

Consider the workload pictured here, which is very typical of consumer applications. The load on the database follows the circadian rhythm of the people it serves: higher load during the daytime, peaking in the evening when dinner is over and people are most likely to be on their mobile phones. In a typical database deployment, the customer pays the same for the database when it is in high demand in the evening as when it is at almost zero demand during the night, so each of those troughs is inefficient and wastes valuable capital.

This problem is compounded by the fact that developers need to be ready for seasonal peaks and growth, which can mean that only 30% of the hardware capacity is in use even at the daily peak. That leaves roughly 70% of the system idle during peak hours and over 90% of the capacity idle during low-usage periods!

Astra automatically scales in response to the workload, leading to more efficient operations and, in some measured cases, over 70% savings, which we can pass on to our users!
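The arithmetic behind this argument can be sketched in a few lines. Everything in the block below is an assumption made for illustration: the hourly load values are invented, `OPS_PER_NODE` is an arbitrary per-node capacity, and the elastic cluster is idealized (it resizes every hour with no headroom). It is not a reproduction of the measured savings mentioned above, only a way to see how a fixed cluster sized for 30% peak utilization compares with one that follows demand.

```python
import math

# Hypothetical hourly demand (operations per second) over one day:
# quiet overnight, busier in the daytime, peaking in the evening.
hourly_ops = [50, 40, 30, 30, 40, 80, 200, 400, 500, 550, 600, 650,
              700, 650, 600, 600, 650, 750, 900, 1000, 900, 600, 300, 100]

OPS_PER_NODE = 100        # assumed capacity of one node
PEAK_UTILIZATION = 0.30   # fixed cluster sized so the daily peak uses 30%


def fixed_nodes(load, ops_per_node, peak_utilization):
    """Nodes needed when the cluster is sized once, with headroom for growth."""
    return math.ceil(max(load) / (ops_per_node * peak_utilization))


def elastic_nodes(ops, ops_per_node, minimum=1):
    """Nodes needed for a single hour when capacity follows demand."""
    return max(minimum, math.ceil(ops / ops_per_node))


fixed = fixed_nodes(hourly_ops, OPS_PER_NODE, PEAK_UTILIZATION)
fixed_node_hours = fixed * len(hourly_ops)
elastic_node_hours = sum(elastic_nodes(ops, OPS_PER_NODE) for ops in hourly_ops)

capacity = fixed * OPS_PER_NODE
idle_at_peak = 1 - max(hourly_ops) / capacity
idle_at_trough = 1 - min(hourly_ops) / capacity
savings = 1 - elastic_node_hours / fixed_node_hours

print(f"fixed cluster: {fixed} nodes, {fixed_node_hours} node-hours/day")
print(f"elastic cluster: {elastic_node_hours} node-hours/day")
print(f"idle at peak: {idle_at_peak:.0%}, idle at trough: {idle_at_trough:.0%}")
print(f"node-hour savings: {savings:.0%}")
```

Changing the load curve or the utilization target changes the result considerably, which is the point: the cost of a fixed cluster is set by its peak plus headroom, while the cost of an elastic one tracks the area under the curve.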

More dev, less ops

At DataStax, we believe in the philosophy that we need to let builders build. Engineers overburdened by operations are not building, and generally not as happy as they are when developing solutions to solve challenging business problems. Our goal was to reduce most of Cassandra’s operational overhead when we first developed Astra on dedicated instances, but some of it was unavoidable. High infrastructure costs meant users were either forced to develop on local databases or squeeze onto a shared instance. Setting up databases to support non-prod environments and CI/CD deployment pipelines was time-consuming and expensive. In fact, it is not uncommon for enterprises to invest as much or more in non-production hardware and software and yet always find capacity outstripped by the needs of many engineers working on many independent microservices. With Astra Serverless, those problems and inefficiencies are gone. Because users only pay for the operations they consume and the data they store and transmit, each developer in an organization can have their own Cassandra database, and non-prod instances are easy to set up and tear down with the Astra DevOps API.

We’ve also revamped our security model and released a new version of Astra Identity Access Management (IAM) that simplifies the Cassandra security model by adding an easy-to-use and comprehensive permission system suitable for everything from a new application to the most stringent enterprise requirements.

Astra still comes with all of the developer friendliness of the Stargate data gateway’s REST, GraphQL, and JSON Document APIs built-in. As an added productivity boost, Astra now supports external JWT token providers so developers can leverage existing authentication providers like Auth0 directly. This reduces the burden of adding complex user management, authentication, and authorization logic into your application.

Tuned performance and improved reliability

Database performance tuning has been a high art form as long as we’ve had databases, and Cassandra is no exception. Historically, to get the very most out of Cassandra, a database needed to be tuned to the anticipated workload. Optimizing for high-write workloads was different from optimizing for high-read or hybrid workloads, and as data size on disk grew, compute had to scale correspondingly. Part of the workload sensitivity can be traced to Cassandra’s monolithic architecture: all of the functions the database needs to perform (coordination, reads, writes, compaction, and repair) had to run in a single JVM process. To build serverless in Astra, we recognized early on that those functions could be broken out into their own processes and orchestrated independently. Data storage nodes are now independent processes and can be scaled independently to meet the workload demands. With Cassandra, read latency can suffer when compaction gets delayed, but by compartmentalizing the compactor, compaction and repair can now be scaled up and down as necessary to make reads as efficient as possible, regardless of write throughput. Finally, coordination is delegated to a multitenant-optimized version of Stargate, which lets us run Stargate as a shared fleet rather than as dedicated coordinators. This allows for true horizontal scalability at the application service level, ensuring that, regardless of workload size, there will always be a node available to service the request.
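As a rough illustration of what scaling these functions independently could look like, the sketch below sizes each component from its own signal. The `Workload` fields, the per-process capacities, and the minimum of three data nodes are all assumptions chosen for the example; they are not Astra's actual metrics or thresholds.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical metrics an orchestrator might observe."""
    requests_per_sec: int      # traffic arriving at the coordinators
    writes_per_sec: int        # writes reaching the data nodes
    pending_compactions: int   # backlog of compaction tasks


# Assumed per-process capacities; real values would come from measurement.
REQUESTS_PER_COORDINATOR = 5_000
WRITES_PER_DATA_NODE = 2_000
COMPACTIONS_PER_COMPACTOR = 10


def replicas(demand, per_process, minimum=1):
    """Processes needed to cover the demand, never fewer than the minimum."""
    return max(minimum, math.ceil(demand / per_process))


def plan(w):
    """Size each component from its own signal, independently of the others."""
    return {
        "coordinator": replicas(w.requests_per_sec, REQUESTS_PER_COORDINATOR),
        "data": replicas(w.writes_per_sec, WRITES_PER_DATA_NODE, minimum=3),
        "compactor": replicas(w.pending_compactions, COMPACTIONS_PER_COMPACTOR),
    }


# Same traffic in both cases; only the compaction backlog differs,
# so only the compactor count changes between the two plans.
steady = plan(Workload(12_000, 4_000, 8))
backlog = plan(Workload(12_000, 4_000, 45))
print(steady)
print(backlog)
```

In a monolithic process, the only way to add compaction capacity would be to add whole nodes; here the compactor count moves on its own while coordinators and data nodes stay put.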

Once we had compartmentalized Cassandra’s subprocesses, we set to work building out the native Kubernetes integrations that would facilitate Astra’s dynamic scalability. The team finally settled on an architecture orchestrated by a pair of native Kubernetes operators that share the duty of provisioning and orchestrating all of the compartmentalized components, as well as supporting subsystems like Prometheus and Grafana for metrics collection and visualization, shims to support IAM and Astra’s automation mechanisms, and internal management services. Another benefit of the move to Kubernetes is that it relies on etcd for consistent metadata, which Astra also leverages to make cluster node membership and schema changes immediately consistent across all nodes in the cluster. This eliminates the issues that can occur with changing node topology brought on by scaling and elasticity, as well as issues caused by inconsistent schemas across nodes.
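The value of a consistent metadata store for schema changes can be shown with a small compare-and-set sketch. The `MetadataStore` class below is a hypothetical in-memory stand-in, not the etcd client API and not Astra's code; it only illustrates how versioned writes prevent two processes from applying conflicting schema changes.

```python
class MetadataStore:
    """In-memory stand-in for a consistent key-value store such as etcd."""

    def __init__(self):
        self._data = {}  # key -> (version, value)

    def get(self, key):
        # Unknown keys report version 0 and no value.
        return self._data.get(key, (0, None))

    def compare_and_set(self, key, expected_version, value):
        """Write only if nobody else has changed the key since we read it."""
        version, _ = self.get(key)
        if version != expected_version:
            return False
        self._data[key] = (version + 1, value)
        return True


store = MetadataStore()

# Two processes read the same schema version, then both try to alter it.
version, _ = store.get("schema/users")
first = store.compare_and_set("schema/users", version, ["id", "name"])
second = store.compare_and_set("schema/users", version, ["id", "email"])

# The second writer is rejected and would have to re-read and retry.
print(first, second)
print(store.get("schema/users"))
```

Because every change goes through a single versioned record, all readers see the same schema at the same version, rather than waiting for competing changes to propagate and reconcile between nodes.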

What’s next?

Our engineers are hard at work optimizing Astra for even better performance, scalability, and cost-effectiveness while also preparing for our next release which will bring features like CDC and multi-region replication into the serverless world. While doing this, our engineers are also writing a new series of blog posts and papers that dive deep into the technical implementation details that we will begin publishing in our DataStax How We Built This series.

At the same time, we are getting ready to propose the changes we’ve made for Astra Serverless to the Apache Cassandra project through the Cassandra Enhancement Proposal (CEP) process, so the entire Cassandra community can begin to take advantage of the benefits built into DataStax Astra. In the meantime, we encourage you to give Astra a try. The first $25 worth of usage (30 million reads, 5 million writes, and 90GB of storage) is free every month, forever. All we ask in return is that you give us any and all feedback so we can continue to improve Astra and make it the best DBaaS on the planet.

Finally, as you can imagine, building and improving Astra has been and will continue to be a massive technical challenge and we’re looking for top engineers of all stripes to help us along our journey. If you feel like you’re up for this type of challenging and fulfilling work, drop by our Careers page.