OpsCenter: An Architecture Overview

OpsCenter is a great tool for managing and monitoring your Cassandra and DataStax Enterprise clusters. In this post we'll dive a bit deeper into the architecture to get a better view into how OpsCenter works. Understanding how the different components of OpsCenter work and interact should be useful for both users and administrators.

There are three main components of OpsCenter:

- The User Interface

- The OpsCenter Daemon

- The OpsCenter Agent

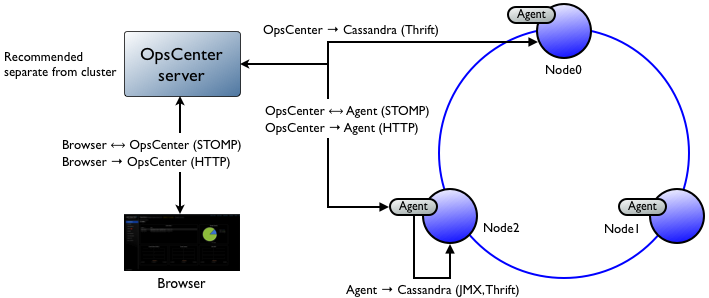

Hopefully the diagram below helps to illustrate the different ways that each of these components interact with each other and Cassandra. It should be useful as a reference when reading about each individual component.

Diagram

User Interface

This is the main entry point for interacting with OpsCenter. It consists of JavaScript (specifically Dojo), CSS, and HTML. The UI is completely self-contained: it is served to the user's browser when OpsCenter is loaded, and from that point on the JavaScript interacts with the API exposed by the OpsCenter daemon.

OpsCenter Daemon

This is the main OpsCenter process. It is responsible for:

- Serving the OpsCenter UI

- Pushing new information to the OpsCenter UI

- Exposing a management API

- Managing OpsCenter Agents

- Interacting with the Cassandra cluster via Thrift

The first few responsibilities of the OpsCenter daemon relate to interaction with the UI (and possibly other clients). The daemon runs an HTTP server in order to serve the UI to users' browsers. Once the UI has been served, all further interaction with the daemon occurs either via the REST API or via STOMP. The daemon exposes a REST API to keep a clean separation between the components of OpsCenter, and to give advanced users a more programmatic interface if they want one. STOMP is used to allow the daemon to "push" updates to the UI without the UI needing to make an HTTP request for each one. This is useful for things that need to be updated very frequently. For example, server load in the UI is updated every 5 seconds.
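To make the push channel a little more concrete, here is a minimal sketch of how a STOMP frame is laid out on the wire: a command line, header lines, a blank line, the body, and a terminating NUL byte. The destination `/topic/load` and the JSON payload are made up for illustration; they are not taken from OpsCenter itself, and header-value escaping is left out for brevity.

```python
def encode_frame(command, headers, body=""):
    """Serialize a STOMP frame: command, headers, blank line, body, NUL."""
    lines = [command]
    lines.extend(f"{key}:{value}" for key, value in headers.items())
    return ("\n".join(lines) + "\n\n" + body + "\x00").encode("utf-8")


def decode_frame(raw):
    """Parse bytes produced by encode_frame back into (command, headers, body)."""
    text = raw.decode("utf-8")
    if not text.endswith("\x00"):
        raise ValueError("STOMP frame must end with a NUL byte")
    head, _, body = text[:-1].partition("\n\n")
    command, *header_lines = head.split("\n")
    headers = {}
    for line in header_lines:
        key, _, value = line.partition(":")
        headers.setdefault(key, value)  # keep the first occurrence of a repeated header
    return command, headers, body


# Hypothetical push of a server-load update (names are assumptions).
frame = encode_frame(
    "MESSAGE",
    {"destination": "/topic/load", "content-type": "application/json"},
    '{"node": "10.0.0.1", "load": 0.42}',
)
command, headers, body = decode_frame(frame)
```

Because the receiver only has to read frames as they arrive, frequently changing values such as server load can be delivered without a request per update.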

The other responsibilities of the daemon relate to actually managing and monitoring the Cassandra cluster. The daemon is the central point where cluster information is aggregated, so that it can be exposed to the UI coherently. Some of this can be done independently by the daemon (without the agents) via Thrift. The information gathered over Thrift includes basic cluster information (node/token list, partitioner, snitch), schema information, and column family data. For additional information, the daemon relies on the OpsCenter agents being installed on the Cassandra nodes themselves. The interaction between the daemon and the agents is quite similar to how the UI interacts with the daemon: agents expose a REST API for the daemon to query, and they also push frequently updated data (like server load) to the daemon.
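The aggregation role described above can be pictured with a small sketch. The `ClusterView` class, its method names, and the sample addresses and tokens are all hypothetical; the sketch only shows the idea of merging ring information with values pushed by agents into one per-node view, not how the daemon is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterView:
    """Latest known state per node, merged from several sources."""
    nodes: dict = field(default_factory=dict)

    def set_ring(self, tokens_by_node):
        """Record basic ring information, as the daemon might read it from Cassandra."""
        for address, token in tokens_by_node.items():
            self.nodes.setdefault(address, {})["token"] = token

    def record_push(self, address, metric, value):
        """Store a value pushed by the agent on `address`; newer values overwrite older ones."""
        self.nodes.setdefault(address, {})[metric] = value

    def snapshot(self):
        """Return a copy that can be handed to the UI layer without exposing internal state."""
        return {address: dict(info) for address, info in self.nodes.items()}


view = ClusterView()
view.set_ring({"10.0.0.1": "0", "10.0.0.2": "100"})  # illustrative tokens
view.record_push("10.0.0.1", "load", 0.42)
view.record_push("10.0.0.1", "load", 0.57)  # a later push replaces the earlier one
```

Keeping a single merged view per node is one simple way to give the UI a coherent picture regardless of which source a value came from.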

OpsCenter Agent

The OpsCenter agent is the process that OpsCenter runs on each server in the Cassandra cluster. It is responsible for:

- Exposing an interface for the OpsCenter daemon to manage that node

- Sending information about that node to the OpsCenter Daemon

- Collecting metrics about that node

- Writing metric data to Cassandra via Thrift

The main responsibility of the agent is to collect information about the node it is monitoring. That information is used in a number of ways. Much of it goes to the OpsCenter daemon, either pushed by the agent via STOMP (server load, for example) or fetched by the daemon from the REST API that the agent exposes. The agent also collects metric and performance information from the node so that users can view performance graphs in the UI. It stores this data in the Cassandra cluster by connecting via Thrift to the node it is monitoring and inserting it there.

The other main responsibility of the agent is to expose a management interface for the Cassandra node it is monitoring. Cassandra exposes quite a few operations for managing Cassandra nodes (compact, flush, repair, etc.) via JMX. It is difficult for the OpsCenter daemon to interact with that interface directly, mainly because the daemon is written in Python and because of firewall/networking concerns. For this reason, the agent acts as a proxy for those management functions so that the OpsCenter daemon can access them.
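The proxy idea can be sketched as follows. This is only an illustration of the pattern, written in Python for consistency with the other examples here: the `NodeProxy` class, the response shape, and the `invoke_local` callable (a stand-in for whatever actually calls the local node's management interface) are assumptions, not the agent's real code.

```python
# Operation names taken from the examples in the text above.
ALLOWED_OPERATIONS = {"compact", "flush", "repair"}


class NodeProxy:
    """Accepts management requests and forwards them to the local node."""

    def __init__(self, invoke_local):
        # invoke_local(operation, params) is a hypothetical hook that would
        # perform the real call against the node this agent runs on.
        self._invoke_local = invoke_local

    def handle(self, operation, **params):
        if operation not in ALLOWED_OPERATIONS:
            return {"status": 404, "error": f"unknown operation: {operation}"}
        result = self._invoke_local(operation, params)
        return {"status": 200, "result": result}


# Stand-in for the local call: just records what was requested.
calls = []

def fake_invoke(operation, params):
    calls.append((operation, params))
    return "ok"

proxy = NodeProxy(fake_invoke)
response = proxy.handle("flush", keyspace="demo")
```

With this shape, the caller only needs to reach the proxy over the network; the call to the node itself stays local to the machine.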

There are a couple of additional points worth noting. First, as described above, the agent only interacts with Cassandra through the local node it is running on. This generally avoids any firewall or networking concerns between the agent and Cassandra. Second, the agent is written in Clojure, a language that runs on the JVM. This makes interacting with Cassandra (also running on the JVM) much easier than if the agent were written in a non-JVM language (for example, Python).

A Note on SSL

One part left out of the description and diagram above is encryption of the different communication types via SSL. By default, OpsCenter encrypts some of this communication for security purposes. SSL encryption can also be configured optionally for other types of communication.

Configured with SSL encryption by default:

- STOMP between OpsCenter Daemon and agent

- HTTP between OpsCenter Daemon and agent

Optional support for SSL encryption:

- HTTP between the browser and OpsCenter daemon

- Thrift between OpsCenter and Cassandra (available in DataStax Enterprise)

- Thrift between agent and Cassandra (available in DataStax Enterprise)
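The two lists above can be summarized as a small lookup table. This is purely a restatement of the lists as data, assuming "OpsCenter" in the Thrift entry refers to the daemon; the names below are illustrative and are not OpsCenter configuration keys.

```python
DEFAULT_ON = "default"
OPTIONAL = "optional"

# (from, to, protocol) -> whether SSL is on by default or must be enabled.
CHANNELS = {
    ("daemon", "agent", "STOMP"): DEFAULT_ON,
    ("daemon", "agent", "HTTP"): DEFAULT_ON,
    ("browser", "daemon", "HTTP"): OPTIONAL,
    ("daemon", "cassandra", "Thrift"): OPTIONAL,  # DataStax Enterprise
    ("agent", "cassandra", "Thrift"): OPTIONAL,   # DataStax Enterprise
}


def encrypted_channels(enabled_optional=()):
    """Return the channels that use SSL, given which optional ones were turned on."""
    enabled = set(enabled_optional)
    return sorted(
        channel
        for channel, mode in CHANNELS.items()
        if mode == DEFAULT_ON or channel in enabled
    )


defaults_only = encrypted_channels()
with_browser = encrypted_channels([("browser", "daemon", "HTTP")])
```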

Conclusion

Hopefully this gives a better understanding of the internal structure of OpsCenter.