Recently, several software vendors (OpenAI, Pinecone, Weaviate, and DataStax among them) announced new end-to-end retrieval-augmented generation (RAG) solutions. This is evidence that the market is starting to mature: instead of assembling a RAG solution from separate parts (an LLM or a vector database, for instance), customers want a complete solution encompassing all the components necessary for RAG.

What is LangChain?

LangChain is an open source framework for developing applications powered by language models. It enables applications that:

- Are context-aware, as they connect a language model to sources of context (prompt instructions, few-shot examples, content to ground its response in, for example)

- Can reason, as they rely on a language model to decide how to answer based on the provided context, what actions to take, and so on
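To make the "context-aware" point concrete, here is a minimal sketch of how the three context sources listed above (prompt instructions, few-shot examples, and grounding content) might be combined into a single prompt. It deliberately uses plain Python rather than LangChain's own API; the `PromptContext` class, the `build_prompt` function, and the sample strings are all hypothetical names and data invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PromptContext:
    """Hypothetical container for the three context sources (not a LangChain class)."""
    instructions: str
    few_shot_examples: list[tuple[str, str]] = field(default_factory=list)
    grounding_documents: list[str] = field(default_factory=list)


def build_prompt(context: PromptContext, question: str) -> str:
    """Assemble one prompt string from instructions, grounding content, and examples."""
    sections = [context.instructions.strip()]

    # Grounding content: number each document so an answer can refer back to it.
    if context.grounding_documents:
        numbered = [
            f"[{i}] {doc.strip()}"
            for i, doc in enumerate(context.grounding_documents, start=1)
        ]
        sections.append("Context:\n" + "\n".join(numbered))

    # Few-shot examples: show the model the expected question/answer format.
    for example_question, example_answer in context.few_shot_examples:
        sections.append(f"Question: {example_question}\nAnswer: {example_answer}")

    # The real question goes last, with the answer left open for the model.
    sections.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(sections)


# Illustrative data only; none of these strings come from a real system.
context = PromptContext(
    instructions="Answer using only the context below.",
    few_shot_examples=[("What color is the logo?", "Blue, according to [1].")],
    grounding_documents=["The support desk is open on weekdays."],
)
prompt = build_prompt(context, "When is the support desk open?")
print(prompt)
```

In a real application the assembled prompt would then be sent to a language model, and the grounding documents would typically come from a retrieval step (for example, a vector search) rather than a hard-coded list.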

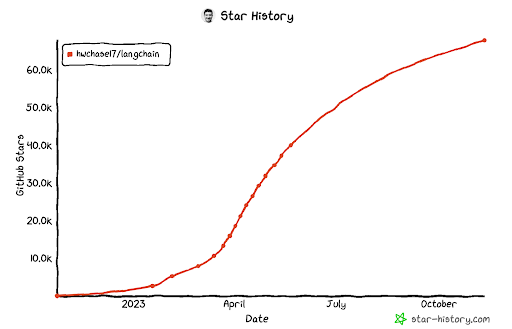

LangChain is also one of the most popular open source projects ever, with massive adoption among startups and large enterprises alike.

LangChain and the enterprise

Approximately 87% of DataStax’s vector search production customers use LangChain. Getting to production for generative AI is significantly more challenging than building a prototype. In particular, multiple advanced RAG techniques are required to ensure that the answers are not hallucinations and can leverage both structured and unstructured data. For our production customers, LangChain is a godsend because it enables them to move fast and implement advanced RAG techniques. For a full deep-dive, watch our recent webinar on Skypoint’s journey to production with Astra DB and LangChain.

As LangChain has grown, it has also become clear that a single package is not the final form the LangChain ecosystem needs to take to meet enterprise requirements. LangChain has already grown to over 500 integrations and has implemented advanced prompting techniques from dozens of research papers. In this rapidly progressing ecosystem, it's tough to keep all of those in sync and fully tested. As such, LangChain has already started to make the package more modular.

This started with LangChain Templates, which are end-to-end examples for getting started with a particular application, and continues with LangChain-Core, as well as an effort to split integrations out into separate packages so that companies like DataStax can independently add features in ways that don't break the project. DataStax also hopes to contribute more benchmarking to determine which RAG techniques (FLARE and multimodal, for example) work in practice, in terms of both accuracy and latency.

Why we’re betting on LangChain

Beyond the rocketship adoption of LangChain by the developer community, there are several important reasons why we are betting on LangChain.

Speed of implementing advanced RAG techniques

Getting to production is a real challenge for many customers due to poor LLM responses, data quality issues, or latency issues. Many of these issues are being actively addressed by the research community (e.g., Google DeepMind); however, only LangChain and LlamaIndex are implementing these research papers for production settings. In particular, LangChain has implemented many advanced reasoning techniques, including ReAct and FLARE, that significantly reduce hallucinations.

We can contribute to the community to drive enterprise adoption

Having run a business built on open source technology for 13 years, we believe we can contribute technical expertise to the project, especially in the area of building features for the most demanding enterprise use cases.

"We're excited to work with DataStax on pushing the boundaries of LLM applications. They understand a lot of subtleties around RAG and have been an excellent partner in striving to deliver value in this nascent ecosystem," said LangChain cofounder and CEO Harrison Chase.

Conclusion

Although many other companies have chosen to write their own code for their end-to-end RAG solutions, DataStax has chosen to put its efforts into LangChain because we love how the project is evolving, and we have something to contribute. If you want to try our RAG stack solution with LangChain, read more here.