We recently created an exciting multiplayer movie trivia game that combines real-time gaming with AI-generated questions. Here's how we put it together.

The core components

The game uses three main technologies:

- Langflow for AI question generation and RAG implementation

- Astra DB for storing and retrieving movie content and questions

- PartyKit for real-time multiplayer functionality

Question generation system

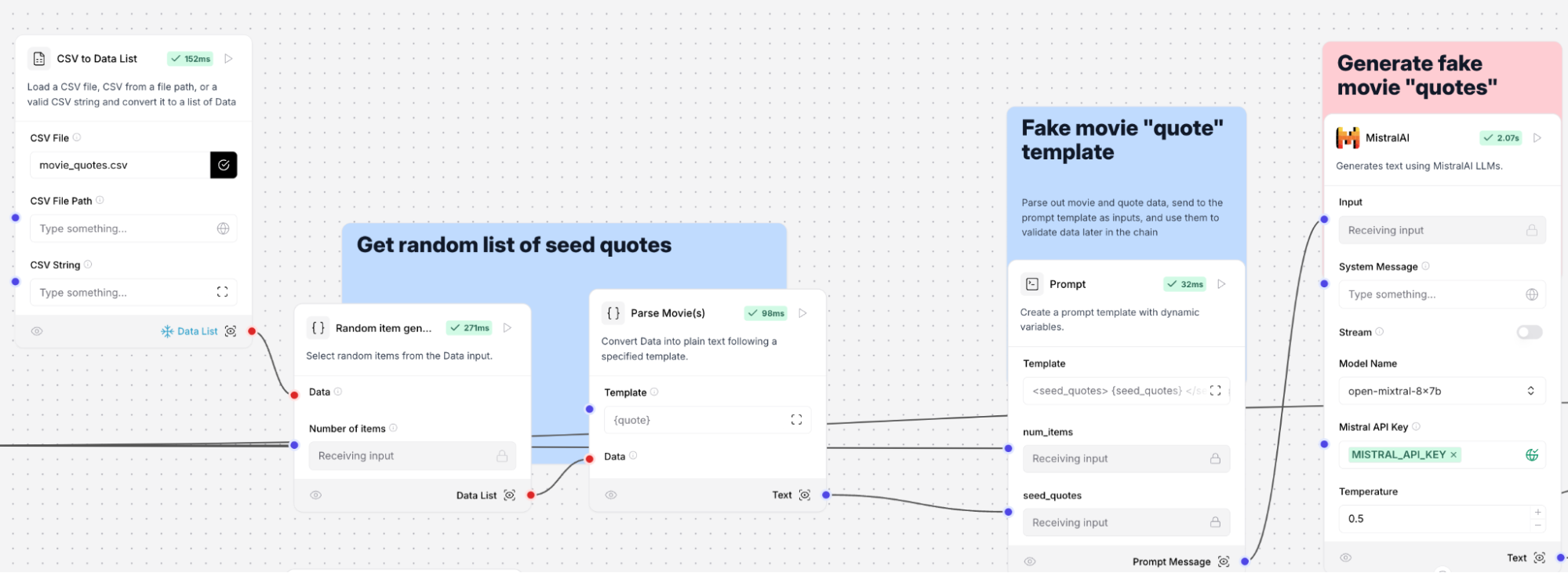

We implemented a RAG (retrieval-augmented generation) system using Langflow to generate movie-related questions. The flow reads a CSV file of movie names and their quotes, then pulls random entries to produce not just real, contextually accurate movie quotes but also some very believable red herrings.
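As a rough illustration of the sampling step (not the actual Langflow flow), the sketch below parses a small in-memory CSV and picks a few random rows that could be handed to the generation pipeline. The column names `movie` and `quote`, the placeholder data, the helper names, and the naive comma-splitting parser are all assumptions made for this example; the real file layout may differ.

```javascript
// Placeholder data; the real CSV and its column names may differ.
const csvText = [
  "movie,quote",
  "Movie A,First sample quote",
  "Movie B,Second sample quote",
  "Movie C,Third sample quote",
  "Movie D,Fourth sample quote",
].join("\n");

// Naive parser: assumes fields contain no commas, quotes, or line breaks.
function parseCsv(text) {
  const [headerLine, ...lines] = text.trim().split("\n");
  const headers = headerLine.split(",");
  return lines.map((line) => {
    const cells = line.split(",");
    return Object.fromEntries(headers.map((header, i) => [header, cells[i]]));
  });
}

// Fisher-Yates shuffle on a copy, then take the first `count` rows.
// `random` is injectable so the function can be tested deterministically.
function sampleRows(rows, count, random = Math.random) {
  const copy = [...rows];
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy.slice(0, count);
}

// Two random rows to use as input for question generation.
const seedRows = sampleRows(parseCsv(csvText), 2);
```

A production flow would want a proper CSV parser, since real movie quotes often contain commas.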

Multiplayer architecture

The game uses a client-server architecture where a central server maintains the true game state. This ensures:

- Fair gameplay across all players

- Synchronized question delivery

- Real-time score updates

- Player state management

We built this with PartyKit because it let us iterate rapidly. The game took two weeks to go from zero to MVP, after which we continued to refine it.
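One way to picture a server that owns the true game state is to treat every screen as a pure function of that state. The sketch below is only an illustration under assumed names: the state shape, the team and player identifiers, and the `deriveLeaderboard` and `derivePlayerView` helpers are hypothetical and not taken from the game's source.

```javascript
// Hypothetical server-side state; field names are assumptions for this sketch.
const serverState = {
  phase: "playing",
  currentQuote: "Sample quote text",
  teams: {
    red: { players: ["ana", "ben"], score: 3 },
    blue: { players: ["cy"], score: 5 },
  },
};

// Leaderboard screen: teams sorted by score, highest first.
function deriveLeaderboard(state) {
  return Object.entries(state.teams)
    .map(([teamId, team]) => ({ teamId, score: team.score }))
    .sort((a, b) => b.score - a.score);
}

// Player screen: only what one player needs, computed from the same state.
function derivePlayerView(state, playerId) {
  const entry = Object.entries(state.teams).find(([, team]) =>
    team.players.includes(playerId)
  );
  return {
    phase: state.phase,
    quote: state.currentQuote,
    teamId: entry ? entry[0] : null,
    teamScore: entry ? entry[1].score : null,
  };
}
```

Because neither function keeps anything of its own, every client that receives the same server state renders the same picture of the game.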

Game flow

- Room creation - Players join dedicated game rooms, similar to a traditional lobby system. A room can contain up to four teams of four players for a total of 16 players.

- Question generation - The system pulls movie content from a large CSV and generates questions using our Langflow pipeline.

- Real-time interaction - Players are shown a movie quote, and each player on each team holds one of the potential answers. The quote may come from a real movie, or it may have been AI-generated. Teammates collaborate with each other and compete against the other teams.

- Score tracking - The server tracks both correct answers and how quickly each team answers.

At the end, the team that answers the most questions correctly in the least time wins.
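The post does not spell out how correctness and speed are combined, so the following is just one plausible sketch: rank teams by number of correct answers and break ties by total answer time. The `rankTeams` helper and the `correct` and `totalMs` fields are hypothetical.

```javascript
// Hypothetical result shape: { team, correct, totalMs }.
// Sort by most correct answers first; ties go to the lower total answer time.
function rankTeams(results) {
  return [...results].sort(
    (a, b) => b.correct - a.correct || a.totalMs - b.totalMs
  );
}

const ranking = rankTeams([
  { team: "red", correct: 7, totalMs: 61000 },
  { team: "blue", correct: 8, totalMs: 90000 },
  { team: "green", correct: 8, totalMs: 72000 },
]);
// ranking order: green, blue, red
```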

Technical implementation

This game has many moving parts, as you can imagine, but for the purposes of this post, we’ll focus on just a few. Feel free to check out the source code on GitHub for more details.

AI question pipeline

We needed two pipelines to create the questions for the game:

- The first one took real movie quotes and generated alternative movies that could have been the answer.

- In the other, we generated fake movie quotes and the movies that could have spawned those quotes.

The former was relatively straightforward, but generating fake movie quotes that sounded real was much more of a challenge.

It was critical that the AI-generated quotes be close enough to real movie quotes to trick players into believing they were real. This interaction forms the heart of the game: if generated quotes are too obvious, the challenge is ruined. By introducing AI-generated quotes into play, we inject a level of suspense, uncertainty, and intrigue among players.

We experimented with multiple LLMs and providers, including Groq, OpenAI, Mistral, and Anthropic. This was an area where Langflow really shone, allowing us to connect and test new models in seconds without having to change our application logic between test runs.

We landed on Mistral AI’s open-mixtral-8x7b model with a temperature of 0.5 (for some creativity, but not too much), and seeded it with real movie quotes to get the best results.
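To make the seeding idea concrete, here is a minimal sketch of assembling a prompt from real quotes. Only the model name and temperature come from the settings above; the prompt wording, the `buildFakeQuotePrompt` helper, and its `count` parameter are assumptions, and in the actual game this step lives inside the Langflow flow rather than in hand-written code.

```javascript
// Settings reported above; how they reach the model depends on the flow.
const modelSettings = { model: "open-mixtral-8x7b", temperature: 0.5 };

// Hypothetical prompt wording: the prompt actually used in the game is not shown here.
function buildFakeQuotePrompt(seedQuotes, count) {
  if (seedQuotes.length === 0) {
    throw new Error("At least one real quote is needed as a seed");
  }
  // Number the real quotes so the model sees clear style examples.
  const examples = seedQuotes
    .map((quote, i) => `${i + 1}. "${quote}"`)
    .join("\n");
  return [
    "Here are real movie quotes:",
    examples,
    `Write ${count} new quotes in a similar style that are not from any real movie.`,
    "Return them as a JSON array of strings.",
  ].join("\n");
}

const prompt = buildFakeQuotePrompt(
  ["First sample quote", "Second sample quote"],
  3
);
```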

It turned out that a bit of finesse separated an obviously fake quote like "You can't handle the truth, or this spicy taco" from a more convincing one like "If you're yearning for chaos, my friend, chaos we shall have" (also fake). For some reason, we found that multiple LLMs really wanted to include food references. Once we dialed the settings in, the output was solid and the game became a lot more fun.

Multiplayer coordination and device orientation

PartyKit runs on Cloudflare Durable Objects, which are a very good primitive for a multiplayer game: each room is essentially a Durable Object that is provisioned at one of Cloudflare’s points of presence near wherever the game is initiated. This provides automatic load balancing at production scale.

Here’s a preview of our single file that runs all the coordination:

```javascript
export default class Server {
  constructor(room) {
    // Initialize some initial state.
    // initialState and defaultGameOptions are defined elsewhere in the source.
    this.timeRemainingInterval = null;
    this.roundCheckerInterval = setInterval(() => {
      this.checkRound(); // method omitted from this preview
    }, 1000);
    this.isNextRoundQueued = false;
    this.isGameStartedQueued = false;
    this.state = structuredClone(initialState);
    this.gameOptions = structuredClone(defaultGameOptions);
  }

  async onRequest(request) {
    // Process any requests over HTTP
  }

  onMessage = async (message, sender) => {
    const data = JSON.parse(message);

    // Get actions and respond to them (strongly typed)
    switch (data.type) {
      case "getState":
        return;
      case "joinTeam":
        return;
      case "leaveTeam":
        return;
      case "startGame":
        return;
      case "updatePhonePosition":
        return;
      case "rejectOption": // falls through: both option actions share a handler
      case "acceptOption":
        return;
      case "nextQuote":
        return;
      case "resetGame":
        return;
      default:
        console.log("Unknown message type", data);
    }
  };
}
```

For the sake of brevity, we’ve left out some implementation, but this outline gives you the gist of how we implemented the multiplayer feature. It’s all contained here. We were very impressed with PartyKit because as soon as we filled this in and ran `partykit dev`, we had real-time multiplayer infrastructure, instantly.

Lessons learned

Building this game was a great exercise in building production-grade software with generative AI. To wrap up, here are some lessons we learned.

- Perceived effort for GenAI can be misleading - We’ve seen it time and time again: the initial work on a GenAI application can go very fast, giving the impression that it is almost complete within hours or days, but the “last 20%” may actually take the most effort. In the case of UnReel (our game), ~95% of the GenAI logic and flow was completed within a day or so, but tweaking LLMs, temperature, and seed data to really nail the output we were looking for took the most effort overall. Make sure time estimates take this into account.

- Generating more results in a single step may be more efficient than asking LLMs for singular responses - LLMs, by their nature and depending on the task at hand, can easily take multiple seconds to return a response. Since UnReel required multiple questions (usually 10 in our case) for teams to answer, it was actually faster to ask the LLMs to return all 10 at once instead of generating a movie quote in real-time for each question.

- UI state is almost always better derived from server state - One important lesson we learned here was to never share state between server and client for these kinds of applications. Instead, the server contains all the state, and the UI derives it. If the UI cannot derive its state from server state, the state is likely improperly designed.

Without a single source of unidirectional state, our game was often in a very mixed and confusing state that we never intended it to be in. Using a single source of state, we were able to derive UIs for multiple different screens, including the game play, an admin, and a leaderboard. They all looked and acted differently, but remained perfectly in sync.

- Idempotency is king - With distributed systems and coordinating across so many concurrent sessions that are supposed to have identical states, we often ran into situations where a score would go from 24 to 23,085 when it was supposed to go to 32.

The reason for this was that user devices sometimes sent many of the same events to be processed as opposed to a single one. Creating behavior that is unaffected by identical repeat events was crucial in building UnReel.
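One common way to get this behavior is to give every event a unique ID and ignore any ID that has already been processed. The sketch below shows that technique under assumed names: the `eventId`, `teamId`, and `points` fields and the `createScoreboard` helper are hypothetical, and this is not necessarily how UnReel deduplicates events.

```javascript
// Hypothetical event shape: { eventId, teamId, points }.
// The eventId is what makes duplicates detectable.
function createScoreboard() {
  const seenEventIds = new Set();
  const scores = new Map();
  return {
    // Returns true if the event changed the score, false if it was a repeat.
    apply(event) {
      if (seenEventIds.has(event.eventId)) return false;
      seenEventIds.add(event.eventId);
      const current = scores.get(event.teamId) ?? 0;
      scores.set(event.teamId, current + event.points);
      return true;
    },
    scoreOf(teamId) {
      return scores.get(teamId) ?? 0;
    },
  };
}

const scoreboard = createScoreboard();
scoreboard.apply({ eventId: "round-1", teamId: "red", points: 24 });

// The same event arriving three times only counts once.
const repeated = { eventId: "round-2", teamId: "red", points: 8 };
scoreboard.apply(repeated);
scoreboard.apply(repeated);
scoreboard.apply(repeated);
// scoreboard.scoreOf("red") is 32
```

In a long-running room the set of seen IDs would need to be cleared or bounded, for example when the game resets.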

Check it out

Building this game, we learned a ton about Astra DB, Langflow, and PartyKit, and we gathered quite a bit of feedback that has already been actioned. Should you wish to try your hand at building with Astra DB or Langflow, feel free to sign up and give them a try.