Moving data structures off the Java heap and into native memory is important for keeping up with datasets that continue to grow while the JVM stays stuck at heap sizes of about 8GB. As of Cassandra 2.0, two major pieces of the storage engine still depend on the JVM heap: memtables and the key cache.

Cassandra 2.1 addresses the larger of these, memtables. Memtables are not moved entirely off heap, but the new memtable_allocation_type configuration option provides two settings that reduce their heap footprint:

- offheap_buffers moves the cell name and value to DirectBuffer objects. This has the lowest impact on reads -- the values are still "live" Java buffers -- but it only reduces heap usage significantly when you are storing large strings or blobs.

- offheap_objects moves the entire cell off heap, leaving on heap only a NativeCell reference that holds a pointer to the native (off-heap) data. This makes it effective for small values like ints or uuids as well, at the cost of temporarily copying the cell back on-heap whenever it is read.

The default behavior is unchanged for 2.1 (heap_buffers). We anticipate changing the default to offheap_objects for 3.0.
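To make the options concrete, here is a minimal sketch of the relevant line in cassandra.yaml, assuming a 2.1 node where you want to try the more aggressive setting. Only the option name and its three values come from the description above; the comments are ours.

```yaml
# cassandra.yaml (Cassandra 2.1)
# Values described above:
#   heap_buffers     - the 2.1 default, everything stays on heap
#   offheap_buffers  - cell name and value move to DirectBuffer objects
#   offheap_objects  - the entire cell moves off heap
memtable_allocation_type: offheap_objects
```

As with most cassandra.yaml settings, expect to restart the node for the change to take effect, and try it on a test cluster first.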

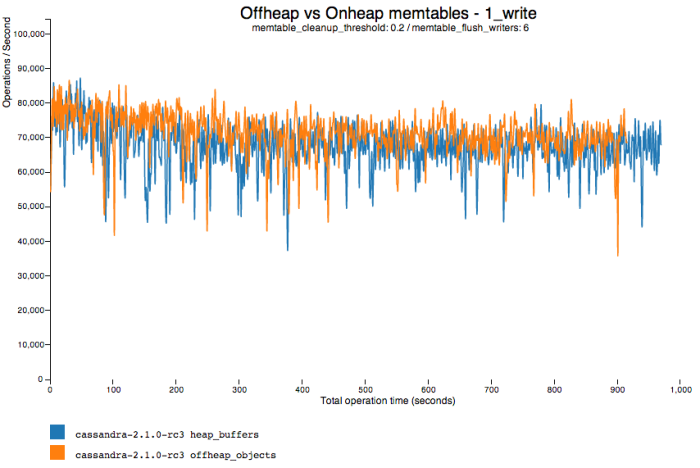

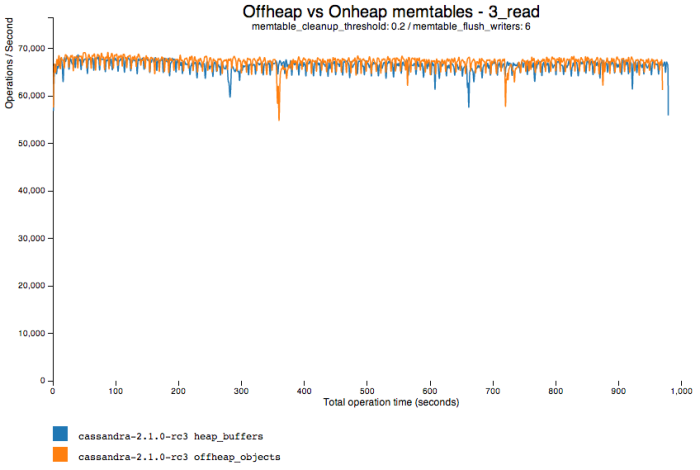

Let's look at how this plays out in the lab:

Writes are about 5% faster with offheap_objects enabled, primarily because Cassandra doesn't need to flush as frequently. Less frequent flushes produce bigger sstables, and bigger sstables mean less compaction is needed.

Reads are dead even within the accuracy of the benchmark, but this is a best-case scenario for offheap_objects: most of the reads are coming from sstables on disk, not unflushed data in memtables. We have a ticket open to add profiles to cassandra-stress that will work the memtables much harder. Stay tuned!