Running Kong on Kubernetes with DataStax Astra

Kong is a plugin-based, highly customizable, open-source API gateway. It handles policy application and request enrichment across monolithic applications, microservices, and serverless functions, ranging from simple authentication, request rewriting, and rate limiting to instrumentation for observability and mutual TLS. Since its release in 2015, Kong has supported Apache Cassandra as a backing data store, using it as storage for plugins, core Kong configuration, and other components. Kong and Cassandra are quite the pair, as both technologies scale horizontally and provide high availability across multiple failure domains.

Given the power of this dynamic duo, we wanted to take Kong for a spin with DataStax Astra. For the uninitiated, DataStax Astra is a cloud-based, hands-off, managed Cassandra-as-a-service offering: DataStax handles all of the operational aspects of managing your Cassandra cluster. Whether you run Kong in a single region or in an advanced hybrid or multi-cloud configuration, Astra is an excellent choice, because the configuration and plugin information is available from every location in a highly available, scalable environment.

To get started, we simply tried to point Kong at Astra. This proved to be a larger task than expected, because Astra uses TLS for client connections through an external endpoint. In customer applications, our drivers handle this using certificate and key information, usually distributed in a zip file. Unfortunately, lua-cassandra, the driver Kong uses, did not support this. Not to be deterred, our team took the Lua plunge and added support for proxy connections and certificates to the driver, along with the corresponding configuration options in Kong. With the addition of about 65 lines of Lua, Kong is now talking to Cassandra hosted on Astra.

We have opened pull requests to the upstream Kong and lua-cassandra projects so others can take advantage of these changes. For those who want to take it for a spin on Kubernetes now, check out our Helm repository for a simple installation and follow the directions below.

-

Validate your kubectl is connected to your cluster. We are using a GKE cluster on GCP for this example.

-

Install Helm 2

-

Install our repo with

helm repo add datastax-examples-kong https://datastax-examples.github.io/kong-charts/ -

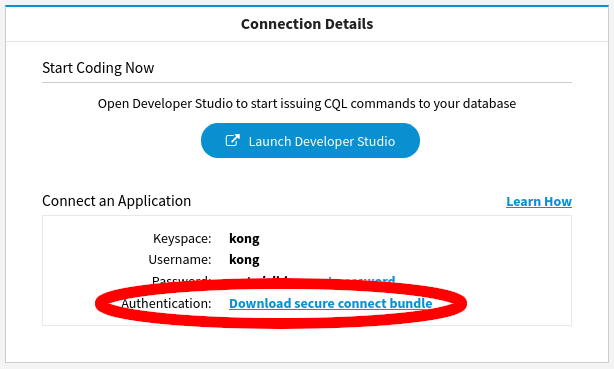

Spin up a DataStax Astra cluster, note the username, password, and keyspace name

-

Download the Secure Connect Bundle zip file and extract it

-

Note the endpoint and port from the

cqlshrcfile then collect theca.cert,cert, andkeyfiles into a directory called `secure-connect` -
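Rather than copying the endpoint and port by hand, you could read them out of `cqlshrc`. The sketch below assumes `cqlshrc` is an INI-style file with `hostname` and `port` keys under a `[connection]` section; check your own bundle's file before relying on it. The `cqlshrc_value` helper is hypothetical, not part of our chart or of Astra's tooling.

```shell
# Minimal sketch (assumption: cqlshrc is INI-style, with "key = value" lines
# grouped under "[section]" headers such as [connection]).
# Prints the value of KEY in SECTION of FILE, or nothing if it is absent.
cqlshrc_value() {
  local file="$1" section="$2" key="$3"
  awk -v section="$section" -v key="$key" '
    # A "[name]" line starts a new section; track whether it is the one we want.
    /^\[.*\]$/ { in_section = ($0 == ("[" section "]")); next }
    in_section {
      # Split on the first "=" and trim surrounding whitespace.
      idx = index($0, "=")
      if (idx == 0) next
      k = substr($0, 1, idx - 1)
      v = substr($0, idx + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }
  ' "$file"
}
```

For example, `ASTRA_PORT="$(cqlshrc_value bundle/cqlshrc connection port)"`, where `bundle/` stands in for wherever you extracted the zip file.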

Finally, deploy Kong with our Helm chart:

```shell
# Set environment variables to be used during helm install
ASTRA_PROXY_URL="CLUSTERID-REGION.db.astra.datastax.com" # hostname from cqlshrc in secure-connect bundle
ASTRA_PORT="3xxxx" # port from cqlshrc in secure-connect bundle
ASTRA_KEYSPACE="your_keyspace"
ASTRA_USERNAME="your_username"
ASTRA_PASSWORD="your_password"

kubectl create namespace kong

# Store the ca.crt, cert, and key files as a ConfigMap in the kong namespace
kubectl create configmap -n kong kong-cassandra-cm --from-file secure-connect/

# Helm 2 syntax: -n (--name) sets the release name, --namespace the target namespace
helm install datastax-examples-kong/kong -n kong \
  --namespace kong \
  --set env.database=cassandra \
  --set env.cassandra_contact_points="$ASTRA_PROXY_URL" \
  --set env.cassandra_use_proxy=true \
  --set env.cassandra_cert=/etc/nginx/secure-connect/cert \
  --set env.cassandra_cafile=/etc/nginx/secure-connect/ca.crt \
  --set env.cassandra_port="$ASTRA_PORT" \
  --set env.cassandra_ssl=true \
  --set env.cassandra_ssl_verify=true \
  --set env.cassandra_keyspace="$ASTRA_KEYSPACE" \
  --set env.cassandra_username="$ASTRA_USERNAME" \
  --set env.cassandra_password="$ASTRA_PASSWORD" \
  --set env.cassandra_consistency=LOCAL_QUORUM \
  --set image.repository=datastaxlabs/astra-kong \
  --set image.tag=v2.0.2 \
  --set admin.enabled=true \
  --set admin.http.enabled=true
```
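A typo or leftover placeholder in any of these variables is likely to show up only later, for example as a failed migrations pod. A small guard you could run right after setting the variables is sketched below; `check_astra_env` is a hypothetical helper, and treating a non-numeric port as an error is our assumption about what counts as "valid".

```shell
# Minimal sketch: return non-zero (and say why on stderr) if any ASTRA_*
# variable used by the helm install command is empty, or if ASTRA_PORT is
# not purely numeric (for example, still the "3xxxx" placeholder).
check_astra_env() {
  local status=0 var value
  for var in ASTRA_PROXY_URL ASTRA_PORT ASTRA_KEYSPACE ASTRA_USERNAME ASTRA_PASSWORD; do
    # Indirect lookup that also works in POSIX sh: value=${NAME:-}
    eval "value=\${$var:-}"
    if [ -z "$value" ]; then
      echo "missing: $var" >&2
      status=1
    fi
  done
  case "${ASTRA_PORT:-}" in
    *[!0-9]*) echo "ASTRA_PORT is not numeric: $ASTRA_PORT" >&2; status=1 ;;
  esac
  return "$status"
}
```

Calling `check_astra_env || exit 1` in a script stops the install before Helm ever sees a placeholder value.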

Helm then installs Kong on our Kubernetes cluster with the configuration values above. Checking the deployment with kubectl, we see:

```shell
kubectl get pods -n kong
```

```
NAME                              READY   STATUS      RESTARTS   AGE
kong-kong-75cf466b88-79bbt        2/2     Running     2          12m
kong-kong-init-migrations-t64kx   0/1     Completed   0          11m
```
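In a script, you might check this listing instead of reading it by eye. The sketch below assumes the default `kubectl get pods` column order shown above (NAME, READY, STATUS, ...); `pods_healthy` is a hypothetical helper name.

```shell
# Minimal sketch: read `kubectl get pods` output on stdin and succeed only if
# every pod is either Completed (such as the one-shot migrations job) or
# Running with all of its containers ready (READY shows n/n).
pods_healthy() {
  awk '
    NR == 1 || NF == 0 { next }          # skip the header row and blank lines
    $3 == "Completed" { next }           # finished jobs are fine
    {
      split($2, ready, "/")              # READY column, e.g. "2/2"
      if ($3 != "Running" || ready[1] != ready[2]) {
        print "not ready: " $1 " (" $2 ", " $3 ")" > "/dev/stderr"
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

Usage: `kubectl get pods -n kong | pods_healthy && echo "Kong pods look healthy"`.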

To validate that Kong is actually working, send a few requests to the Admin API:

```shell
# Kong Admin API test
kubectl port-forward -n kong svc/kong-kong-admin 8001:8001 &
sleep 1 # Wait a second while the port forwarding comes online
curl http://localhost:8001/ | jq .

## Create a service pointing to mockbin.org
curl -i -X POST \
  --url http://localhost:8001/services/ \
  --data 'name=example-service' \
  --data 'url=http://mockbin.org'

## Add a route connecting requests for example.com to our mockbin.org service
curl -i -X POST \
  --url http://localhost:8001/services/example-service/routes \
  --data 'hosts[]=example.com'

## Perform a request against the external IP of the Kong proxy with the header Host: example.com to match against our service
curl -i -X GET \
  --url "http://$(kubectl get svc kong-kong-proxy -n kong | tail -n 1 | awk '{print $4}')/bin/4bdd1415-1e7e-4713-8f5c-272a9af4858b" \
  --header 'Host: example.com' \
  --header 'Accept: application/json'
```
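Kong 2.x typically adds response headers such as `Via: kong/<version>`, `X-Kong-Proxy-Latency`, and `X-Kong-Upstream-Latency` to proxied responses, assuming header injection has not been changed in Kong's configuration. The hypothetical helper below checks a `curl -i` response for them; treating any one of these headers as proof of proxying is our simplifying assumption.

```shell
# Minimal sketch: read a raw HTTP response (as printed by `curl -i`) on stdin
# and succeed if its headers include ones Kong typically adds to proxied
# responses (X-Kong-Proxy-Latency, X-Kong-Upstream-Latency, or Via: kong/...).
has_kong_headers() {
  # curl -i output uses CRLF line endings; strip the CRs first.
  tr -d '\r' | awk '
    /^$/ { exit }                        # headers end at the first blank line
    {
      line = tolower($0)                 # header names are case-insensitive
      if (line ~ /^x-kong-(proxy|upstream)-latency:/ || line ~ /^via:.*kong\//) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: pipe the final `curl -i` request into `has_kong_headers && echo "proxied through Kong"`.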

If everything goes according to plan, the responses will include headers showing that Kong processed the requests. We are now running Kong on Kubernetes, backed by the power of Astra! Check out the Kong documentation for more examples of what Kong can do, and rest assured that the professionals at DataStax handle the management of Cassandra for you, so you can focus on deploying your applications.